Many run A/B tests, few do it right.

Ask a seasoned conversion rate optimization professional what the most crucial component of successful CRO experiments is, and they’ll answer: the right process.

But what does that right process look like? What are the prerequisites for a good test, and how do you run it correctly? What do you do with your test results afterwards? What if the test is inconclusive?

In this post, we answer these questions and try to go deeper than such posts usually go, backing our suggestions with insights from CRO experts who have decades of experience in conversion rate optimization.

Let’s go!

Definition

A Conversion Rate Optimization (CRO) test is an experiment on a website with the goal of figuring out which version of a page leads more visitors to take a desired action (like making a purchase or signing up for a service).

A CRO test involves creating different versions of the same webpage and showing them to different groups of visitors. The CRO test concludes with an analysis of which version was better at getting the users to take the desired action and why. Usually, the goal of a CRO test is to make your website more effective at converting visitors into customers.

First, let’s quickly run through the list of things that should be in place before you attempt to run your first test.

- A website. We are here to optimize conversion rate on a website, aren’t we?

- A website analytics tool. It could be Google Analytics 4, Adobe Analytics, Mixpanel, or something else. It could be Mouseflow as well, although it’s likely more useful for qualitative analytics than for quantitative analytics.

You’ll need a website analytics tool to collect quantitative data so that you can a) come up with hypotheses for your experiments and b) analyze the results.

- An A/B testing tool. There are plenty of good ones, such as AB Tasty, Convert Experiences, Optimizely, and others. Most of them are quite expensive.

Sadly, Google Optimize is now gone – it was a good free alternative to those listed above. There are still cheaper and fully functional options such as Varify.io with a fixed cost of a little more than $100 per month.

- A behavior analytics / customer experience analytics tool like Mouseflow. To run quality experiments, you’ll need many features behavior analytics platforms have to offer. Make sure the one you choose offers a good heatmap tool, a session replay tool with friction events tracking, conversion funnels, and a feedback survey tool. We’ll return to when you’ll need those as we go through the experimentation process.

One other thing to pay attention to when choosing a behavior analytics tool is making sure that it has an integration with the A/B testing tool that you chose. That’s totally necessary so that you can segment data for each variation and each experiment – and analyze it separately.

For example, Mouseflow offers quick pre-built integrations with the 8 most popular tools plus an API, so that you can build an integration yourself if it’s missing.

We have a detailed comparison of the best heatmap and session replay tools if you need to choose one.

- Management buy-in. That’s not a tool, but that’s no less important. All stakeholders should be aware of what you’re doing and why you’re doing it, and support your initiative.

You’ll need that support at pretty much every step of the CRO process: from getting developers and designers to do what you need, all the way to keeping HIPPOs (aka the highest paid person’s opinion) from interfering with the experiment.

That’s it for the prerequisites list. We’ve discussed a list of the best CRO tools of all kinds in another article if you need to pick your gear before diving into experimentation.

OK, we’re all set and ready to begin doing experiments the right way! And it always starts with research.

The main thing about each test is what it is that you’re actually testing – the idea.

Of course, you have a million and one ideas about what you can test – starting from different call-to-action copy variations and all the way to revamping the whole checkout process. So, you’ll need to choose wisely.

Why Research Is Important for CRO

Ideas are great, but many CRO experts agree on one thing: never run CRO experiments based on gut feeling. Even if they don’t fail (which is unlikely, let’s be honest), you won’t learn as much from a random experiment as you would from one with a well-researched hypothesis that is relevant to your particular case.

The same goes for ideas that you get from your boss or client without any underlying research. Neither your gut nor your boss’s should be making CRO decisions.

Does your boss or client need convincing?

Show them this story from Shiva Manjunath, host of the “From A to B” podcast, about spaghetti testing.

“Now, I don’t run any tests unless they’re backed by some form of data. That was a huge mindset shift for me – from spaghetti testing to strategic testing by means of corroboration of data sources into strong hypotheses.”

Or you can refer to this story from Daniël Granja Baltazar, CRO specialist from VodafoneZiggo, about a client who insisted on running “big and bold” experiments instead of small, but well-researched ones. Both stories showcase the paramount importance of research for successful experimentation.

The main goal of CRO is learning. You want to learn as much as possible from each test, and valuable learning is only possible if the idea you’re trying to test has a foundation.

Ideally, you should have a roadmap with your well-researched hypotheses outlined and prioritized, so that every time you’re running the most impactful experiment possible. We’ll discuss creating a CRO roadmap in a separate post. If you started with doing a CRO audit of your website, you likely already have something like a roadmap, which is great. If you don’t, you’ll create one as you go.

How to Formulate a Hypothesis for a CRO Test

A hypothesis is the idea that if you change something on your website, some metrics will improve because users will behave differently. A well-formulated hypothesis usually has the following format:

We hypothesize that if we do A (e.g. change CTA copy on a landing page), we will get B (e.g. increase conversions to signups by 5%), because C (reason why it’s so, proven by data).

The “because C” part is the one that is often overlooked, but it is the one that makes your hypothesis data-driven. And to have this data, you need to do some research.
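If you keep your hypotheses in a script or export them from a spreadsheet, the format above can be sketched as a small Python structure. This is only an illustration: the `Hypothesis` class, its field names, and the example values are our own assumptions, not part of any CRO tool.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """Hypothetical container for the 'if A, then B, because C' format."""
    change: str            # A: what we change
    expected_outcome: str  # B: what we expect to happen
    rationale: str         # C: the data-backed reason

    def statement(self) -> str:
        # Render the hypothesis in the sentence format used in this post.
        return (
            f"We hypothesize that if we {self.change}, "
            f"we will {self.expected_outcome}, because {self.rationale}."
        )

    def is_data_driven(self) -> bool:
        # A hypothesis without the "because C" part is just a guess.
        return bool(self.rationale.strip())


# Example values are illustrative only.
h = Hypothesis(
    change="change the CTA copy on the landing page",
    expected_outcome="increase conversions to signups by 5%",
    rationale="heatmaps suggest only 40% of visitors see our key message",
)
print(h.statement())
```

Forcing the rationale into its own field makes it harder to skip the “because C” part when adding a new idea to the backlog.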

Data Sources for Generating a Hypothesis

For a hypothesis, you need data. Here are the most common sources of this data:

- Quantitative analytics – GA4, Adobe Analytics, whatever you’re using. Look for discrepancies in the numbers – there are plenty of things you can learn from a good quantitative analytics tool while searching for anomalies.

- Behavior analytics. To be more precise, I mean heatmaps and session recordings. We have guides on how to read a heatmap (all sorts of them) and what you can learn by recording website visitors. I’d suggest that you refer to them to learn about all the types of data you can extract from heatmaps & session recordings.

To give you a rough idea, it could be something like “our heatmaps suggest that only 40% of web page visitors see our most important messages” or “our recordings show that, apparently, some users get into an endless loop while trying to do this specific task in our app.”

- User feedback surveys (that your behavior analytics tool can likely handle for you). These are good for asking direct questions. Is the page helpful? Did they find what they were looking for?

Of course, it’s better to fire surveys strategically rather than randomly, so it helps to have some data that supports why you want to survey people about this particular thing on this particular page. If you’re new to user feedback surveys, we have a post that can help you get more out of them.

- User testing. That’s almost like feedback, but even more interactive (and more expensive). With platforms like Userlytics you can invite real users who match your ideal customer profile to perform certain tasks on your website. You get to watch them do it and ask questions about their perception of the website, what’s easy, what’s hard, what they expect, etc.

Again, it’s better to know what exactly you want to ask these questions about before starting with user testing. Watch this webinar with Rawnet’s Optimization strategist and CRO expert Georgie Wells diving into more details about how to do it.

There can, of course, be more data sources: support tickets, feature adoption data, error logs, market research, reviews, and so on. If you want to dive deeper into how to create data-driven CRO hypotheses based on all these sorts of data, we, as always, have a post for you.

Matt Scaysbrook, a CRO veteran with over a decade of experience, suggests that it’s important to make sure you get at least two correlating data sources. That raises the probability of your experiment winning and makes your CRO strategy more sustainable.

If you don’t know where to start and don’t have the data yet, CRO best practices such as adding social proof or moving CTAs above the fold make for good hypotheses to try out.

Best practices are not truths set in stone. They are good hypotheses to test. This realization has changed my career as a marketer and optimizer.

Once you’re done with research, it’s time to document what your test is going to look like. Your hypothesis is what you’re going to test, and your documentation is how you’re going to test it.

Documentation can live wherever you want, but make sure that it’s some place where you can easily return to it – like Google Sheets, for example. Optimizely has a nice example of what this sheet can look like. It should contain not only the hypothesis, but also many more things:

-

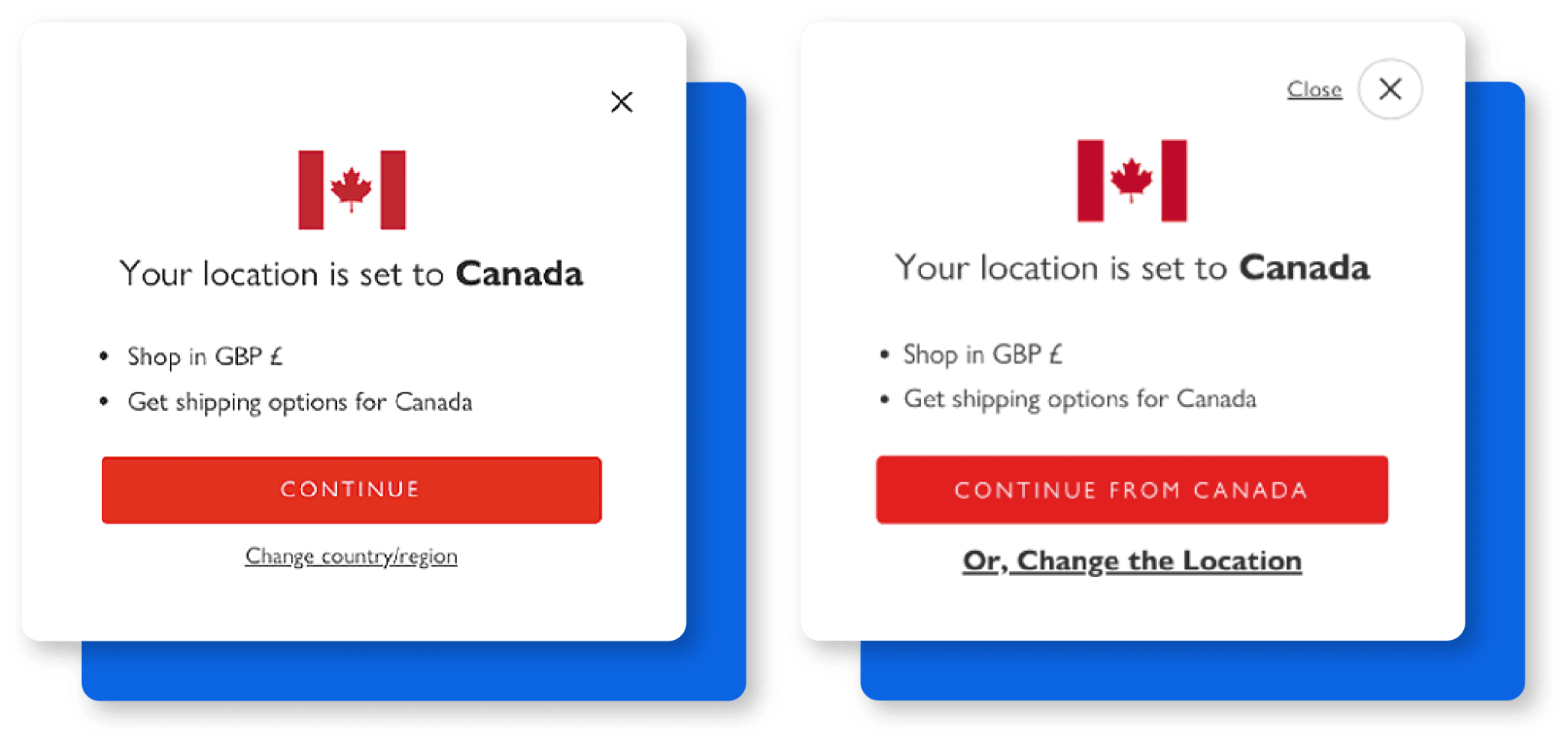

What exactly and how are you going to change for the variation (variations)? Write down all things that you want to tweak, but remember that ideally it should be one thing at a time. It’s just that the scope of this one thing could be a little flexible: for example, you can either only change the copy on the popup or change the copy and make sure users can close it if they click outside the popup.

In both cases, you’re changing only one thing – the popup – but the scope of the changes is different. Theoretically, the first approach is cleaner, but realistically, you may want to stick to the second one, because a larger change is more likely to produce a conclusive experiment.

That’s a real example from a test that Derek Rose ran, by the way, and they managed to improve their conversions by 37% with (almost) just that.

- What are the primary and secondary metrics you want to watch? Likely, you would want to consider not only the metric that you specified in the hypothesis, but also some other metrics that you’ll need to monitor to ensure that everything is going well.

Here’s an example of why you may need to do so. Lucia van den Brink, founder of Women in Experimentation, once ran an experiment with the primary target of increasing the click-through rate (CTR). Formally, the experiment was a success – the CTR went up. But the conversion rate went down, because people were clicking only to get the information they were missing, and that frustrated them. That’s likely something that you’d like to avoid, right?

- What audience segment (segmented geographically or otherwise) do you want to run the test on?

- On which pages would the changes happen? Is that something that you’re introducing site-wide, or only on a couple of specific pages? That will influence the experiment duration, because you’ll need to run a test on just one page for much longer to be conclusive.

- What are the expected outcomes? Yes, that’s part of the hypothesis, but in the documentation you can describe them in a little more detail.

- What’s the necessary test duration and traffic? Depending on how much traffic the page gets per day, the percentage of visitors that will see the variation, the expected changes, and so on, you’ll need to run your experiment for a different amount of time to reach statistical significance. You can use an experiment calculator like this one to calculate the experiment duration for you. Most A/B testing tools have one built in as well.

- What are the necessary resources to run the test? Who is going to be doing what, and how many developer and designer hours (or points, or whatever they measure their work in) will it take?

- Who are the stakeholders?
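To get a feel for what an experiment duration calculator does under the hood, here is a minimal sketch based on the standard normal-approximation formula for comparing two conversion rates. The function names, the default 95% confidence and 80% power, and the example numbers are assumptions for illustration. Your A/B testing tool may use a different statistical model, so treat its built-in calculator as the source of truth.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Visitors needed in EACH variant to detect the given relative lift.

    Uses the common two-sided, two-proportion normal approximation.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # e.g. ~1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # e.g. ~0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def duration_days(n_per_variant, daily_visitors, variants=2, traffic_share=1.0):
    """Days needed if `traffic_share` of daily visitors enters the test."""
    total_needed = n_per_variant * variants
    return math.ceil(total_needed / (daily_visitors * traffic_share))


# Illustrative numbers: 5% baseline conversion rate, hoping for a 10% relative lift,
# 2,000 visitors per day on the tested page, all of them included in the test.
n = sample_size_per_variant(baseline_rate=0.05, relative_lift=0.10)
print(n, "visitors per variant,", duration_days(n, daily_visitors=2000), "days")
```

Note how quickly the required sample grows as the expected lift shrinks. This is why a test on a single low-traffic page has to run much longer than a site-wide one.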

Types of CRO Tests

Also, this is the time to decide which type of test you want to run. Most likely, that’s going to be an A/B test – that’s what most CROs run. But in addition to A/B tests, there are also some other test types.

- A/A test. That is testing the page against itself, which is usually done to make sure your testing setup works as expected and that your CRO efforts won’t be in vain because of some misconfiguration.

- Split testing. That’s very similar to A/B testing, but in this case different visitors get to see different URLs. For the purpose of today’s post, we can say that split testing and A/B testing are the same.

- A/B/n test. The easiest way to explain it is saying that it’s an A/B/C or A/B/C/D test. Of course, there could be even more variations that you test against the control.

- Multivariate test. This is when you change more than one variable and create variations that have all the combinations. If you change 2 variables (one with versions A and B, the other with versions X and Y), that would be 4 combinations: AX, AY, BX, BY. Multivariate testing can give you more insights, but this type of test is harder to analyze and needs much more website traffic to be conclusive.
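The number of combinations in a multivariate test grows multiplicatively, which is why it needs so much more traffic. Here is a small sketch that enumerates the combinations with Python’s `itertools.product`. The variable names (`headline`, `cta`) are made up for the example.

```python
from itertools import product


def multivariate_combinations(variables):
    """Return every combination of versions across all tested variables."""
    names = list(variables)
    return [dict(zip(names, combo)) for combo in product(*variables.values())]


# Two variables with two versions each, as in the example above.
variables = {"headline": ["A", "B"], "cta": ["X", "Y"]}
combos = multivariate_combinations(variables)
print(["".join(c.values()) for c in combos])  # ['AX', 'AY', 'BX', 'BY']
```

Adding a third variable with two versions would already double this to 8 combinations, each of which needs enough visitors of its own.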

After you’ve thoroughly documented the experiment, and know who is doing what, when, and where, it’s high time to make that variation that you want to test. Here are a few best practices that CRO experts recommend following at this stage.

- Using code instead of implementing variations through A/B testing tools’ WYSIWYG (what you see is what you get) visual editors. That way, you won’t need to rebuild the variation properly once you figure out your experiment worked. Also, there won’t be any unknown variables.

- Building your variation on the staging environment first and doing thorough quality assurance (QA) checks before shipping it to the production environment. Nobody wants their users to have a poor experience because of some implementation errors. One important part of QA is trying the updated page on different devices and different browsers. You can ask your colleagues to help you with that, as you likely don’t have the whole zoo of devices yourself.

Pro tip: don’t loom over their shoulders while they’re testing your page, just watch Mouseflow recordings afterwards.

- Finally, despite the fact that you obviously want your experiment up and running sooner rather than later, don’t rush. And don’t make designers and developers rush either. The users will be happier if the experience is really pleasant and the page loads quickly. And the developers will thank you when they don’t end up with a ton of poor, hastily written code that’ll need refactoring later.

Oh, we got to finally run something!

If it’s the first test you’re running, run an A/A test (testing the page against itself with your A/B testing tool) first. Stephen Schultz from Varify recommends that to make sure your A/B testing setup is working just fine. If you find that an A/A test suggests that there’s a winner and the test is conclusive – you have a problem with how you’ve set up things.

If the setup seems to be alright, and if there are no other experiments running at the moment, you can proceed.

One more thing: since we’re talking about CRO, it’s likely that you’re aiming at increasing conversion rate somewhere. To make it easier for you to track this conversion, you can set up conversion funnels with a tool like Mouseflow for both control and variations. A conversion funnel is a very easy visual tool to show conversion rates that is very useful for reporting.

Only Run One Test and Change One Thing at a Time

It’s hard to overstate the importance of running just one experiment at a time if you want to learn something valuable in the end.

Say, you run two at the same time. If you’ve seen great results, which experiment do you attribute them to? Or, if the overall result seems to be inconclusive, is it because both experiments produced inconclusive results, or is it because one was in fact a clear winner, and another a clear loser?

This is why there should be no overlaps between CRO tests, and in each test, there’s only one thing that changes. In the roadmap (if you have one), one experiment follows the other. If you really want to speed things up, try multivariate testing. This is where you can test 2 or 3 things at a time, but only if your traffic allows you to.

Avoid Stopping Tests Too Early

In most cases, it’s better to wait for the full duration of the experiment before stopping it and jumping to conclusions. But we, humans, are impatient. And so are our stakeholders – which comes as no surprise, as most of them also seem to be human.

So, after a few days of seeing conversions go down, either you or your client / manager may want to stop the experiment. That likely would be a mistake.

Ubaldo Hervas, Head of CRO at LIN3S, highlights the importance of the so-called novelty effect. Users can be scared by something that is new, which makes the conversions go down. But over time, they get used to it, and the conversions might go up. Wait for the novelty to wear off a little before hitting that stop button.

The “novelty effect” refers to the phenomenon where a change or something new on a website (such as a new design, feature, or product) initially attracts more (or less) attention and engagement from users, leading to a temporary increase/decrease in metrics.

Check User Behavior While the Test is Running

One thing to pay attention to while you’re running your CRO test is whether users are behaving the way you expected them to. If they aren’t, that could be a result of something working the wrong way – some errors, broken links, loops, whatever.

If you find a problem with the technical setup that is severely impacting the test, this is perhaps the only situation where it may be worth stopping it early: you’ve already learned everything important from this test.

Pro tip: Watch session recordings for website visitors from different browsers and device types – this way you’ll know all users are getting an equally pleasant user experience.

Analyzing the results is actually the most important, but, perhaps, the most tedious part.

Is the experiment conclusive?

The first thing here is to determine if the experiment is conclusive: you’ve reached statistical significance and you see a clear winner. You can use a statistical significance calculator for that. Note that 95% confidence isn’t a magic number. If you don’t have much traffic, going down to 90% confidence will give you a higher chance of getting conclusive experiments (while, of course, also increasing the risk of declaring a winner that isn’t one).
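If you’re curious what a significance calculator does, one common approach is a two-sided z-test for two proportions. The sketch below is a simplified illustration under that assumption. The function names and visitor numbers are hypothetical, and your A/B testing tool may rely on a different method (Bayesian or sequential statistics, for example).

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test comparing conversion rates of control (A) and variation (B).

    Returns (z, p_value). Assumes large samples and at least one conversion
    and one non-conversion overall.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Cannot test: no variation in outcomes.")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def is_conclusive(p_value, confidence=0.95):
    """True if the difference is significant at the chosen confidence level."""
    return p_value < 1 - confidence


# Illustrative numbers: 500 of 10,000 visitors converted on control,
# 557 of 10,000 on the variation.
z, p = two_proportion_z_test(500, 10_000, 557, 10_000)
print(f"z={z:.2f}, p={p:.3f}")
print("conclusive at 95%:", is_conclusive(p, 0.95))
print("conclusive at 90%:", is_conclusive(p, 0.90))
```

With these made-up numbers, the same result passes the 90% threshold but not the 95% one, which is exactly the trade-off described above.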

If your experiment is conclusive, congrats! If not, or if your variation clearly lost to the control, don’t worry. To have a true experimentation mindset, you need to be ready to fail often, and that also includes inconclusive CRO tests.

Post-test Segmentation and Learnings

The important thing in conversion rate optimization is how much you’ve learned. And it goes way beyond “this version won, this lost”. The main learnings are in understanding why things happened. And for that, you again need to dive into the data.

After the test is concluded, you can analyze heatmaps and watch session recordings for the variation to see if users behaved the way you thought they would. That’s where the integration between your behavior analytics tool and A/B testing tool comes in handy.

If you’ve set up a conversion funnel, you can separate recordings of those who converted (or those who didn’t) and watch them to find behavior patterns.

These are examples of segmentation, and actually, a lot of CRO experts recommend doing post-test segmentation: segmenting your results by device type or by country and trying to see if there’s something that stands out.

Keep an eye out for outliers. Here’s an example: in his CRO Journal story, Amrdeep Athwal, Founder & Director at Conversions Matter LTD, describes a CRO experiment where just one purchase was so big that it influenced the results of the test. It turned out that a CEO liked the product so much that he bought it for every senior employee in the company. These situations happen, so watch out for something like that.

I learned the hard way that you need to dig into all experiments, both winners and losers, to find outliers that could skew data.
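One simple way to spot such a purchase is to flag order values that sit far above the rest. The sketch below uses the common 1.5 × IQR rule of thumb. That rule, the function name, and the order values are our assumptions for illustration, not the method used in the story above.

```python
from statistics import mean, quantiles


def find_outlier_orders(order_values, k=1.5):
    """Return order values above Q3 + k * IQR (a common rule of thumb)."""
    q1, _, q3 = quantiles(order_values, n=4)
    upper_fence = q3 + k * (q3 - q1)
    return [v for v in order_values if v > upper_fence]


# Hypothetical order values from one variation; one bulk purchase stands out.
orders = [40, 45, 50, 55, 60, 65, 70, 5000]
outliers = find_outlier_orders(orders)
cleaned = [v for v in orders if v not in outliers]

print("outliers:", outliers)
print("average order value with outliers:", mean(orders))
print("average order value without outliers:", mean(cleaned))
```

Don’t delete flagged orders blindly: look at them first, then decide whether to report the results both with and without them.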

Segmenting by desktop vs mobile and looking at key conversion metrics and recordings for the different device types can help you understand if the user experience was equally pleasant or if there were any issues on some devices. For example, were the CTAs clearly visible both on desktop and on mobile? Did the user need the same amount of clicks or taps to go through the checkout process on these devices?
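If you can export raw visit data from your tools, post-test segmentation boils down to grouping by segment and variant. Here is a minimal sketch in plain Python. The record fields (`variant`, `device`, `converted`) and the sample data are hypothetical, so adapt them to whatever your export actually contains.

```python
from collections import defaultdict


def conversion_by_segment(visits, segment_key):
    """Conversion rate per (segment value, variant) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [conversions, visitors]
    for visit in visits:
        key = (visit[segment_key], visit["variant"])
        counts[key][0] += int(visit["converted"])
        counts[key][1] += 1
    return {key: conv / total for key, (conv, total) in counts.items()}


# Tiny made-up sample; real exports would contain thousands of rows.
visits = [
    {"variant": "control", "device": "desktop", "converted": True},
    {"variant": "control", "device": "desktop", "converted": False},
    {"variant": "control", "device": "mobile", "converted": False},
    {"variant": "variation", "device": "desktop", "converted": True},
    {"variant": "variation", "device": "mobile", "converted": False},
    {"variant": "variation", "device": "mobile", "converted": False},
]

for (device, variant), rate in sorted(conversion_by_segment(visits, "device").items()):
    print(f"{device:8} {variant:10} {rate:.0%}")
```

Keep in mind that each segment contains fewer visitors than the whole test, so differences between segments are hints for further research rather than conclusive results.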

At this stage, the most important thing is to document everything you find. These are the most valuable insights, and you’ll need them for further experiments.

It never stops with one test, right? Running just one gives you ideas for three more. And so you keep running them, continuously optimizing your website for conversions, diving into the results, and coming up with new hypotheses. Rinse and repeat.

Also, it’s worth noting that conversion rate optimization is more of a culture than a one-time effort, so be patient. Seasoned CRO experts prefer to call it either just optimization or website optimization, because it’s not necessarily about conversions only. It’s about changing the mindset, about testing everything before blindly committing to it.

This mindset helps a lot with risk management and growth, and many say that it’s the only healthy mindset a company can have nowadays. Yes, it is exactly this mindset that Eric Ries describes in his bestselling book, The Lean Startup. And conversion rate optimization is one good way to apply it.

I’ve seen experimentation changing organizational cultures. Going from managers saying: “I know what works and this is how we’re going to do it” to being data-driven and looking for the best solutions together with a team.

This post gives you a 6-step action plan for running impactful conversion optimization tests, with advice and examples from seasoned CRO experts. They have decades of experience and successful careers in the field of conversion optimization, bringing their clients six- and seven-figure profits thanks to the experiments they’ve run. They learned a lot of what they recommend the hard way.

And remember, be ready to fail again and again. That’s absolutely fine, all those experts did it. What you learn from your failures is what matters most. You learn about your customers, you learn about the market, and in the end these learnings will help you run successful tests that increase conversions and give revenue a nice bump.