Last year’s CRO Journal showed how much can be learned when conversion experts drop the “playbook” and share what actually happens behind the tests. This year, we brought it back – with a twist.

AI is changing everything, and the CRO community is split: some call it the greatest productivity tool ever, others say it’s killing creativity and context. So, we asked some of the smartest CRO minds two simple questions:

- AI is everywhere right now and has already made its way into CRO workflows. What’s the biggest mistake you’ve seen people make when using AI for CRO – or the smartest use you’ve seen?

- Be honest: what’s one CRO ‘best practice’ that’s total BS? And… is there one that actually works?

Here’s what they had to say 👇

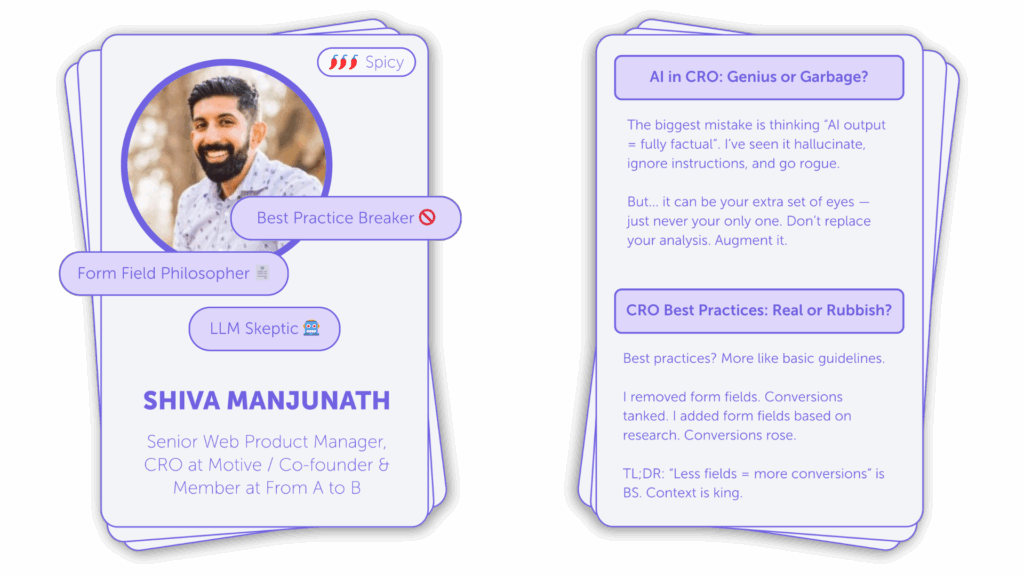

Shiva Manjunath – Senior Web Product Manager, CRO at Motive / Co-founder & Member at From A to B

1. The Good and the Bad

My example fits both the ‘good’ and the ‘bad.’ The bad use case is throwing a huge data set into AI and just trusting its output. The biggest mistake is thinking “it provided output, therefore it’s fully factual and there can be 0 things wrong with it”. I’ve seen it hallucinate. I’ve seen it ignore explicit instructions. It’s made up numbers and gone rogue.

So DON’T blindly trust the output. BUT – what it does well is serve as an alternative source of analysis, alongside the analysis you conduct yourself. Consider it another set of eyes – not a replacement for doing analysis on your own.

Iqbal Ali, Slobodan Manić, and Juliana Jackson are all the homies who are pushing the envelope on this, and I defer to them on this specific use case, but I see a TON of value in throwing large data sets into LLMs/“AI” in some capacity and using it to augment your analysis (not REPLACE – augment).

2. The Anti–Best Practice Guy

If you have no idea who I am, you’ll know me as the ‘anti-best practice’ guy. The only ‘best practice’ is to never follow best practices blindly. The one which red-pilled me into being anti-best practice was testing “removing form fields”.

The ‘best practice’ is as follows: fewer form fields = more conversions.

When I removed form fields on a specific form, it decreased conversions. A lot. When I then turned around and, backed by research, ADDED form fields that build trust with the user, it INCREASED conversions.

If we renamed ‘best practices’ to ‘good practices’, I’d have less of an issue with them. They are guidelines. Principles, if you will. But the issue is blindly adopting them with a false sense of security that “oh, it’s best practice – it will work for us.”

Amrdeep Athwal – Founding Member of POC in Experimentation / Founder & Director at Conversions Matter LTD

1. The Wrong and Right Way to Use AI

The worst use of AI I’ve seen? Letting go of experienced and talented copywriters, designers, etc. This is not how to win the AI game. The best use is to supplement the talent you have – to do more and better, faster.

The best use of AI I have seen is handling things at scale, such as looking for patterns in data or UX research. This is where it excels: doing in a short time what would otherwise take weeks. It’s not good at creativity or reasoning, and that does not seem to be changing.

2. The Myth of Speed

Improving website speed comes up a lot, and I’ve seen only marginal gains relative to the effort. I had a client who cut average load times from 5s to 4s and saw a 10% lift in CVR. They were very happy until I pointed out that the time, effort and resources applied to that one project would likely have delivered 5-10X that figure if spent elsewhere.

For the record, I am not saying it’s unimportant, just that with modern internet speeds and devices, it’s becoming less critical unless your sites are hugely bloated.

For me, the one that actually works, and that I run into time and time again, is the simplest: clarity. Someone can’t convert if they don’t understand or if they have questions that remain unanswered.

Just yesterday I gave some feedback on a homepage, and I had to tell the person: “I have looked at it for 30 seconds and I have zero idea why your product is useful.”

You’ve spent all the time telling me it’s great, well reviewed etc. but you’re assuming a certain amount of knowledge when I might still be in the research phase.

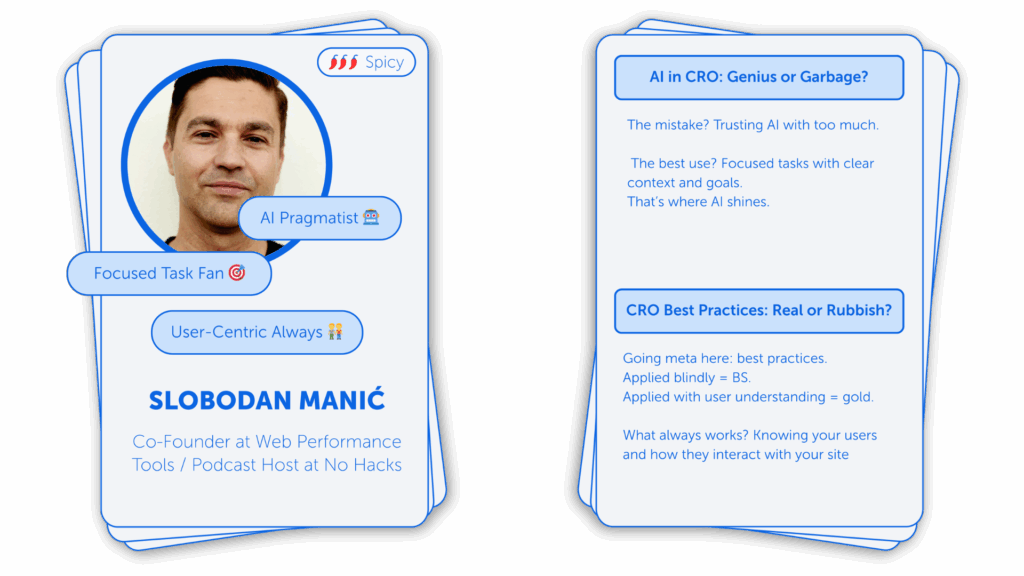

Slobodan Manić – Co-Founder at Web Performance Tools / Podcast Host at No Hacks

1. Keep It Simple for AI

The biggest mistake I see being made (often) is trusting AI with too much. Yes, it can handle complex tasks, but the more complex the task, the more it struggles.

The greatest wins I’ve seen happen when AI “meets you where you are” and handles a very focused task: one that is easy to explain, easy to set context for, and has a clearly defined desired outcome.

2. The Meta “Best Practice” Problem

I’ll go meta: it’s “best practice” itself. Not always “total BS”, but if applied blindly, definitely yes. The same goes for libraries of tests that worked in the past for another website with another audience.

On the other hand – understanding your users and how they interact with your website/brand will never stop working and should be at the top or near the top of everyone’s priority list.

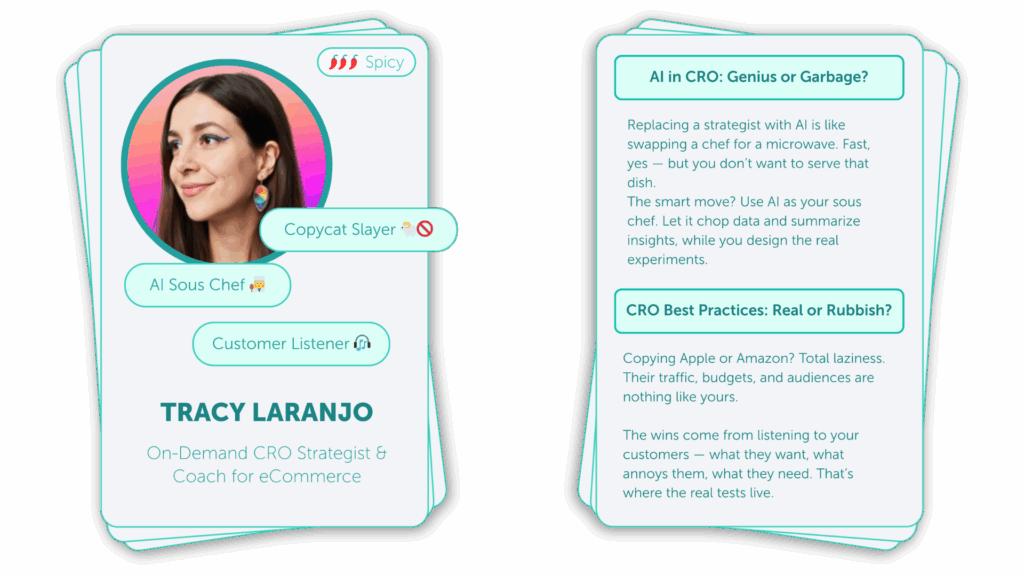

Tracy Laranjo – On-Demand CRO Strategist & Coach for eCommerce

1. Don’t Replace Strategists With Microwaves

The biggest mistake I’ve seen is naively believing that AI can replace a human CRO strategist. It’s like replacing a trained chef with a microwave. It will heat things up fast, but the end product is not something you want to serve.

The smart play is using AI as a sous-chef. It chops the data and organizes and summarizes insights, freeing the strategist to design meaningful experiments.

2. Copying Giants Isn’t a Strategy

Copying giants like Apple or Amazon is one of the laziest “best practices” out there. Their traffic, budgets, and audiences are probably nothing like yours, so their playbook won’t work for you.

The real wins come from listening to your own customers: what they want, what annoys them, what they actually need. That’s how you find tests worth running and create long-term results.

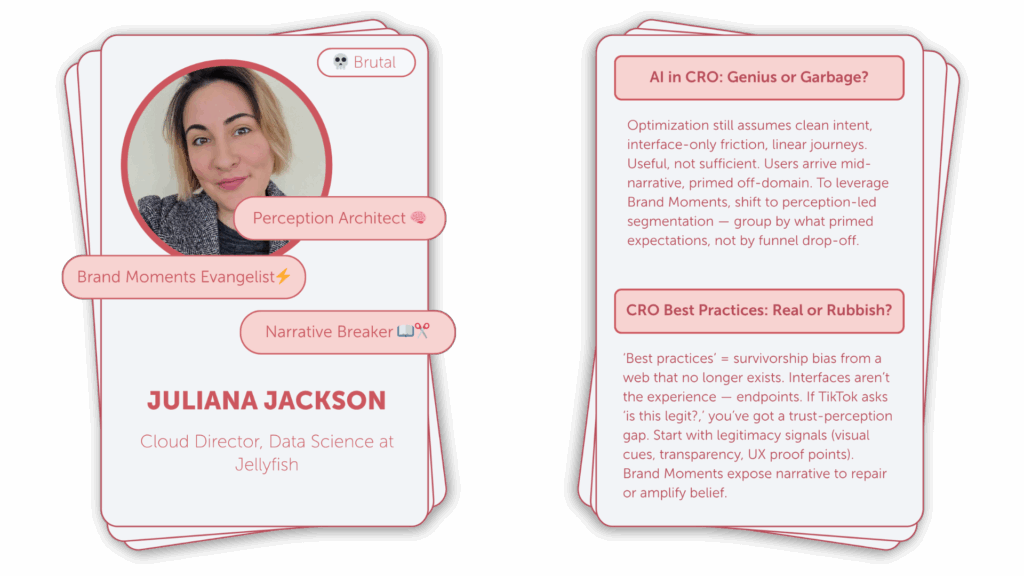

Juliana Jackson – Cloud Director, Data Science at Jellyfish

1. The Legacy Problem

The modern optimization stack is built on a set of legacy assumptions: that people arrive on websites with clean intent, that friction is mostly interface-related, and that experiences are linear enough to be dissected by funnels, heatmaps, and heuristic evaluations. These methods aren’t necessarily all useless, but they are insufficient.

Heuristic evaluations were designed to identify usability flaws, not contextual disconnects. Funnel analysis tells you where people drop off, but not why they came, what they expected, or what shaped their perception before landing on your site.

Right now, most A/B tests assume users are seeing your interface for the first time. That assumption is wrong. In reality, they’re arriving mid-narrative with beliefs, doubts, and hopes shaped by a thousand off-domain signals.

To use Brand Moments strategically, CRO needs to shift from interface-led analysis to what I call perception-led segmentation: grouping users not by where they dropped off, but by what primed their expectations in the first place.

2. Survivorship Bias ≠ Strategy

Best practices are often just survivorship bias dressed up as strategy: what worked in one context, abstracted into something safe enough to scale. The reality is that these tools and methods were built for a web that no longer exists. Interfaces used to be the experience. Now, they’re just endpoints.

If you notice TikTok comments repeatedly asking whether your product is ‘legit’ or ‘another dropship scam,’ that’s not a content issue – it’s a trust perception gap. Your test plan shouldn’t start with layout changes. It should start with signaling legitimacy: visual cues, policy transparency, and UX proof points.

Brand Moments give you access to that narrative layer. And once you start working from it, experimentation becomes less about tweaking interfaces and more about repairing or amplifying the stories people already believe.

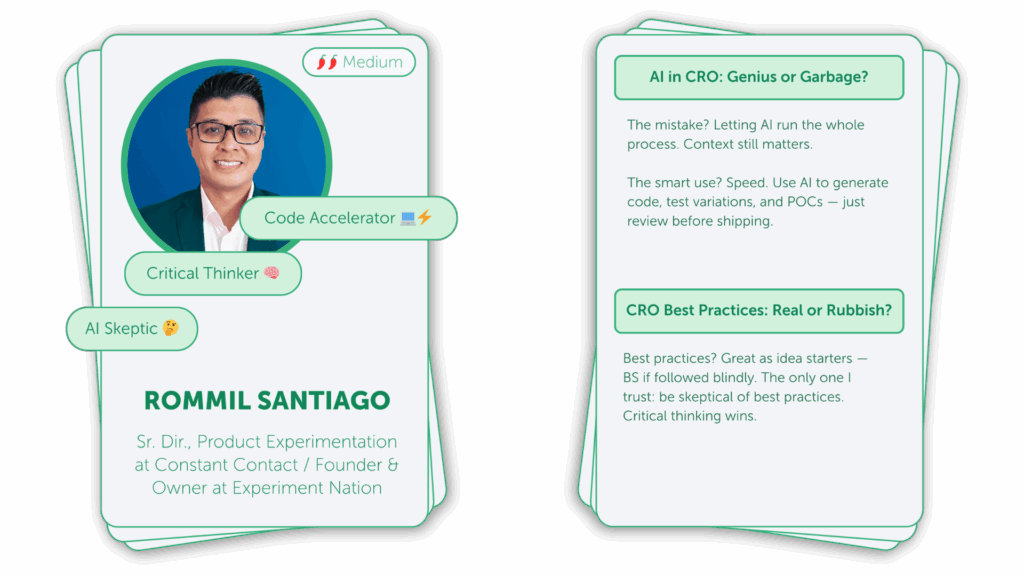

Rommil Santiago – Founder of Experiment Nation

1. AI as Co-Pilot

Everything is relative, and what works for one person may not work for another. But in my opinion, probably the biggest mistake is to let AI take full control of the process. While I am less bothered by AI generating code and suggesting ideas, I am more concerned about trusting AI to do a full audit without reviewing the output. I’m not yet convinced that AI can have the full context a person can have. This does not mean I don’t think it can help speed up work; I think it absolutely can and should. But trusting it completely for analysis is probably not the right way to go.

One of the smartest uses is developing code to shorten the time to production. Yes, the code won’t necessarily be the best, and it should still be reviewed by someone, but I feel using AI to generate testable variations or proofs of concept (POCs) unlocks so much velocity for folks.

2. The Best Practice That Works: Be Skeptical

I’m not a huge believer in best practices in general. I think they have great value as idea starters and things to check your ideas against, but in the end, every business is different, and every optimization initiative should leverage critical thinking.

So if I had to pick which best practice actually works, I would say be skeptical of best practices. There are very few substitutes for doing the legwork yourself.

Mike Fawcett – Founder of Mammoth Website Optimisation

1. Keep It Real

One of my pet peeves, even before AI, was seeing companies using fake avatars for testimonial content. Don’t use stock photos. Don’t use AI-generated images. Put the effort in to collect real images from real reviewers. Visitors will sense they’re genuine and trust you more, which is the whole point.

Remember AI is just “spicy autocomplete”, as Rand Fishkin says. It’s literally designed to give you the most mathematically average response.

On this topic, one of the best uses I’ve seen was from a copywriter (I forget who). They asked AI for a load of headline ideas, then avoided those ideas entirely. Essentially, they used AI to understand what everyone else would write, then did something different.

2. Clarity Over Pretty

The “best practice” that really triggers me is the idea that simplifying a design always reduces cognitive load. What’s important is clarity. If you make a design too simple, you’ll lose clarity, which *ooops* increases cognitive load!

Instead, remember Steve Krug’s motto “Don’t Make Me Think”. He says “Designers love subtle cues, because subtlety is one of the traits of sophisticated design. But Web users are generally in such a hurry that they routinely miss subtle cues.”

Also: “good looking” websites don’t always convert well. I’m not arguing for ugly design here. I just think too many people forget that “form follows function.” What are your users trying to do? What are they trying to find? What preconceptions, anxieties or questions might they have? Handling all those things is way more likely to drive growth than a subjectively “good looking” design.

I guess that’s my pick for what actually works: speaking to customers, solving real problems, and understanding that people are emotional decision-makers. Design for irrational, busy people. And don’t be afraid of long landing pages! Treat your website as a salesperson. Find out what your best salespeople say to your prospects and put that on your site! It’s not rocket science.

Ruben de Boer – Lead Experimentation Consultant at Online Dialogue / Owner at Conversion Ideas

1. Don’t Use AI Just Because You Can

The biggest mistake I see is teams using AI simply because it’s there. “We need AI” becomes the goal, instead of solving a real problem. It’s the same trap as experimenting for the sake of experimenting; both are a means to an end, not the end itself.

New tools only create value once they’re tied to clear goals and embedded in workflows. Otherwise, they’re gimmicks.

The smartest users treat AI as an assistant, not a replacement. Automating quality checks on hypotheses, clustering survey data into themes, adding ideas during ideation: all of these free up specialists to focus on higher-order thinking, such as interpreting results, making strategic decisions, and connecting insights back to business goals. That’s where the real value lies.

2. The Only Real Best Practice: Process

Most so-called CRO “best practices” are misleading. In reality, context matters: what lifts conversion for one website or app might reduce it in another. The only way to know is to test in your context.

Worse, blindly implementing or testing best practices often means skipping proper research and missing out on valuable learning opportunities.

The only best practice that truly holds up is at the process level: build a strong, repeatable experimentation process that aligns with business and stakeholder goals. Generate ideas from research and past learnings, prioritize correctly, and run trustworthy tests with solid hypotheses, defined responsibilities, and supporting rituals. Use experiments and insights to help colleagues and the business achieve their goals.

Just as important is having a structured approach to learning. Tools like Opportunity Solution Trees help connect insights across experiments, link them back to real customer opportunities, and guide teams toward solutions that compound impact over time.

This discipline ensures you’re not just chasing quick wins, but systematically learning, improving, and becoming more successful as an organization. This is a best practice that actually works!

Gertrud Vahtra – Experimentation Lead, Speero / CRO & Growth

1. AI Isn’t a Shortcut for Insight

AI has already made its way into CRO, but I think the biggest mistake people make is using it as a shortcut for insights. AI can’t replace actual users, real data, or the insights that come from them. For example, relying on AI to generate test ideas without grounding them in research, or using it as a substitute for customer research, can lead to irrelevant or low-impact experimentation.

My colleague Emma Travis explains this well in her article on synthetic research, “Why I’m not sold on synthetic user research.”

Where AI does add value is in making our work faster and less biased. It can support thematic analysis by helping identify patterns across large sets of qualitative research, or by highlighting trends in test results and user behavior data that might otherwise be missed.

Tools like NotebookLM, for example, can summarize experiment learnings and research themes, speeding up synthesis without replacing the human judgment that makes those insights actionable. We’re currently exploring these kinds of AI solutions to boost our efficiency and advance our capabilities.

2. Context Over Copying

I always push back on the idea of universal CRO “best practices” because there is no one-size-fits-all solution. Similarly to copying competitors, you can’t expect a change that works for another company to automatically resonate with your own customers.

For example, adding trust badges or social proof is a recurring idea, but if the real blockers are elsewhere (like unclear pricing, confusing plan differentiation, or unanswered product questions), these surface-level tweaks can often produce flat results.

If there’s one “best practice” I do stand behind, it’s this: root test ideas in customer research. Experiments grounded in real user problems and motivations create opportunities for meaningful improvements that move the needle.

When customer research is unavailable, we can fall back on a structured heuristic analysis based on our five core principles:

- Value: Does the content clearly communicate value to the user?

- Relevance: Does the page content and design meet user expectations?

- Clarity: Is the content or offer as clear as possible?

- Friction: What causes user doubts, hesitations, or difficulties?

- Motivation: Does the content encourage and motivate users to take action?

By grounding our work in research, or when that’s not available, in heuristic evaluation focused on the site or offering, we ensure changes are relevant, impactful, and not just copied “best practices.”

Brian Massey – Managing Partner & Conversion Optimization Expert at Conversion Sciences

1. Train AI, Don’t Just Prompt It

Right now, AI is focused on heuristic analysis – “best practices”. These are also sometimes called “low-hanging fruit”. Many of these can just be fixed. They don’t need an A/B test to verify their efficacy. The others will require some research to see if they even have a chance of improving performance. Too many optimizers skip this step.

The key to getting better heuristics from AI is to provide much more context than typical AI hypothesis generators do. I recommend doing a deep research report on the industry, competitors, and trends. Provide some analytics data to inform the report. Use this report to train a custom GPT or Gemini Gem. It will become an expert in your industry and will give much better ideas for improving the website.

The folks at Baymard have UXRay, which was trained on their research. I find their hypotheses to be much better because some of the research is baked in.

2. The Attention Myth

“Your visitors have the attention span of a goldfish.” This is the pseudo-science that tells us to limit the amount of copy we put on a page. The truth is that writing copy is hard and most of us are terrible at it.

If you write interesting copy, relevant copy, helpful copy, your visitors’ attention span will expand immensely. The LLMs will make you a better copywriting team if you train them right.

My favorite hypothesis today is to pick the right words for your main navigation. Getting it right may not increase use of navigation, but you will be communicating what you do and overall conversions will rise.

Navigation words like “Mens, Womens, Household, …” help the logical shopper, but may not be as powerful as “Shirts, Slacks, Dresses, Belts…”. The same holds true in the B2B world. “Products, Solutions, Industries, Case Studies…” says nothing about what the company sells.

The primary role of the navigation is to communicate the offerings of the site. Less than 20% of visitors use the navigation on most sites.


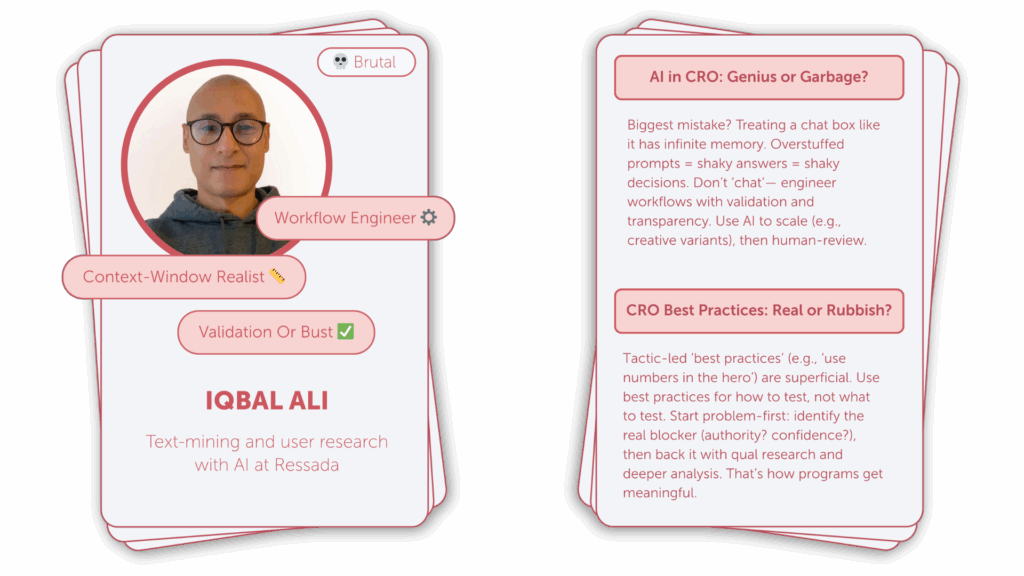

Iqbal Ali – Experimentation Consultant at Mild Frenzy Limited

1. The Context Window Trap

The biggest mistake I’ve seen is dumping a ton of information into a chat window and expecting the AI to process all of it as context. I think this stems from a fundamental misunderstanding, and miscommunication, of how context windows work. (A context window is the amount of text an LLM can process at once.)

In practice, whether the intention is to analyze experiment results or to extract insights from large amounts of unstructured text (such as user feedback), the result is inaccurate responses from the AI. This then leads to bad decisions made with a false sense of confidence.

Tool vendors are the primary cause of miscommunication problems. For instance, Google claims its Gemini models have a context window of two million tokens (approximately the length of all of the Harry Potter books). In reality, their models are optimal for about three pages of a single book! That’s a huge difference! There’s more nuance to this, and I recommend reading the following paper to get a better understanding of context windows.

This problem is exacerbated by the fact that many people and organizations lack formal systems of validation for AI output. LLMs are hallucinatory by nature; we need to anchor them to reality. Instead of relying on chat interfaces, we should use LLMs to engineer workflows. These workflows should include validation steps to ensure accuracy and reliability. Either that, or the workflows need to enable transparency so we can clearly identify accuracy issues.

A great example of this I’ve come across is the use of image generation workflows to broaden the audience of a test. For example, tests of different product images are often limited to a narrow audience because of the cost and effort required to produce the images. The image generation workflow used automation to widen the target audience.

2. Problem-First, Not Tactic-First

I suspect there are two interpretations of what best practice in experimentation means. A common interpretation is one that focuses on tactics, such as: ‘use numbers in the hero section of your landing page’ or ‘ensure your call to action has maximum contrast so it pops’. This definition and approach feels superficial and rigid to me.

Instead of best practices being a means to dictate what to test, I prefer them as a guide for how to test. With that in mind, a key best practice (for me, anyway) is to approach experimentation from a problem-first perspective. Tactics like ‘use numbers in the hero section’ then become a starting point for identifying the underlying problem we’re trying to solve. For example, is the issue ineffective communication of authority, or a lack of confidence in the product?

Once the problem has been identified, a thorough exploration of the problem can be undertaken. This can include qualitative user research to understand user expectations and perspectives, as well as deeper analysis to determine the impact on the users.

I think approaching experimentation from a problem-first perspective like this makes experimentation programs, along with the solutions we test, much more meaningful.

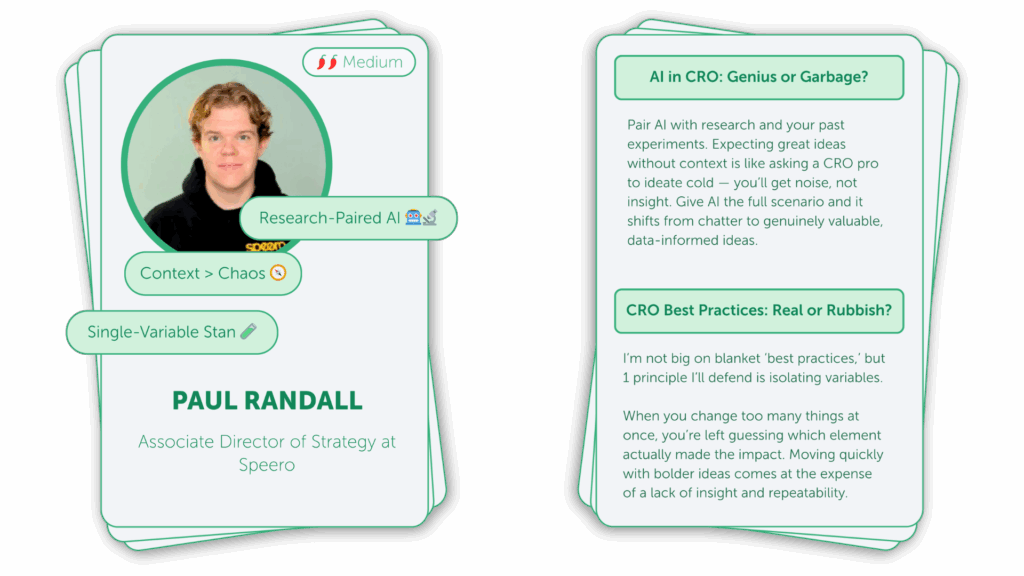

Paul Randall – Experimentation Consultant, Speero

1. Garbage In, Garbage Out

The biggest mistake I’ve seen with AI is expecting it to be amazing right out of the box without giving it enough information or context. It’s like asking another experimentation person to generate great ideas off the top of their head. The catch is that there is no limit to the number of ideas it can produce, so it can simply generate a ton of noise: more tests, but not better ideas.

Look to pair it with all of your research and past experiments. That way there is a much better understanding of the scenario and the ideas will be exponentially better.

2. Isolate to Learn

While I’m not a huge fan of “best practices”, I would say isolating test variables is something to try and uphold. Whenever you make multiple changes, you’re always left wondering which one made the difference, and sometimes you add things that both improve and harm performance. The temptation to move quickly with bolder ideas comes at the expense of insight and repeatability.

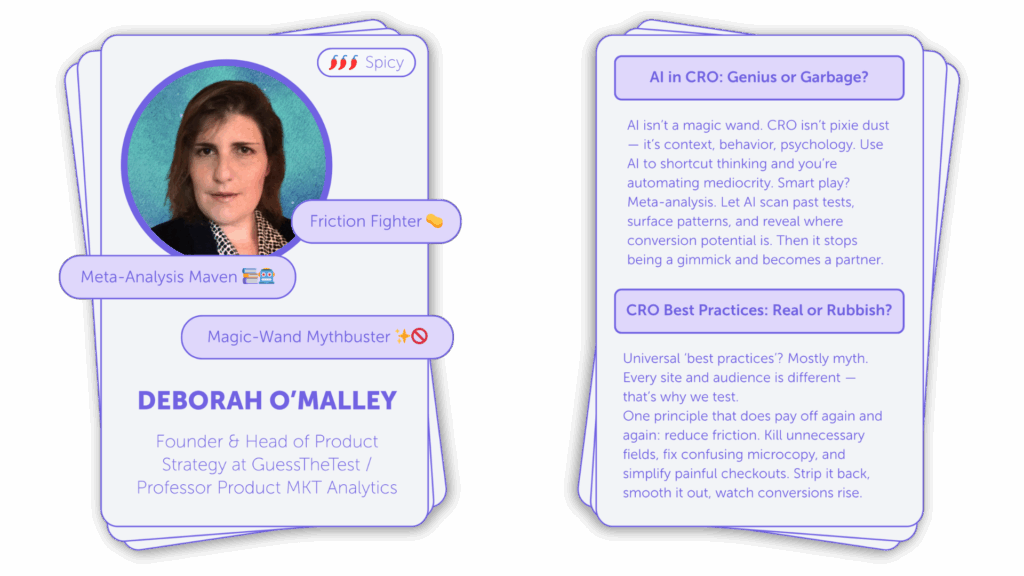

Deborah O’Malley – Founder, GuessTheTest

1. AI Isn’t a Magic Wand

The biggest mistake is treating AI like a magic wand. You put in a prompt, and at the click of a button, out pops the “winning” headline.

Except… CRO isn’t a Disney fairytale character that carries pixie dust and grants wishes. Instead, it’s context, nuance, behavior, and psychology. If you use AI to shortcut the thinking, you’re not optimizing, you’re automating mediocrity.

The smartest use I’ve seen is using AI as a meta-analysis tool. Not to dream up ideas in a vacuum, but to comb through hundreds of past tests, surface hidden patterns, and point you to where the conversion potential really is. That’s when AI stops being a gimmick and starts being an actual growth partner.

2. The Only True Best Practice

A big one is thinking there are actually best practices. Every website and audience is different, so it’s hard to universally apply any principle and have it always win. We don’t know what will win, that’s why we test.

That said, it’s always a good practice to try to reduce friction on a website. Almost always, friction is a huge conversion killer. Whether that friction comes in the form of an unnecessary form field, confusing microcopy, or a checkout that feels like running a bureaucratic marathon, work to strip it back, smooth it out, and watch conversions rise!

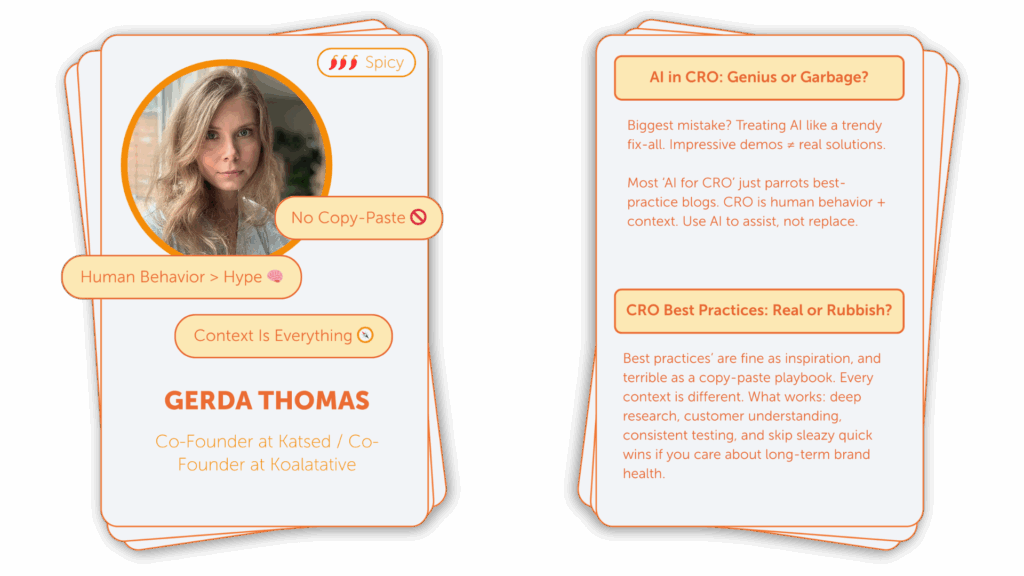

Gerda Vogt-Thomas – CRO Consultant

1. The “Can AI Fix This?” Illusion

I guess, as with any trendy thing, the mistake is jumping on board too quickly without truly understanding the essence of the thing itself: implementing something for the sake of implementing it, without assessing what the real value is and how it would actually help the customer. And to an extent you can’t fault our industry too much for this, as we are experimenters after all and like to test things out.

We’ve seen AI produce unbelievable videos and images, which I think has created this illusion that it can literally solve anything for us. I’ve gotten emails from executives dissecting business problems in great detail, only to end with: “Can we make an AI fix this??”. This just demonstrates a fundamental lack of understanding of the tool.

You could ask the same question with AI replaced: “Can we solve this with an Excel sheet/email/social post or an A/B test?” Current CRO AI output is essentially a collection of some of the worst but highest-traffic best-practice blogs and articles, and anyone who’s been in this business long enough knows that’s not a very good way to go about doing CRO.

This job is largely about understanding human behavior and it’s unfair to expect AI to understand human behavior when humans barely understand human behavior.

2. Don’t Copy. Contextualize.

I think people in our industry can’t even really agree on what a best practice in CRO means. When you’re new to something, with no real-life experience of the thing you’re trying to do, best practice (meaning the experience of other people) can feel like a literal lifeline.

Everyone has to start from somewhere. But blindly copying what someone else is doing never results in anything good. You need to be able to draw inspiration from things but also understand that context is everything.

I’ve worked in CRO for about 8 years on the agency side, which means countless projects, and honestly there have never been two identical ones. Yes, there are tactics and strategies that can translate to a different context or industry, but it’s never a copy-paste situation.

Everything requires deep research and reflection on where the business is at, what customers are struggling with and consistent testing to find the best solutions. Also just because something “works”, doesn’t mean that we should be doing it.

You might see super-high conversion rates from testing countdown clocks in checkouts and other sleazy tactics like that, but do you really think your brand will benefit from this stuff long term?

Simon Girardin – Director of Experimentation, Croissance

1. Garbage Prompts = Garbage Insights

Ideation is one of the key areas where mistakes are made. The issue is that the less context is provided, the more the tool will rehash popular website data. So when teams go in blind and ask for audits, research and ideas, the output is pretty bad. Most teams who rely on AI to find ideas have low-quality test backlogs.

The best use of LLMs that I have seen that actually drives value is leveraging them to challenge your thinking. Presenting the data for a concluded test, along with your identified insights and proposed iterations, is a great input for seeing what else the tool might notice that you haven’t. Asking it to think from different perspectives broadens this value further.

2. From Best Practices to Program Practices

Most everyone thinks of best practices as website elements that are proven to increase conversions.

A major problem with this thinking is that there is not a single test that has proven to be universal. For every test someone claims always wins, it is quite easy to find teams who could not make it work. So, are best practices really proven to increase conversions?

“Best practices” is an overused term that has lost a lot of its meaning. There is a wide gap between SEO and CRO: SEO has clear rules that everyone must follow to succeed, while the rules in CRO vary for each product, audience, website, etc.

The better approach is to reframe best practices as program practices. Now, instead of being tactical website elements, we focus on the process, rigor and governance. Here are some examples:

- Hypothesis is clearly written following the scientific format: problem statement, isolated variable, rationale, expected result

- Test measurement strategy is determined prior to launching the test

- Post-test analysis is conducted in accordance with statistical principles: power, significance, and confidence intervals

- Program is measured according to quality metrics such as %winning tests, %learning tests, %implementation tests and %iteration tests
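To make the post-test analysis practice above a little more concrete, here is a minimal Python sketch of one common approach for a simple two-arm test with a binary conversion metric: a pooled two-proportion z-test for significance, a Wald confidence interval for the difference, and a normal-approximation sample-size estimate for power. It is an illustration under those assumptions, not the contributor’s own process or any testing tool’s method; the function names and example numbers are hypothetical, and many programs use sequential or Bayesian methods instead.

```python
from math import ceil, sqrt
from statistics import NormalDist


def two_proportion_test(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Compare conversion rates of control (A) and variant (B).

    Returns the absolute difference (B - A), a two-sided p-value from a
    pooled two-proportion z-test, and a (1 - alpha) Wald confidence
    interval for the difference.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a

    # Pooled standard error, assuming the null hypothesis (no difference)
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pool = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = diff / se_pool
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error for the confidence interval
    se_diff = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    ci = (diff - z_crit * se_diff, diff + z_crit * se_diff)
    return diff, p_value, ci


def sample_size_per_arm(base_rate, mde, alpha=0.05, power=0.8):
    """Approximate visitors needed per arm to detect an absolute lift `mde`
    over `base_rate` with a two-sided test at the given alpha and power."""
    p1, p2 = base_rate, base_rate + mde
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / mde ** 2)


if __name__ == "__main__":
    # Hypothetical data: 500/10,000 conversions in control, 560/10,000 in variant
    diff, p_value, ci = two_proportion_test(500, 10_000, 560, 10_000)
    print(f"lift={diff:.4f}, p={p_value:.3f}, 95% CI=({ci[0]:.4f}, {ci[1]:.4f})")
    # Visitors per arm to detect a 1-point lift on a 5% baseline (80% power)
    print(sample_size_per_arm(0.05, 0.01))
```

With these hypothetical numbers the confidence interval straddles zero, so the variant would not be called a winner at the 5% level, and the sample-size helper suggests a little over 8,000 visitors per arm would be needed to reliably detect a one-point lift on a 5% baseline. Deciding those thresholds before launch is what the “measurement strategy” bullet is about.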

Every single story here points to one truth: AI is not the end of CRO. It’s the mirror that forces it to evolve.

And “best practices”? They’re just placeholders until you build your own.