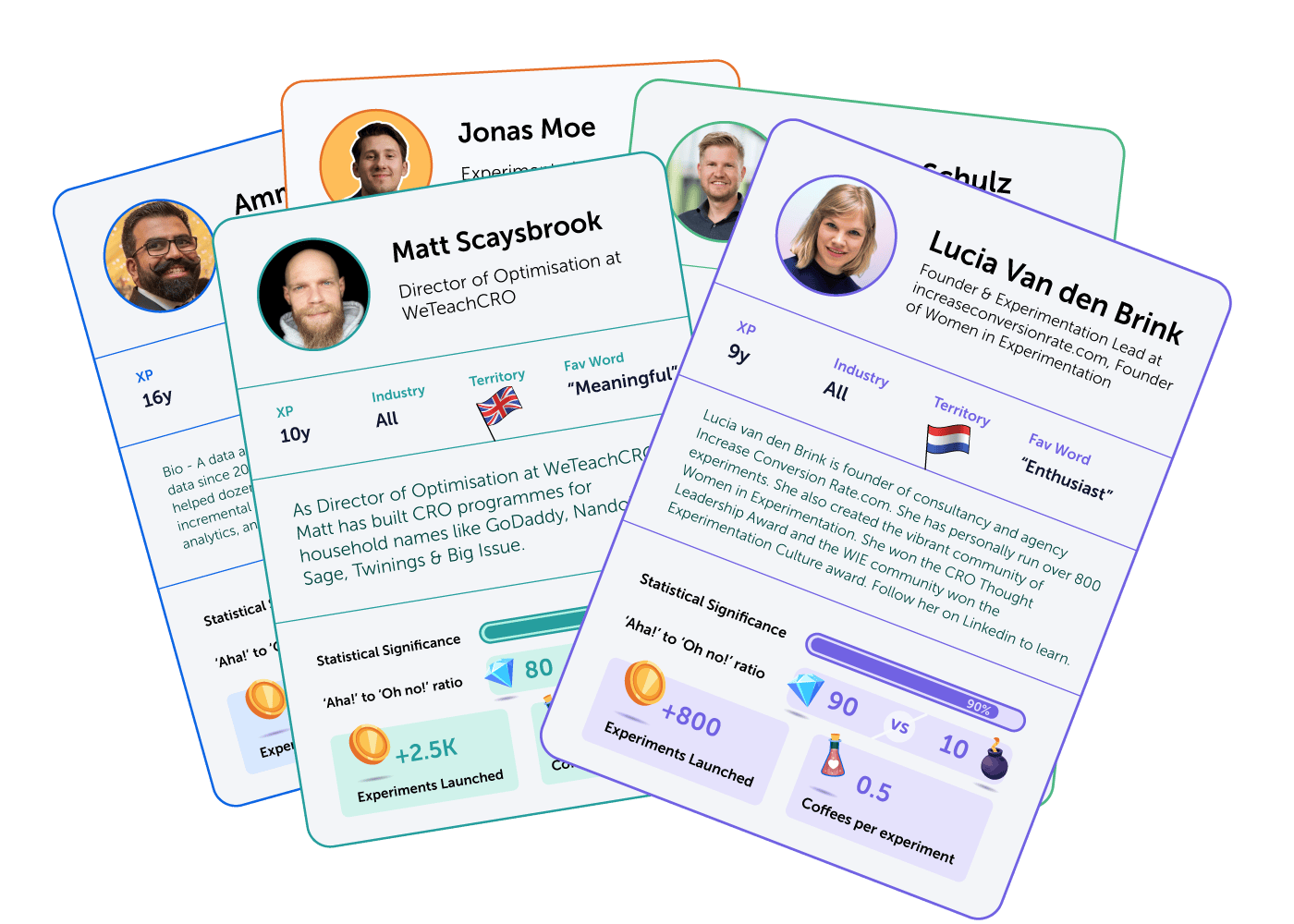

Amrdeep Athwal - Founder & Director at Conversions Matter LTD

CRO and Christmas Miracles

I ran an experiment for a B2C company that sold confectionery. We did some work with their checkout to streamline the process, enclose it and add some trust elements as well as some upselling, and so on.

The experiment’s primary metric was AOV (average order value), because changes related to upselling and cross-selling were big, and the rest were more like refinements.

The control ended up winning by a large margin. At first I could not understand why. I dug into the data with the help of our analytics tool and saw the issue.

The control had one huge order – easily 10-15 times bigger than the usual AOV. And this order was skewing things. Turns out, some CEO decided he liked the products so much that he bought them for every senior employee of the company as a Christmas gift.

I learned the hard way that you need to dig into all experiments, both winners and losers, to find outliers that could skew data. And keep in mind that seasonality can affect results. In the end, we re-ran the test in the new year with some tweaks – and it went well.
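To make the outlier check concrete, here is a minimal Python sketch of the kind of comparison that would have surfaced the problem. The order values, the `aov_report` helper, and the "10 times the median" threshold are all made up for illustration; they are not the data or the rule from the actual experiment.

```python
from statistics import mean, median

def aov_report(order_values, outlier_multiple=10.0):
    """Summarize order values and flag orders far above the typical order.

    An order counts as an outlier when it exceeds `outlier_multiple` times
    the median order value (an arbitrary, illustrative threshold).
    """
    typical = median(order_values)
    threshold = outlier_multiple * typical
    outliers = [v for v in order_values if v > threshold]
    kept = [v for v in order_values if v <= threshold]
    return {
        "aov_all": mean(order_values),
        "aov_without_outliers": mean(kept),
        "outliers": outliers,
    }

# Hypothetical order values: the control contains one bulk corporate order.
control = [20, 25, 30, 25, 20, 30, 25, 400]
variant = [30, 35, 30, 40, 35, 30, 35, 40]

control_report = aov_report(control)
variant_report = aov_report(variant)

for name, report in (("control", control_report), ("variant", variant_report)):
    print(name, report)
```

With these invented numbers, the control "wins" on raw AOV but loses once the single bulk order is set aside. Whether to exclude, cap, or simply report such orders separately is a judgment call for each test.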

Experimenting With Trust

My very first experiment defined my whole career. And it was literally the first project I was given on day 1 working in digital, after I switched from direct marketing.

It was for a cosmetic surgery client, and they wanted us to look at a particular cosmetic surgery they offered. They had about a 10% enquiry-to-booking rate, and enquiring was their main CTA.

So I looked at all the different pages and saw that the location pages for the hospitals they owned had the highest engagement metrics.

After some more reading about the brand and looking at its competitors, I saw that their core differentiation was that they owned and ran their own clinics rather than working out of clinics belonging to third-party private or public establishments.

I realized that going for cosmetic surgery is no easy decision. And when people make it, they want comfort and assurance – they prefer to be in a specialized clinic close to home, and that was something that none of the competitors could offer.

So, working with our design team, we created a CTA with a map of the UK and pushpins with all the relevant hospitals to visually convey this message, as well as variations in wording to push the message home.

The experiment was a great success. We ended up increasing traffic to the enquiry form by over 400%, and enquiries for this procedure went up by over 100%.

This taught me to use quantitative data to build a picture of how users interact with the brand and what they care about, and then to use empathy to put myself in the user’s place and see what the brand’s core value proposition is and how it aligns with users’ needs. That can change a lot both for users and for businesses.

Daniël Granja Baltazar – CRO Specialist at VodafoneZiggo

Standing Up to a “Big and Bold” HIPPO

I was working at a digital agency and got a well-known Dutch luxury clothing brand as a client. During our introduction call I presented a few A/B test ideas based on data. The founder of the company dismissed all the ideas. He wanted to test “big and bold ideas” only.

I showed him a few tests that I ran for clients in the past quarter to convince him that subtle changes can have a big effect. At the end of the meeting he gave in: “But if this first A/B test does not work, we are switching to a big and bold test next.”

I agreed and proceeded to set up the A/B test. It was quite simple: adding the most used payment methods underneath the “add to cart” button. However, I personalized those payment methods based on the five biggest markets where we were going to A/B test this (The Netherlands, Germany, France, USA, and Canada).

The result? The A/B test saw a statistically significant uplift in conversion ranging from 2% to 6% (depending on the market). “Low risk culture countries” like Germany saw a stronger conversion uplift than “high risk culture countries” like the United States. Nevertheless, this “simple” A/B test generated tens of thousands of euros of extra revenue for the client each month.

After that A/B test the founder did not mention “big and bold A/B test ideas” ever again.

The Moment You Can’t Help But Become a CRO

Your first test is like your first love – you remember it forever. It really shaped me as a CRO. I was working for a Dutch museum which naturally wanted to sell more tickets online.

At the time there was a big debate going on internally on how to improve the exhibition pages on their website.

There were two camps:

- The marketers wanted short pages with visuals and a big “buy” button.

- The curators (who wrote the content) wanted lengthy and detailed pages to explain what the exhibition was about. And it was how the exhibition pages looked at the time.

I proposed setting up an A/B test in Google Optimize (which was free – that always helps with convincing) to test which version would work best. The marketers and the curators both agreed.

I set up the A/B test, pitting the detailed exhibition pages against shorter, more visual pages that briefly described the exhibition.

The result? Users spent twice as much time on the new exhibition pages and bought eight times more tickets for the exhibition compared to the control variant. That A/B test proved to me (and the Dutch museum) the value of A/B testing.

I’ve been a fan of experimentation ever since!

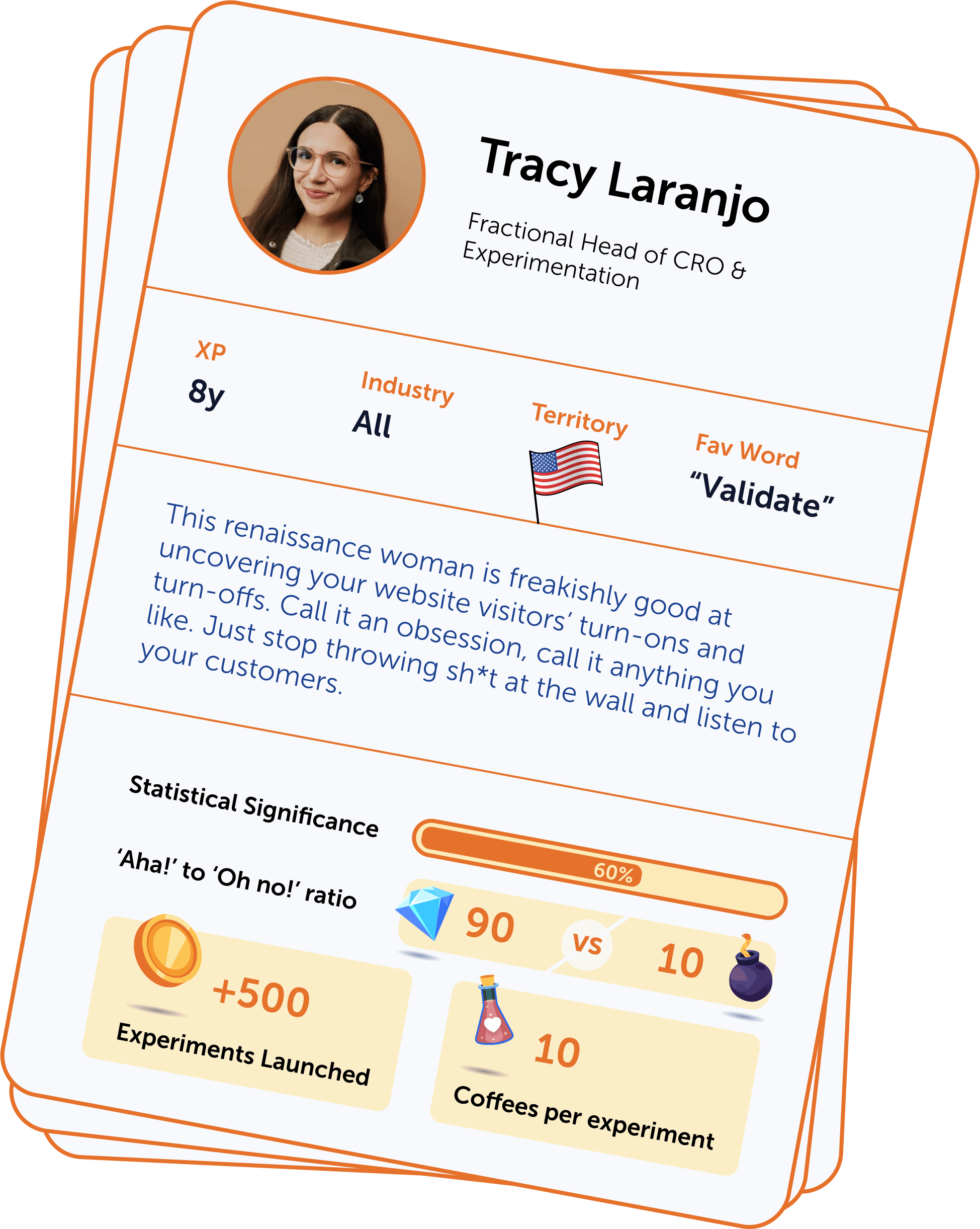

Tracy Laranjo 🍊 - Founder at Aftergrow, Fractional Head of CRO

What to Do Before Discarding the ‘Loser’

There was a time working for a client where we had learned that their visitors found the existing subscription opt-in flow daunting and unhelpful. To address this, we ran a test that redesigned the flow from a very long form into a multi-step, paced questionnaire with informative tooltips.

Upon first glance, the results were flat at best. After diving deeper into the results, we found a bug that affected Android devices only and therefore tanked that segment’s performance. All other devices saw a positive, conclusive impact on conversion rate.

It reminded me that instead of taking a poor result at face value, or sweeping the negatives under the rug, it’s important to ask tough questions like why are we seeing a poor result.

What I’ve Learned Optimizing a Sex Shop

One of the things I find most interesting about CRO is the psychology behind the average person’s unique buying decisions, and how getting to know your customer properly can lead to success. Nowhere have I seen this to be more evident than in the sexual wellness and pleasure niche.

Several weeks of rigorous customer research for a client in this space revealed some eye-opening information about how their site visitors liked to shop:

- Not knowing how loud a toy would be was the #1 conversion barrier. This was especially important for parents.

- Everybody experiences pleasure differently. For example, some visitors wanted high intensity toys while others preferred light stimulation.

Based on these insights, the first test we ran together was a ‘Shop Toys by Category’ section on their homepage, with category tiles covering solo vs. couples toys, vibration intensity, internal vs. external stimulation, and a special category for the quietest toys.

The result of thinking about the unique pleasure preferences and privacy concerns of the visitor, and helping them more easily find a toy that suited those preferences, was a revenue increase of over $20,000 per month. Aside from a clear monetary win, we now had learnings that could be applied to other site areas (e.g. navigation) and marketing channels (e.g. email).

Jörg Dennis Krüger – The Conversion Hacker

Trust > Aesthetics (Learned the Hard Way)

There was a prestigious fashion e-commerce client, and the experiment was about introducing a minimalist redesign of their product pages. Our vision was to enhance the user experience and boost conversions. And we were quite confident in it.

Except, initially, this radical approach seemed to be a misstep — conversion rates fell sharply, putting us in a challenging spot with our client.

But this became a turning point in my understanding of conversion optimization. We did a comprehensive analysis of user behavior and found something really important.

While the design was aesthetically pleasing, it lacked essential trust elements. Customers were engaged – but reluctant to complete purchases due to a perceived lack of trust in the new layout.

This taught me that the fundamentals of conversion optimization often trump subjective ideas of user experience and design. Trust, clarity, and reliability are paramount, sometimes outweighing purely aesthetic considerations.

By integrating strong trust signals like customer reviews, trust badges, and transparent return policies into the design, we not only recovered but surpassed our initial conversion rates.

The First Major Win is What Makes You Stay

The stage was set with a store specializing in personalized presents, and our audience: visitors from Google Shopping and organic Google search landing on a Product Detail Page (PDP).

Our hypothesis was simple yet ambitious: enhance product visibility to aid decision-making, thereby reducing bounce rates and elevating conversions. This led to a strategic shift: moving “recommended products” from the PDP’s bottom to the forefront. The outcome was nothing short of remarkable—a staggering 15% surge in sales, diminished bounce rates, and heightened user engagement.

This experience was transformative. It underscored the immense power of personalization—not just as a user experience enhancer but as a catalyst for significant business growth. It challenged traditional perceptions, proving that even minor modifications, when rooted in a profound understanding of user behavior, can yield extraordinary results.

This experiment redefined my professional trajectory, instilling a relentless pursuit of testing and optimization, particularly in personalization. It reinforced my commitment to data-driven strategies, continually adapting to empirical evidence. It urged me to delve deeper into the untapped potential of personalization in CRO, setting new benchmarks in the field.

Matt Scaysbrook - Director of Optimization at WeTeachCRO, Mouseflow Partner

From a 10k Win to a 250k Win

In the first year of my agency, I worked with one of the UK’s largest domain registrars. Their order process was full of upsells, cross-sells, add-ons & all the other possible AOV-boost-seeking options you could think of!

So my initial hypothesis was this:

If we remove all upsells from the order process, visitors will have fewer friction points, and that will increase overall sales conversions.

The first run of this test was reasonably positive. But it wasn’t until we started to do post-test segmentation that I realized the full potential of what we had.

By using post-test segmentation to fuel pre-test divisions, we began to create ever-more targeted experiences based on the results of the previous tests.

What was +£10k / quarter for them in potential revenue soon became +£250k / quarter once we started to create experiences for individual segments as opposed to a single experience for everyone. Although it took 5 iterations to get to that!

It taught me that within every winning experiment, there’s a subset of visitors that overperform the average & a subset that underperform. Armed with that knowledge (and high traffic volumes!), it is possible to turn virtually any “failure” into a win.

Segment post-test, use that to fuel the iteration’s pre-test segmentation, rinse & repeat with ever-deepening segments to get the right experience for the right visitor.
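As a rough illustration of the post-test segmentation step, the Python sketch below computes the conversion rate per segment and variant from raw visit records. The segment names ("new" and "returning"), the `make_visits` helper, and all counts are hypothetical; the original tests may have used entirely different segments and tooling.

```python
from collections import defaultdict

def conversion_by_segment(visits):
    """Return {segment: {variant: conversion_rate}} from visit records.

    Each record is a (segment, variant, converted) tuple.
    """
    # counts[segment][variant] holds [conversions, visitors]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for segment, variant, converted in visits:
        tally = counts[segment][variant]
        tally[0] += int(converted)
        tally[1] += 1
    return {
        segment: {variant: conv / total for variant, (conv, total) in variants.items()}
        for segment, variants in counts.items()
    }

def make_visits(segment, variant, visitors, conversions):
    """Build made-up visit records: the first `conversions` visitors convert."""
    return [(segment, variant, i < conversions) for i in range(visitors)]

# Hypothetical data: nearly flat overall, very different per segment.
visits = (
    make_visits("new", "control", 100, 10)
    + make_visits("new", "variant", 100, 16)
    + make_visits("returning", "control", 100, 20)
    + make_visits("returning", "variant", 100, 15)
)

rates = conversion_by_segment(visits)
for segment, by_variant in rates.items():
    lift = by_variant["variant"] / by_variant["control"] - 1
    print(f"{segment}: relative lift {lift:+.0%}")
```

In this invented example the variant helps one segment and hurts the other, which is the kind of split that can feed the next iteration's pre-test targeting. Segments with little traffic will produce noisy rates, so any per-segment finding is best treated as a hypothesis to retest.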

How I Nearly Drowned… in Data

It’s 2014, and I’m in my first-ever agency-side role, working with my first-ever client. And I want to impress them.

I set up a baseline test (no new experience, just detailed tracking) as a way of gathering data we couldn’t get from their analytics.

But in my youthful hubris, I create it sitewide, tracking about 100 metrics across every product category that they have.

And only when I sit down to analyze it do I realize the mistake that I’ve made. There is data everywhere. Tons of it. Kilotons of it. I’m in trouble.

In the end, I got the analysis done, and it gave us some really valuable insights for future work. But I very nearly killed myself doing it. Early starts & late finishes for the best part of 3 weeks – and by the time I sent it to them, I was cursing its very existence!

The lesson I learned over that period was a clear one – do not set up a test without understanding how you’re going to analyze the data at the end of it.

And 10 years on, it is a lesson I have never forgotten 🙂

Bryan Bredier – Expert CRO Maiit

Fired for Increasing CR by 60% (and Then Hired Again)

I recently had a very impatient client. They wanted to increase the conversion rate of their store but had a very short-term vision. They did not agree to leave time for tests to find the best combinations and wanted to change things after 24 hours. Sounds familiar, doesn’t it?

In the end, the collaboration quickly ended with the client revoking my access to the website. But they didn’t know how to restore the original website, so they left the modifications in place.

A week later, the client came back to us after noticing the changes had increased the conversion rate by around 60%. Turns out, our A/B test defined the best combination!

Guaranteed Hacks Work… Until They Don’t

Like most people at the beginning, I chased a lot of “hacks” that were “guaranteed” to improve the conversion rate of my first website. Well, they did!

With these tricks in my pocket, I was convinced that I could replicate it on any website – until I came across a site for which my tips did not work at all. I was puzzled. I dug deeper and realized that my approach was not good for a very basic reason.

Behind the screen, there are humans. And – surprise, surprise – different people do not react the same way. So, the approach in CRO (and in general in business 😅) must focus on understanding the human behind the screen more than on hacks.

Well, that was the moment when I understood the importance of putting the right tracking tools in place!😉

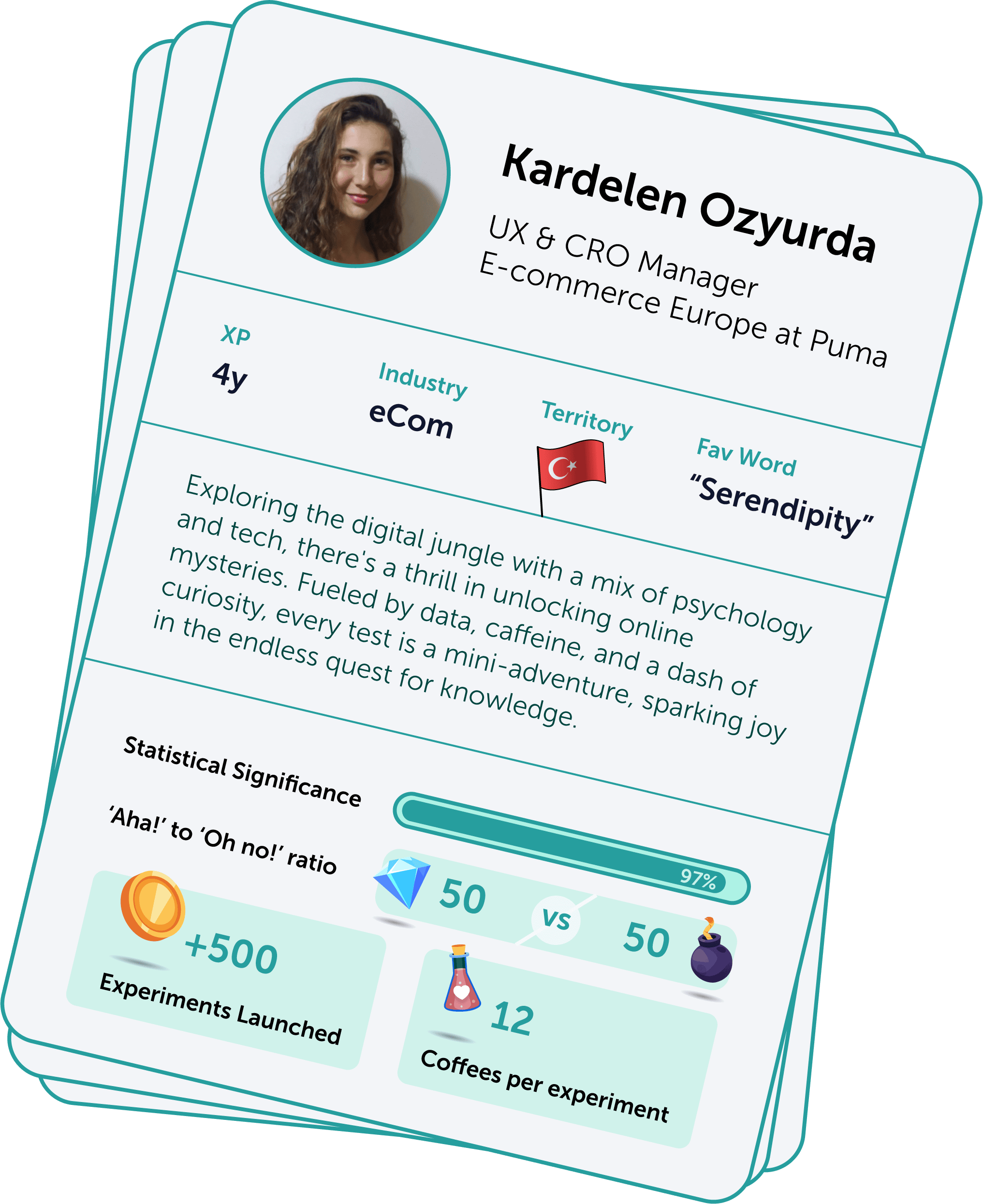

Kardelen Ozyurda - UX & CRO Manager E-commerce Europe at Puma

Turning a Checkout Frown Upside Down

We decided to spruce up our checkout process, condensing the steps. Well, as a result, our conversions took an 8% nosedive.

Turns out, users missed the reassuring final order check.

Quick fix: we introduced a refined order summary step – and voila! Not only did we recover, but we also scored a 5% boost compared to the original design.

Lesson learned: shoppers appreciate a transparent and reassuring checkout – a little psychology magic there.

Elevating Product Pages with a Dash of Flair

Back in my early days of optimizing product pages, we decided to add some flair. So, our product pages got 360-degree views and detailed specs.

Result? A commendable 12% rise in conversions and a 7% drop in bounces.

It was like giving our pages a makeover, and suddenly, they were the stars of the show.

This experiment, coupled with my psychology background, reshaped my approach. It highlighted the importance of engaging content that taps into user psychology, ensuring a winning combo of professionalism and conversions in every project.

Paul Randall – Sr. Experimentation Strategist at Speero

Don’t Peek and Challenge Your Beliefs

Here are the two notable things I’ve learned from running experiments over the course of my career.

Many years ago, I would be looking at tests on a daily basis, but I began learning that a desired sample size is needed before you can make any conclusions.

It’s often stakeholders who will peek and want to stop a test early, but I’ve learned that communicating the number of users per variant helps them resist the urge.

Some testing tools still send out messages way too soon though!

So, don’t peek; have patience.
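To give stakeholders a number of users per variant, one common approach is a standard sample size calculation for comparing two conversion rates. The Python sketch below uses the usual normal-approximation formula; the 3% baseline rate, 10% relative lift, 5% significance level, and 80% power are assumptions picked for illustration, not figures from any test described here.

```python
from math import ceil, sqrt
from statistics import NormalDist

def users_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed per variant for a two-sided two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

# Hypothetical inputs: 3% baseline conversion, hoping to detect a 10% relative lift.
needed = users_per_variant(baseline_rate=0.03, relative_lift=0.10)
print(f"Users needed per variant: {needed}")
```

Smaller expected lifts push the required sample up quickly, which is a useful thing to show anyone who wants to call a test after a couple of days.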

Another profound test also happened early in my career, as I transitioned from a UX practitioner.

We ran what seemed like quite a counter-intuitive experiment at the time, which was to move a lead generation form behind a preliminary step.

We did find that by not immediately showing the form, we were able to increase leads.

This pushed against many internally held beliefs about what a good landing page looks like, and it paved the way for even more experimentation.

Don’t be afraid to challenge your beliefs!

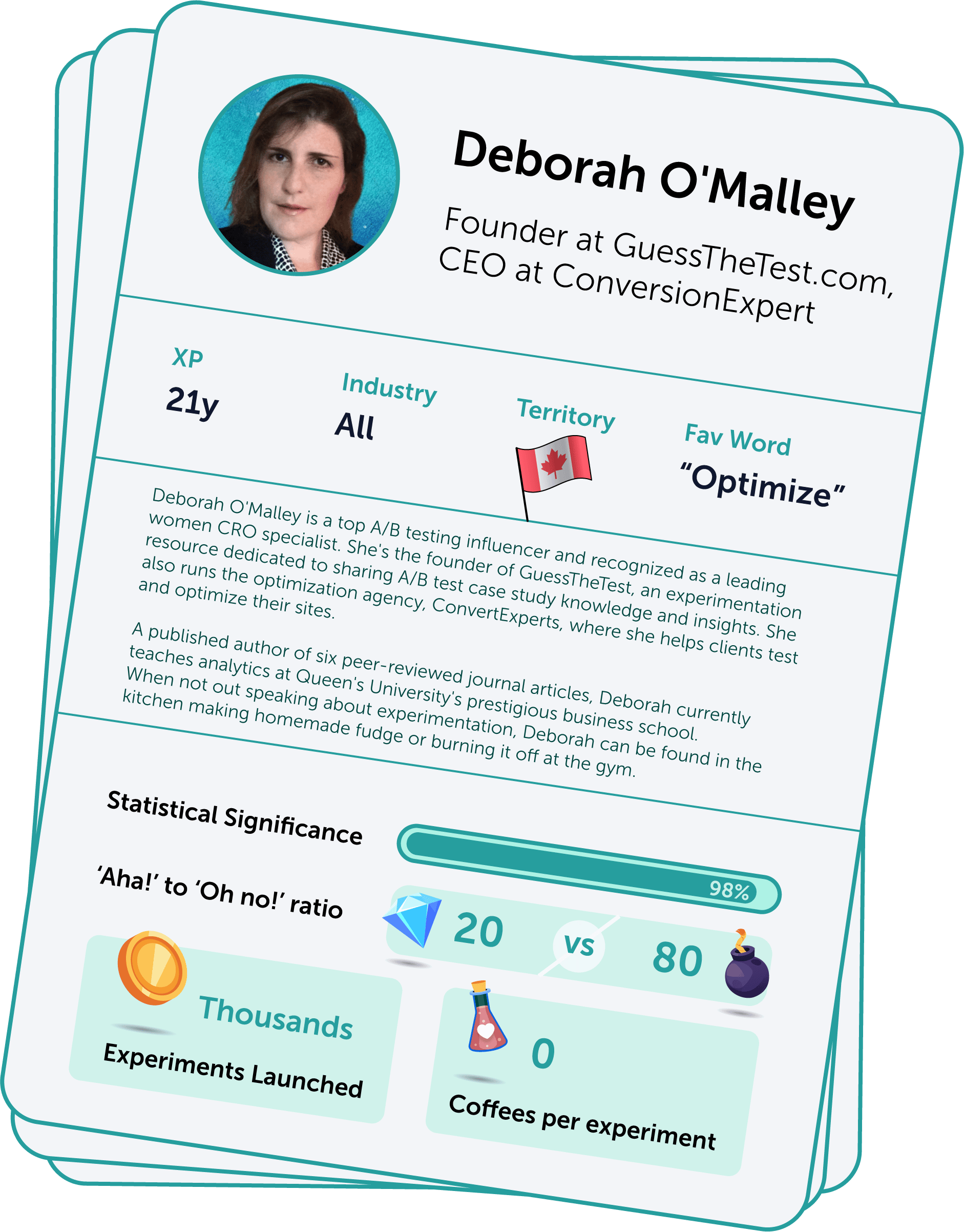

Deborah O’Malley - Founder at GuessTheTest

Turning The “Complete Website Redesign” Nightmare Into Success

A client approached me and asked to carry out a complete website redesign without testing any elements. I immediately knew failure was in the cards. Complete website redesigns can be very dangerous because you never know what’s going to work. Nonetheless, I wanted to help out the client, so I agreed to take on the job.

I did everything I could. I conducted in-depth qualitative and quantitative analysis. Using any available customer, UX, analytics, and heatmapping insights, I dug deep into the data.

From there, I came up with hypotheses, informed by my experience about what seemed to be working – and not working – on the current site. I suggested how to fix the current issues and created detailed wireframes of exactly which elements to change.

Working with a designer and developer, we turned the wireframes into high-fidelity designs that were immediately implemented on the updated site.

And then, it was the moment of truth: would the new designs convert better than the original? Or would they flop?

My worst nightmare was confirmed.

Sales and orders started to drop and continued to drop.

But, in assessing the result, I noticed something super important: the desktop conversion rate had improved! But mobile – which accounted for the majority of the site’s traffic – was lagging far behind.

Segmenting traffic by desktop and mobile, I dug through analytics and heatmapping data – and saw it. Many Call To Action (CTA) buttons appeared below-the-fold, meaning users had to scroll to see and click the buttons. And the scrollmaps told me they weren’t scrolling.

I immediately suggested that we needed to modify the CTA button sizing and placement so that all key CTAs appeared above-the-fold.

Within days after the changes had been implemented, the drop started to reverse and sales started to soar! Today, the site is performing better than ever and the client is thrilled.

From this experience, I learned two important things:

- You can’t just do a site redesign and sit back. Rather, you must immediately start monitoring results and be open to failing and tweaking the design again, and then again. Do that until you see the bottom line moving up.

- Small changes can make a big difference. Data doesn’t lie: this simple, small change – moving CTAs above the fold – turned the project from a failure to a big success.

Jon Kincade – Lead CRO Consultant

One Test to Topple the Whole Business Model

Early in my career – you know, when search engine result pages (SERPs) looked a lot different than they do now – I was working as a business unit manager for an eCommerce shop. This was before shopping carousels topped the page and, in hindsight, it foreshadowed the Alibabas and Temus of the world.

We managed to get good pricing and vendor relationships, optimized product detail page (PDP) content, and had all the right technical pieces in place. The last thing we had to experiment with was what we needed to do to win that #1 and #2 ad placement on the SERP.

We tried so many different angles and executions, but what it came down to was really simple: by then, the product had become a commodity for consumers and if you didn’t have the price in the ad (matching up to the lander), you didn’t “win”. And the kicker: that price was right around the landed cost (the total cost of the product once delivered, including shipping-related costs). No bueno.

When we zoomed out, we realized that the business’s long-standing, tried-and-true drop-ship model would not work for this product because of the way that the supply chain had changed. Short of us having boots on the ground in Shenzhen, there was simply no way to compete.

Had that business not had an experimental mindset and a humble approach to validating-before-scaling, it could have been a long and costly mistake. Instead, we were able to get invaluable learnings that not only avoided immediate losses but improved our ability to grow and scale other verticals profitably and more quickly in the future.

Now, many years later, I have built a custom GPT that runs in the background of meetings, takes declarative statements, and outputs the explicit as well as implicit assumptions that underwrite those statements. It’s not about challenging people’s ideas. It’s more about doing super early, high-level stress and assumptions testing to make sure there’s a solid and shared understanding of what may be hidden below the surface before moving on to hypotheses and solutioning discussions.

Shiva Manjunath - Host of 'From A to B' podcast

Test Your “Best Practices”. You’d Be Surprised

I was working for a B2B company that – of course – had a lead generation form. At every step/field in the form, we saw marginal drop-offs.

You know the best practice – make your forms shorter! We wanted to validate this hypothesis, so we cut ALL fields/steps we possibly could (capturing only the essential information our sales team needed).

Lo and behold, we saw a 10% drop in conversion rate by DECREASING the number of fields in our forms. Didn’t expect that!

What did we learn? Turns out, the additional steps helped with building trust for leads. Multiple steps were a conversion driver, not a conversion killer.

So, what did we do? We added another step to the form to try and build trust, as a way to improve conversion rates. I don’t remember the exact conversion rate lift, but it was above 20%. And the quality of leads increased too.

Lesson learned: don’t think you can blindly follow these arbitrary “best practices” which lack context. Test them. You’d be surprised.

From Spaghetti Testing to Strategic Testing

Early in my CRO career, I was spaghetti testing: trying to test whatever I could, seeing what happened, and moving on.

It wasn’t a bad thing – it sparked my curiosity for experimentation, and I wouldn’t change that experience. But I hit a lot of losers and just a few winners – and even those few were only by pure luck.

The biggest revelation for me was not test-related, but process-related. Seeing heatmaps and session recordings for the first time was like a brain explosion moment for me.

Analytics data is great – fantastic, even. But being able to link heatmap data with this quantitative data was the key in taking me from “good” hypotheses to “fantastic” hypotheses.

Then, somehow, I stumbled upon chat logs and helpdesk tickets while browsing through our toolset, and that was another revelation. It made me aggressively prioritize data capture and analysis from as many sources of data as possible. My test win rate went up significantly after I bugged folks to get access to all the sources of data we had available.

And the great part? Most of that was free data – it just required time to analyze. Now, I don’t run any tests unless they’re backed by some form of data. But that was a huge mindset shift for me – from spaghetti testing to strategic testing by means of corroboration of data sources into strong hypotheses.

Brian Massey – Conversion Scientist™ at Conversion Sciences

CRO Stands for Continuous Redesign Of-the-website

We had been hired to work with a design team on a website redesign. Most website redesigns are what I call “all in” designs: the web development team spends months making thousands of decisions with the input of the executive team. No one knows how they’ve done until the new design launches. And there’s usually no going back if conversions drop.

We changed this process.

We redesigned the site one page at a time, testing our designs along the way. This meant that some pages had a new design while others had the old design. How many designers cringed at that point? I understand, but we were able to test away bad design decisions and keep good ones.

The redesign process had been expected to take more than six months. However, conversion rates had shot up starting in the second month and continued to climb each month as we worked our way through the site.

This is when I realized an important advantage of data-driven design: You get results much sooner, and the results are guaranteed. Today, we offer data-driven redesign services using experiments to back up our design decisions.

CRO is continuous website redesign.

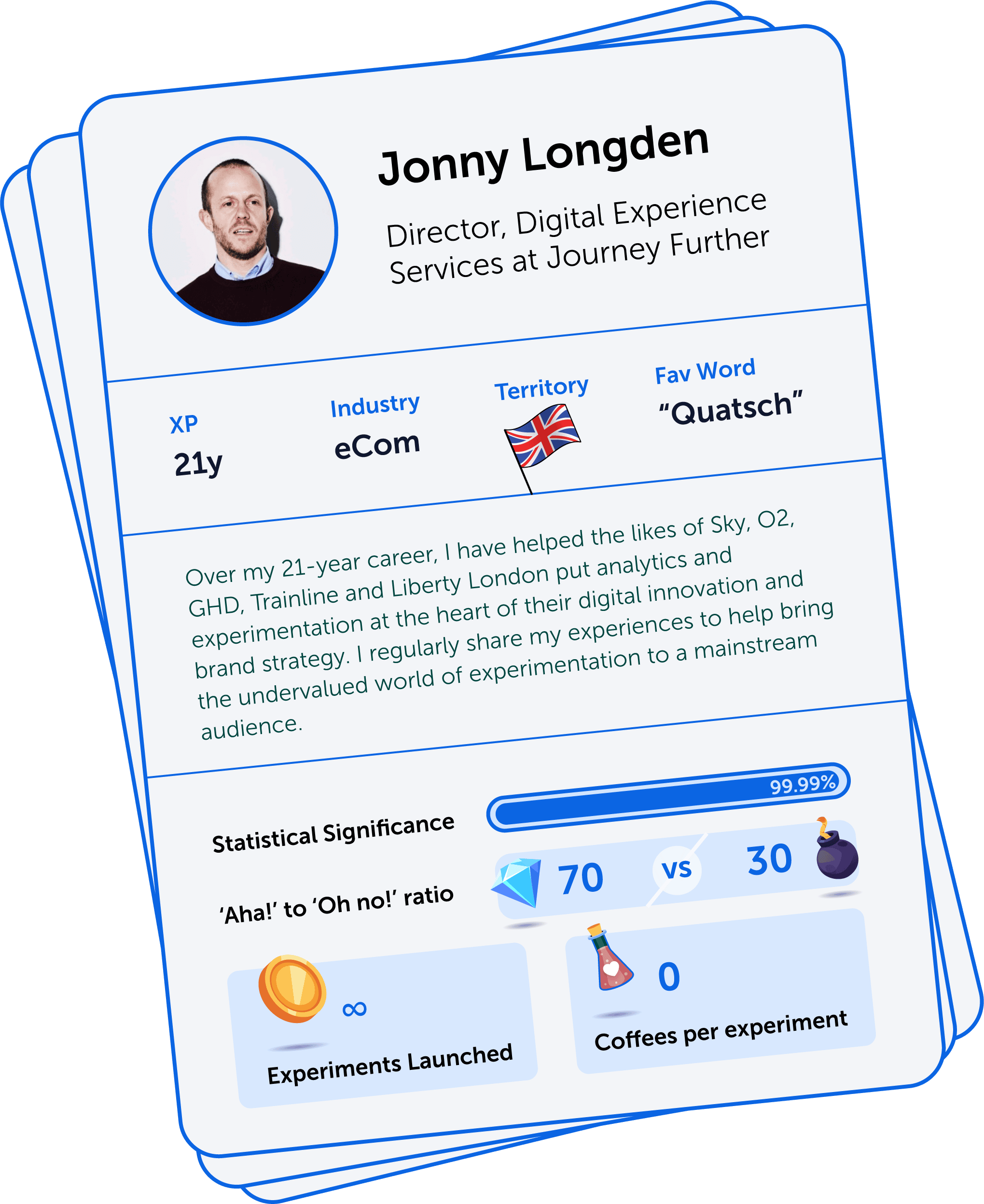

Jonny Longden - Director of Digital Experience at Journey Further

There is really no such thing as ‘failure’

There is really no such thing as ‘failure’ in experimentation because you have always learned something, and there is always something you can do with that information.

Recently, for a retail client, we tested the addition of urgency messaging on their product pages. This was the typical stuff you see on e-commerce sites, such as ‘hurry, only 2 left in stock’. This resulted in a massive 11% decrease in conversion rate for the product pages on which it was shown.

Researching the results of the test, we decided that the older demographic for this product would likely feel overly pressured by these tactics.

So, we looked for other signals around the site that could be construed as pushy and tested removing them. Two of these tests yielded very positive results.

One was taking down a countdown timer in the cart to show how much time the user had to purchase. This had been coded when the site was built, but the cart never actually timed out anyway.

The other was getting rid of further stock messaging ‘badges’ on the product listing pages.

That gave us ideas for some further experiments.

So, how was that initial test in any way a failure when we learned something incredibly valuable about the audience for this site?

Experimentation Before Google Optimize’s Release

The first experiment I ever ran defined the next 15 years of my career.

It would have been sometime around 2007, and I don’t even remember what it was or even which client it was for. However, it was nevertheless a huge pivotal moment for me.

Prior to that time, I had been a consultant in offline data analytics – segmentation, propensity modeling, geo-spatial analytics, etc. It was the kind of stuff that today gets called ‘data science,’ although nobody called it that back then.

As part of that, I had also been heavily involved in direct mail experimentation and campaign measurement. This is basically the same thing as A/B testing but performed on outbound direct mail campaigns. A test mailing would be sent vs. a control and measured statistically in much the same way.

For some time, I had been uneasy with the fact that I was not involved in the digital world, and so made the decision to work in a digital agency.

Digital analytics was fascinating, but I was initially frustrated by the lack of actual feedback from changes that were made. It seemed to me that analytics just meant informing an opinion with some pre-canned reports from GA.

Then, along came Google Website Optimizer (a predecessor of Google Optimize). To me, this was the perfect synthesis of my former career and my future direction in the digital world.

The rest, as they say, is history.

Nick Phipps – Head of CRO at idhl

Tried Adding Products, Scrapped the Whole Merchandising System as a Result

During some research for an eCommerce client, we identified a large drop-off rate between category pages and product detail pages.

I dug a little deeper using session recordings and heatmaps to discover that users were only scrolling through the top few product cards on a listing. I thought that poor card design and a single-column layout were to blame.

So, we ran a few tests to try and show more products to each user. But all we got were inconclusive results, even on secondary metrics like click-through rates on the products.

This led to deeper research that helped us discover that the merchandising system (including the filters) was not fit for purpose. Users were struggling to find the products they were looking for, wandering around the website without any support.

Eventually, the system was replaced. The thing that stuck with me was to always keep iterating and always dig deeper during analysis. Go beyond the numbers.

Ensure you connect your testing tools to GA4 and behavior analytics tools like Mouseflow to gain a deeper level of detail that the numbers can’t give you.

What Happens When a Young Digital Marketer Decides to Try A/B Testing

I won’t surprise you if I say running my first A/B test changed me for life. I can’t remember what it was or what the result was, but the path that it sent me down changed my career.

I didn’t really know anything about CRO or experimentation at that time. I was working in digital marketing, trying to find ways to improve some campaign landing pages. At some point, I’d read about A/B testing as a method to measure the impact of changes on a website, so I thought I’d give it a go.

I designed my experiment and used the visual editor (a harrowing experience) to implement the changes. I set some goals and I launched the test. It didn’t work… After lots of googling and banging my head against a wall, I managed to get it working and I remember thinking that I’d basically just become a developer!

That experience led me down the path to where I am today. I cringe at the thought of those early moments playing around in Optimize with no real strategy or understanding of CRO as a methodology, but it was the first step I needed to encourage me to learn all I could. It propelled my career into one of the most rewarding disciplines within digital (even though there may be a hint of biased opinion here).

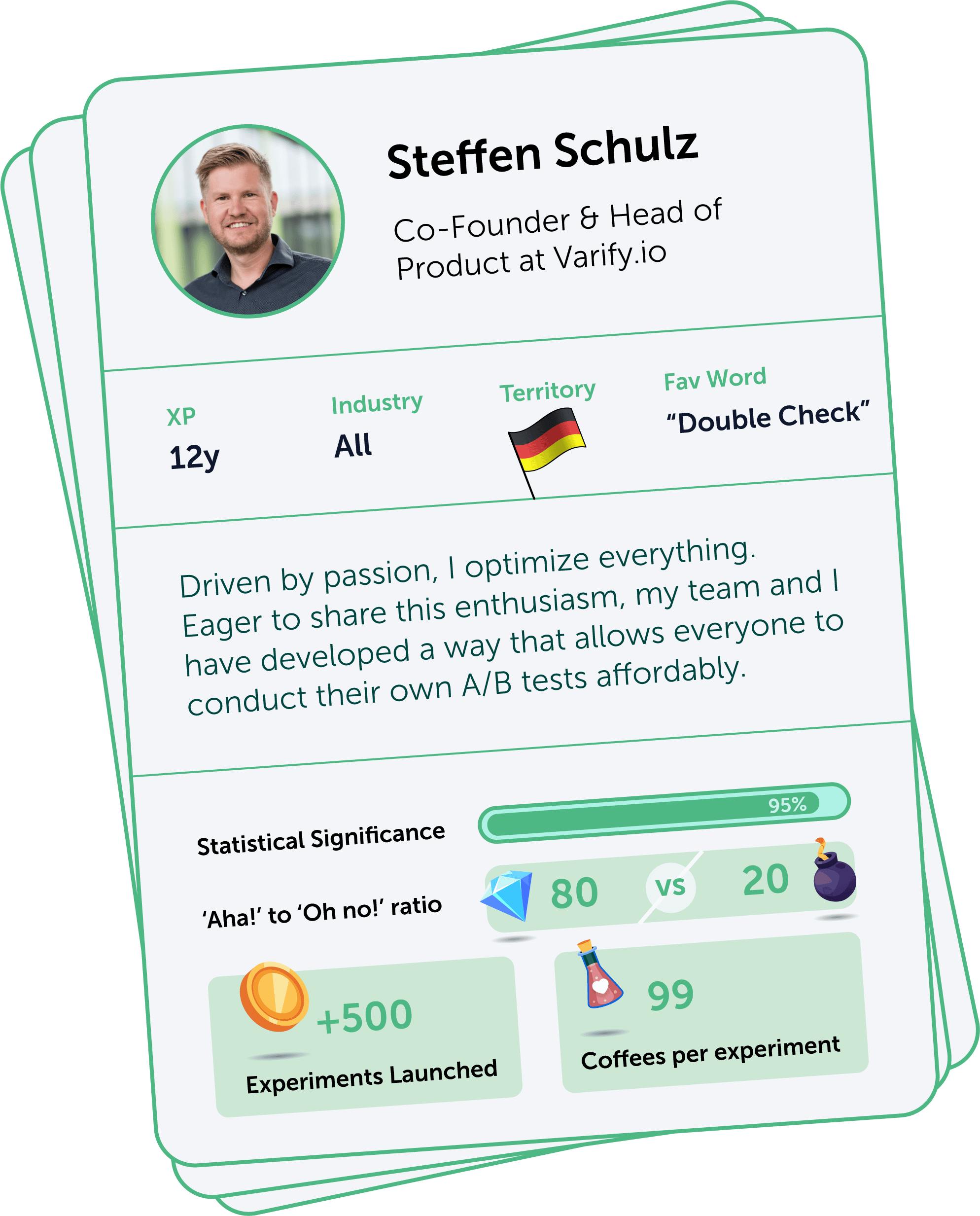

Steffen Schulz - Head of Product at Varify.io

The Power of Perseverance in CRO

At some point, we were running an experiment to improve the checkout process using a progress bar. It was supposed to motivate customers through a psychological ‘cheering’ effect – like “hey, you’ve almost completed the checkout”. We hoped that it would boost conversion rates.

Early results showed a decrease in the overall performance, and the client considered halting the experiment. What does one do in that case? One dives into data.

Segmented data revealed an interesting pattern: the progress bar was effective on mobile devices but caused a significant drop in desktop conversions. We convinced the client to extend the experiment for two more days while we refined our approach, particularly for desktop users.

Well, mobile performance improved some more, and desktop results began to stabilize. By the end of the experiment, we had not only overcome the initial downlift but also achieved a notable 4.3% uplift in conversions at a 99.1% confidence level.

This outcome demonstrates the importance of perseverance and data-driven decision-making in digital marketing experiments. Dive deep to understand user behavior differences across devices – and do not discontinue tests prematurely.
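For readers wondering where a confidence figure like this can come from, below is a minimal Python sketch of a two-sided two-proportion z-test. It is only one of several ways testing tools compute "confidence" (some use one-sided or Bayesian methods), and the visitor and conversion counts are invented; they are not the data behind the result above.

```python
from math import sqrt
from statistics import NormalDist

def ab_confidence(control_visitors, control_conversions,
                  variant_visitors, variant_conversions):
    """Relative uplift and two-sided confidence from a pooled two-proportion z-test."""
    p_c = control_conversions / control_visitors
    p_v = variant_conversions / variant_visitors
    pooled = (control_conversions + variant_conversions) / (
        control_visitors + variant_visitors
    )
    std_error = sqrt(
        pooled * (1 - pooled) * (1 / control_visitors + 1 / variant_visitors)
    )
    z = (p_v - p_c) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {"relative_uplift": p_v / p_c - 1, "confidence": 1 - p_value}

# Hypothetical counts chosen to give a 4.3% relative uplift.
result = ab_confidence(
    control_visitors=100_000, control_conversions=5_000,
    variant_visitors=100_000, variant_conversions=5_215,
)
print(f"Uplift: {result['relative_uplift']:.1%}, confidence: {result['confidence']:.1%}")
```

The same uplift can look conclusive or inconclusive depending on traffic, which is one more reason not to stop a test before it reaches its planned sample size.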

How Modifying the “About Us” Page Lifted Conversions by 23%

Our task was to enhance a website for a company selling motor engine chips. We optimized product detail pages and the checkout process, but to very little success.

So, we decided to analyze the target group a little deeper. And we came up with a hypothesis that potential customers were hesitant to purchase because they were afraid of damaging the engine. And the company didn’t offer any warranty.

We discovered that the ‘About Us’ page received high traffic, and for us, that meant that users were looking to see if the company was trustworthy and credible.

So, we revamped the ‘About Us’ page so that it radiated trust. We highlighted the company’s extensive experience in the field and tried to address the reasons behind the absence of a warranty with transparency. This approach humanized the brand, showcased the knowledgeable people behind the products, and their commitment to quality.

We ourselves didn’t expect the results we saw – a conversion rate uplift of over 23% across the entire shop.

This experiment changed my approach to CRO. Previously, I focused on optimizing specific elements like product pages or checkout flows. But this experiment taught me that sometimes the key to conversion lies in understanding and alleviating customer fears, sometimes in areas of a website seemingly unrelated to conversions at all. I still stand by this approach.

Jesse Reynolds – CRO at ExtraHop

Competitors Don’t Always Know Best

For many SaaS companies, “free demo” is the main call-to-action and the accompanying form is the most important one. We identified that our form had a below-average completion rate.

At the time, the form was a small overlay over our live demo experience. The form had five fields and presented only the bare essential info (form field titles, privacy links, etc.).

We opted to test a new page instead of the overlay and created a form that’s more in line with our competitors. We hypothesized this would meet user expectations and, by adding value props, it would ease their decision-making, increasing form completion rate.

The test utterly failed. We measured a 17% decrease in form completions and the number of qualified leads dropped 22%. Testing what our competitors were doing did not work for us.

Regrouping on the failed test, we examined what we knew about our users, specifically those interested in our demo. We realized we were approaching them the wrong way.

They weren’t the decision makers; they were the people who would actually use the tool. And they were likely checking it out to report back to their leadership. We needed to create a positive experience for those users.

Setting up a new test, we focused on the benefits of the free demo in a “quid pro quo” approach using direct, “plain speak” (give us your email, and we give you access to the tool, plus 5 scenarios you can go through, etc.).

The test was a success, increasing form completion rate by 24% and qualified leads by 20%, even though it decreased total form submissions. We identified that we had failed to create clear journeys for our different users/personas and needed to add more clarity in our content about what the user could expect. The form now helps users understand what to expect and the results led to the decision to totally redevelop the website.

You Can Drop the “CR” in “CRO”

While I was working for an agency, one of our clients was a well known hotel brand. They had various tech limitations but were trying their best to incorporate A/B testing and optimization.

The team spent a good deal of time doing competitive research, looking deep into the data, thinking through design heuristics and best practices to come up with a super comprehensive deck of test ideas with thorough evidence and organized into one of the best presentations I have ever had the pleasure to work on.

And of course, the majority of the ideas were shot down, but one made it due to how simple it was. We suggested that, while users are searching for hotel rooms, the rooms should open in new tabs when clicked on.

Such a simple idea that was overlooked by the client in search of a “perfect” solution led to an increase of 12 million dollars in MRR.

It was a strong reminder that the user experience is the alpha and omega. Despite pressures to make the numbers go “up and to the right,” we have to focus on making the users’ experience better.

That’s why I like to avoid the term CRO and focus on just the “O”, Optimization. Maybe instead of CRO, we can coin Customer Experience Optimization to muddle things even more 😉

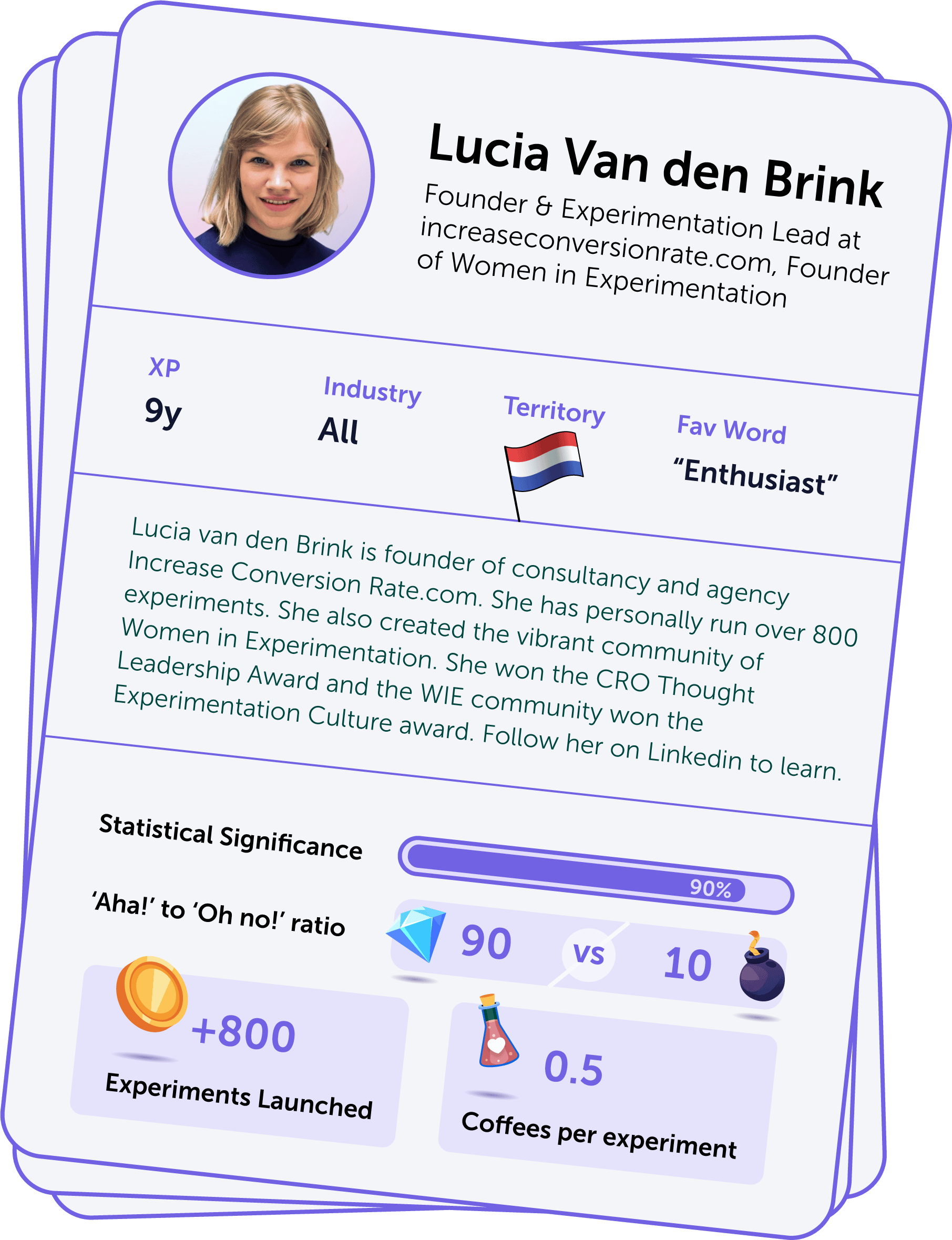

Lucia van den Brink - Founder at Increase-conversion-rate.com

CTR Goes Up, Conversions Go ‘Ouch’

Imagine a product line-up of three products. They can be services, phone subscriptions, insurance policies, but whatever they are: three next to each other.

We thought: if we remove a lot of information from the page, especially about the price, then the user will have less cognitive load and fear and we’ll measure this in click through rate.

It wasn’t based on our imagination – we had some research on the fact that people found the product line-up overwhelming.

We launched the experiment – and it turns out, our A/B test increased CTR significantly.

YAY!

BUT…

People were just clicking through because they were missing info. And they all dropped in the next step.

Conversion went significantly down, because the information about the price point was missing.

Lesson learned: leaving out information can hurt conversions. Information should be there for the users at the right time and place.

I Ran 800+ Tests. Here’s What I’ve Learned

You can learn a lot from one experiment. I’ve run more than 800 experiments. And what I’ve learned is that after a while it’s not about wins and losses anymore.

I’ve seen experimentation changing organizational cultures. Going from managers saying: “I know what works and this is how we’re going to do it” to being data-driven and looking for the best solutions together with a team.

That does something to hierarchy in organizations. It gives a call center guy or an intern space to voice an idea that can be researched, tested, and might just win. It also leaves space for teams and individuals to solve their own problems and come up with potential solutions.

Having your work life and to-do list defined for you by somebody else can be a huge strain on your capabilities. I believe that abandoning that and embracing the experimentation culture changes organizations and the lives of the people in those organizations for the better.

No more: “This is how we do it because I believe it should be like that.”

But more of: “Let’s collect information, try this approach, and see if it works.”

Ubaldo Hervás – Head of CRO at LIN3S

The Novelty Effect

I worked as a data analytics & CRO consultant at a US-based SaaS startup. My job there was to identify opportunities in the retention phase and to apply online controlled experiments to improve the core metrics.

Here’s one of those experiments. We found a negative correlation between the average time on specific features and the Churn Rate: The more time the users spent on particular product features, the fewer users uninstalled our application.

The discovery phase suggested that those features were probably the most important ones in our product when it came to managing churn. The hypothesis was that the churn rate would decrease if users used these features more.

We ran an A/B test, but the control and variation performed similarly during the first fourteen days. We thought it was a failure or an inconclusive experiment. However, during the third week, things changed.

We didn’t use churn rate as a primary metric, but focused on some engagement-related metrics. The variant started to perform much better, and the reason behind this was an effect called the “novelty effect.”

The “novelty effect” refers to the phenomenon where a change or something new on a website (such as a new design, feature, or product) initially attracts more (or less) attention and engagement from users, leading to a temporary increase/decrease in metrics.

The key learning here is that no universal standards exist for Conversion Rate Optimization or online controlled experiments. Most of the time, you would pause a campaign like that, and in this specific case you shouldn’t. You will try your best to improve core metrics, but nobody can know how a variant will behave over time.

When you realize how natural the “novelty effect” is, you become more humble.

Research Cannot Be Optional

The success of a CRO initiative is 90% dependent on the quality of research. I learned that lesson when I started in this wild world of online experimentation and tried to base my experiments on best practices. After all, that’s what most people related to this area posted at that time: good practices, checklists, etc.

The results were equal to zero.

Then, I realized that you need to find meaningful pain points – and opportunities to offer meaningful solutions. Sometimes, finding good problems is more important than finding good solutions. From a CRO perspective, you need to research and prioritize those problems or opportunities and start working on them through experimentation (or not – experimentation is a means to an end).

There was no single experiment that taught me that lesson. Several experiments with no business context, no key metrics detected, no stakeholder alignment, and no pain points detected were the best way to learn that throwing spaghetti at the wall to see what sticks is the best way to spend money, energy, and technical resources with no return.

Since then, research has not been optional in my CRO projects.

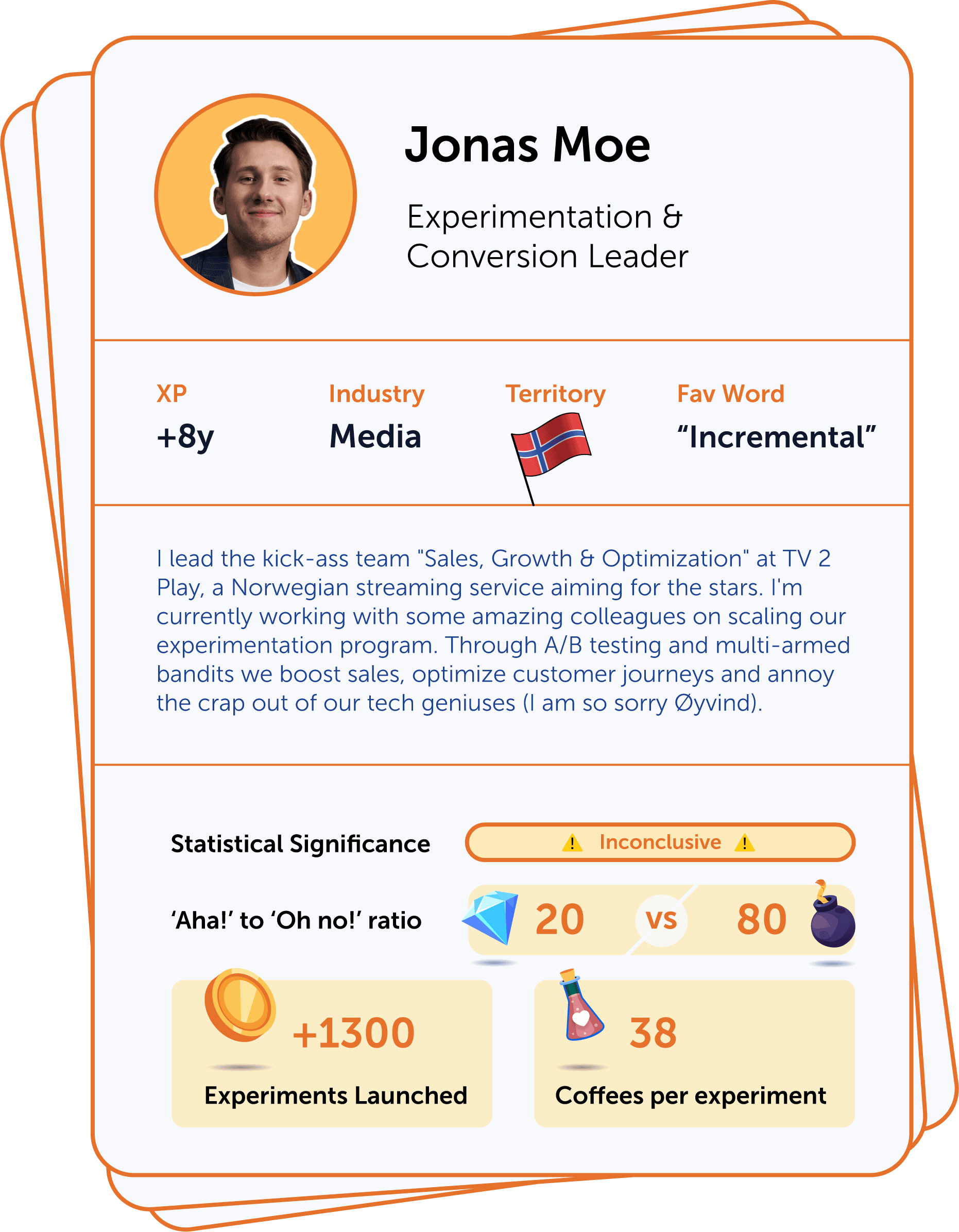

Jonas Moe - Experimentation & Conversion Leader at TV2

When You Have To Revert Changes, But It’s a Win Nonetheless

TV 2 Play is a Norwegian streaming service competing with Netflix and HBO. At one point, we had 6-7 different subscription plans. We wanted to explore how a complete overhaul of our purchase flow would impact the distribution of conversions across the different subscription plans.

We went from a horizontal overview where you could easily scroll past different plans to compare, to a version where you had to click each plan to read more about it before making a choice. A potential conversion killer, but we wanted to explore how it impacted our metrics.

For this new version, we had to choose a default subscription plan for users landing on the page. Should we set the cheapest or the most premium plan as default? We ended up compromising and choosing a mid-tier plan, letting the users move up or down from there based on their needs.

The experiment had significantly lower conversion rates. If we had implemented the changes without testing, we would have lost a ton of subscribers. But when we looked at the distribution of conversions, we realized we were selling a lot more of the mid-tier plan we set as default. It was the only one that saw an uplift.

Now, of course, anchoring is an effect that we’re familiar with, but we used these learnings when we reverted to our original purchase flow. We flipped the order of the subscription plans so that it was sorted from the most expensive to the cheapest one. We maintained our conversion rate, but drastically shifted the distribution of conversions.

We saw a 23% uplift in the premium plans and a 23% drop in the mid-tier plans just by flipping the order. We run our tests with hundreds of thousands of users, so to see such a huge effect was unexpected.

In qualitative research conducted afterwards, we learned that when users are presented with the most premium subscription plan first, they feel like they lose access to something the further they scroll towards the cheaper options. Whereas in a reversed order (from cheapest to premium), users felt everything was just becoming more and more expensive.

The psychology of “losing something” vs “paying more for something” is a powerful tool in experimentation design, and even though the original experiment initially looked like a complete failure, we’ve experienced massive uplifts based on the learnings from it.

In our experimentation program, we rarely refer to experiments as “Losers”, as we usually learn something useful every time we run an experiment that underperforms.

How One Word Doubled the CTR

We ran an experiment where we offered a price discount to existing customers on their subscription plan if they committed to 6 months. The popup was really simple but this experiment truly showed the importance of copy and psychology. Here’s what we tested:

- Version A: “Get a better price on your current subscription – click here”

- Version B: “Get a lower price on your current subscription – click here”

We did not have high hopes for this experiment as we simply changed one word. But the B version actually had a 97% uplift in CTR to the campaign offer. We went from a click rate of 19.8% to 39.1% by changing one word. The experiment was shown to 267k users, so to see such a drastic effect from one small word was quite interesting.
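The uplift quoted above can be checked with a few lines of arithmetic. The sketch below computes the relative uplift from the two click rates in the text and runs a standard two-proportion z-test. The even 50/50 split of the 267k users is an assumption made for illustration, since the source does not say how traffic was divided, and the function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def relative_uplift(rate_a, rate_b):
    """Relative change of rate_b over rate_a, e.g. 0.5 means +50%."""
    return (rate_b - rate_a) / rate_a

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in proportions; returns (z, p_value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Click rates from the text.
rate_a, rate_b = 0.198, 0.391
uplift = relative_uplift(rate_a, rate_b)
print(f"Relative uplift: {uplift:.1%}")

# ASSUMPTION: the 267k users were split evenly between versions A and B.
n_a = n_b = 267_000 // 2
clicks_a = round(rate_a * n_a)
clicks_b = round(rate_b * n_b)
z, p_value = two_proportion_z_test(clicks_a, n_a, clicks_b, n_b)
print(f"z = {z:.1f}, p = {p_value:.3g}")
```

With samples this large, a gap of nearly 20 percentage points is far outside what random noise would produce, which fits the description of the effect as drastic.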

In post-test research, we discovered that “lower price” is perceived as more absolute, and what is considered a “better price” may be more subjective. This could be a part of the explanation of why version B had such a drastic uplift.

I love this experiment because to date I’ve yet to see a better example of how powerful words can be in terms of decision making psychology. I think the importance of copy is often overlooked in experimentation design. A lot of CROs, UXers, and marketers tend to gravitate more towards flashy visual changes and design elements rather than good copy.

The psychology of words is not to be underestimated. This is why we frequently run messaging & copy tests to dig deeper into the users’ perception and associations that different words may carry. We can use the learnings to our advantage in experiments, ads, and landing pages.

Juanma Varo – Head of Growth at Product Hackers

Experimenting on LinkedIn

In my opinion, a true CRO expert is one who deeply values and fully embraces the process, viewing it as their most potent asset. But one of the most transformative experiments I conducted was driven by sheer necessity, and there was no A/B testing involved.

My objective was to leverage LinkedIn as an efficient acquisition channel, aiming for low-cost conversions and sufficient volume for scalability. Despite experimenting with various ad copies, targeting different roles, companies, and industries, success was elusive. I was two months behind on my goals.

Then, I had a breakthrough. I set up a page that featured awards for various teams within an organization, including product managers, growth managers, designers, engineers, etc. I invited LinkedIn users to vote for their teams.

That led to an extraordinary engagement rate – more than tenfold compared to previous efforts. This initiative not only boosted engagement with our content but also significantly increased submissions to our programs.

Within weeks, I achieved the year’s submission goals, freeing me to explore new, innovative strategies to further enhance our results, all the while alleviating the stress and pressure I had been under.

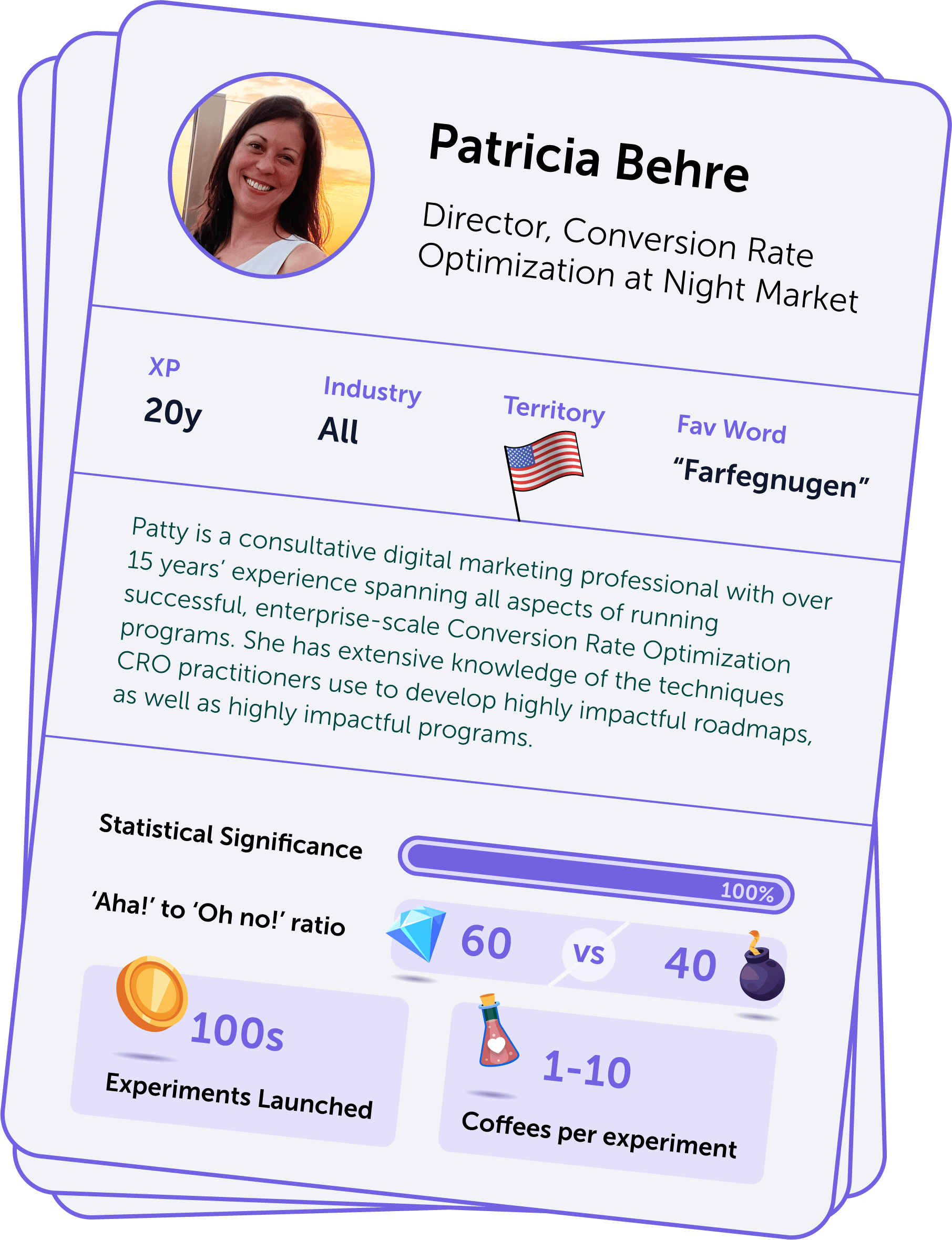

Patricia Behre - Director of CRO at NightMarket

Formally a Loss, Culturally a Huge Win

Once upon a time, our UX team had developed a brand new product page template. Previously, they never invited testing into the pre-release process. This time, they did.

And the proposed new design didn’t fare well in a head-to-head comparison. It was a hard pill to swallow for all the many teams that had invested numerous planning and design cycles into developing the new template.

But at the end of the day, these teams were able to come together under the banner of having saved their site from the fairly serious performance hit it would have taken had they pushed the new design to market without testing. Since then, testing has been a mandatory part of the process for validating new layout & design bets.

And the thirst to understand the ‘why’ underpinning this outcome drove new behaviors from within the design branch. Not only was there a request for a web analytics deep dive to understand why the proposed design didn’t outperform its predecessor (and ergo what they needed to fix in next design iterations), the requests kept coming even after that. Designers wanted to see web analytics data before designing new navigation paths, on-page engagement data before revamping a page, and so on.

So, formally, this particular test wasn’t a winner. But it sparked a pivot to more data-driven design practices which ultimately led to a great many successes downstream.

What it Takes to Succeed as a CRO Executive

There’s a direct correlation between the interest in your projects and the array of potentially impacted stakeholders. At some point, it changes your job completely.

I recall a particular project that would have significant sales and sales operations implications – the test outcome would impact lead routing flows. By then, I had already understood experimentation to be a uniquely cross-functional exercise. It requires a fairly broad competency framework – some understanding of UX design principles, some technical competency, and proficiency in analysis (and a specific understanding of some statistical analysis).

But going through this project with that many stakeholders who were really interested in the outcome, I realized I needed so much more than just that. The amount of work related to stakeholder management, project management, and change management was enormous.

Turns out, as your work matures and becomes increasingly strategic, the skills required to realize successful projects expand. They tilt somewhat to managing the information flow and decision making surrounding the project to ensure consensus and smooth forward progression.

It takes a lot to succeed in this space – which is what makes the job so challenging and so rewarding.

Martin Greif – President at SiteTuners

The Ugly Face of Trust

I ran an A/B test for a VPN provider years ago that helped me understand the delicate balance between design aesthetics and the perception of trust and security.

We were testing a payment form. The control version had an outdated and overloaded interface provided by a payment processor. The variation was a custom-designed, visually appealing payment page that matched the website’s overall, much more modern design.

Contrary to expectations, the control version outperformed the beautiful custom design by a staggering three-to-one margin. This outcome was measured across key metrics: conversion rate, sign-ups, and revenue. Apparently, when it comes to payment interfaces, the design appeal mattered much less than trustworthiness – in this case, the fact that the interface was unmistakably linked to a recognized payment processor.

Customers were reassured by the familiar, albeit dated, appearance of the control version, interpreting it as a sign of legitimacy and protection for their credit card information.

Armed with these findings, we then ran experiments across the website. We placed trust elements such as privacy seals, reviews, and ratings near every call to action, bolstering the site’s credibility.

Of course, we kept the original payment page, but we also made enhancements to all pages leading to the payment processor, ensuring they communicated trust and security more effectively. And we made sure to include user reviews wherever possible to build trust even further.

This test taught me once and for all that every design should accommodate the users’ psychological need for security. I returned to it many times when working on subsequent projects, remembering the importance of trust signals.

There’s an ongoing and evolving challenge in digital marketing and design: balancing the aesthetic appeal with the need for trust and security. And in some instances, the ugly is the winner.

Philipp Becker - Senior CRO Marketing Manager DACH at HubSpot

We Have Problems – Let’s Turn Them Into an ACV Uplift

That happened when I was still working in the B2C sector, for an online store in the Home & Living division. During the Covid pandemic, there were frequent delivery problems.

That’s why we added the following message to the shopping cart to communicate this transparently to customers.

“DELIVERY INFORMATION: Due to the high capacity utilization of warehouses and forwarding agents, there are delays in delivery. Thank you for your understanding!”

Everyone on our eCommerce team agreed that this message would clearly have a negative impact on our KPIs such as transactions and the average cart value.

After the first few days, it looked as if our hypothesis would be confirmed, but then the tide turned. Once we reached the sample size required for our minimum detectable effect (MDE), we had a statistically valid result: the message had a positive impact.
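For readers unfamiliar with how sample size and MDE relate, here is a minimal sketch using the common normal-approximation formula for comparing two conversion rates. The 3% baseline, the 10% relative MDE, and the default significance level and power are assumptions chosen for illustration. They are not the values used in this test, and the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided test on two proportions."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# ASSUMPTION: 3% baseline conversion rate, 10% relative MDE (illustrative values only).
n = sample_size_per_variant(baseline_rate=0.03, relative_mde=0.10)
print(f"Visitors needed per variant: {n}")
```

The smaller the effect you want to be able to detect, the more traffic the test needs, which is why early readings taken before the planned sample size is reached can point in the wrong direction.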

In retrospect, we were very happy that we did not simply remove the message, but decided to A/B test it (the message is still there, by the way – I was pleased when I looked it up 😀).

You Don’t Always Need Development for a Good Test

Your questions made me stop and reflect on the experiments I’ve done in my career. And I realized that many of them support one and the same idea: big changes are not always necessary to have a big impact. Tiny changes can bring remarkable results. 😀

It’s often enough to change small things (that do not necessarily require development resources, which is a problem in many companies). A good example is restyling a simple text CTA on our HubSpot blog.

We made the following changes to the CTA:

- Added an arrow as a visual cue in front of the CTA;

- Increased font size;

- Added “[Download for free]”.

Minimal effort, you’d say, but the test massively increased the click-through rate from our blog posts to our offer pages.

To put it briefly: You don’t always have to reinvent the wheel – keep the experiments short and simple!

Alexis Trammell – Chief Growth Officer at Stratabeat

Not All Behavior Data Is Equally Helpful

I can’t remember the first time this happened, but linking behavior analytics and IP detection software together was an absolute game-changer.

When we report on website traffic, it’s not enough to just look at how many visitors landed on a page. We need to know who those visitors are. Using IP detection, we could at least see if they were from the right companies.

We also need to know what those visitors are doing on the page – that’s where tools like Mouseflow come in handy.

Let’s say that IP detection shows me that a certain group of visitors comes from a university – and that means they don’t match my ideal customer profile (ICP). In this case, I can ignore their behavior data so that it doesn’t skew my findings.

This approach has positively affected our conversion rates, because we are not measuring a sample of the audience that does not matter to our campaign.

However, if the visitor does match my ICP, but they are not scrolling far enough to see my CTA button, then I can assume that there is something wrong with my page. I’ll then update the design, the buttons, or the copy on the page – and measure again.

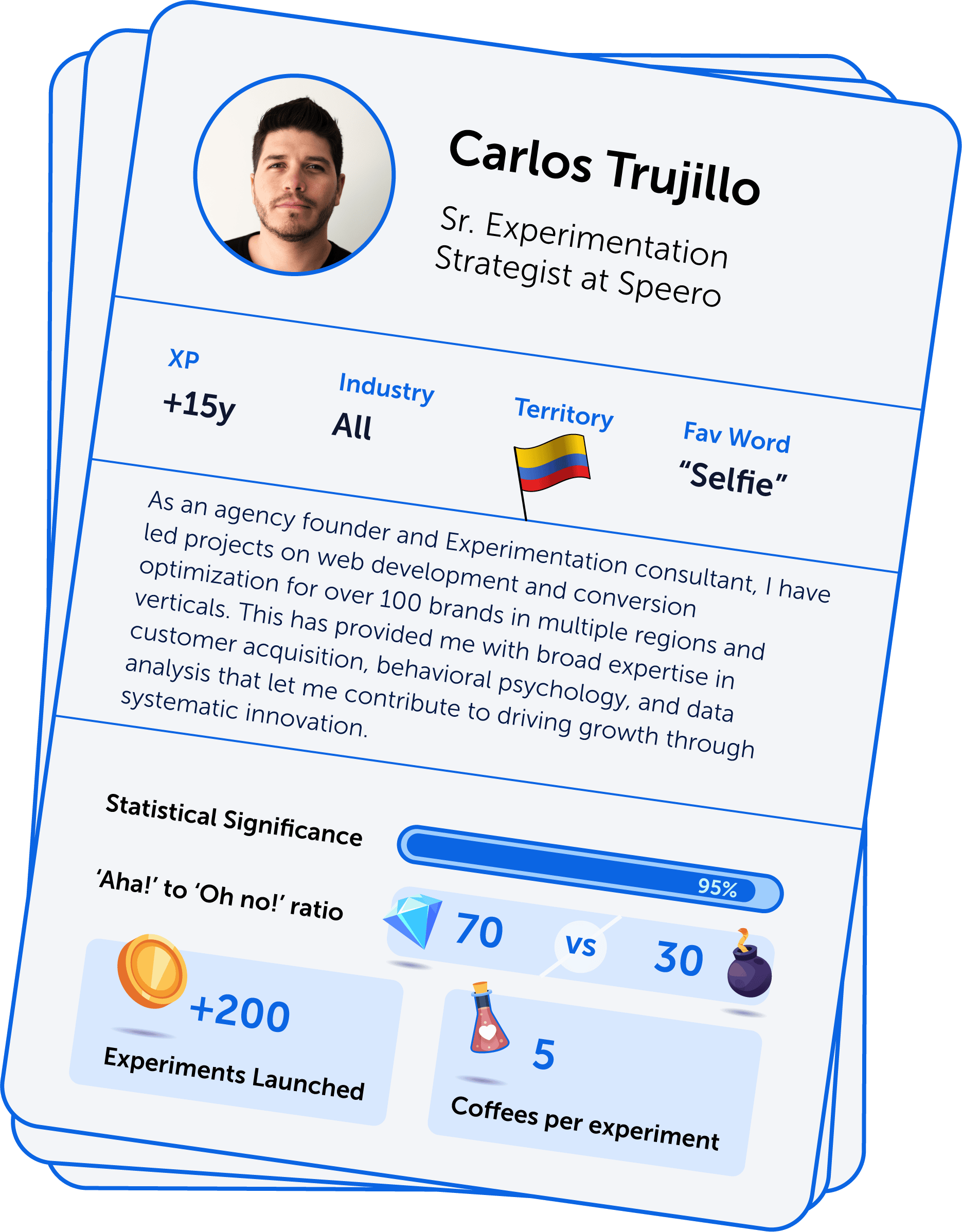

Carlos Trujillo - Sr. Experimentation Strategist at Speero

How I Learned The Importance of Strategic Testing in CRO

I remember an experiment where the client considered switching to a renowned, lower-fee payment processor for their e-commerce store.

We conducted a non-inferiority test to ensure this change wouldn’t negatively impact the business. To our surprise, the test revealed a significant transaction drop. Why? Because the new provider had a lower card acceptance rate in key markets.
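A non-inferiority test asks whether the new option is no worse than the current one by more than a chosen margin. The sketch below is one common way to set it up, as a one-sided z-test on transaction rates. The margin, the counts, and the function name are hypothetical and do not reflect the client's actual figures or the exact method used.

```python
from math import sqrt
from statistics import NormalDist

def non_inferiority_test(conv_old, n_old, conv_new, n_new, margin, alpha=0.05):
    """One-sided z-test of H0: p_new - p_old <= -margin.

    Returns (is_non_inferior, p_value). Rejecting H0 supports the claim that
    the new option is not worse than the old one by more than `margin`.
    """
    p_old, p_new = conv_old / n_old, conv_new / n_new
    se = sqrt(p_old * (1 - p_old) / n_old + p_new * (1 - p_new) / n_new)
    z = (p_new - p_old + margin) / se
    p_value = 1 - NormalDist().cdf(z)
    return p_value < alpha, p_value

# Invented numbers: the new processor converts clearly worse than the old one.
worse, p_worse = non_inferiority_test(500, 10_000, 430, 10_000, margin=0.002)
print(f"Drop scenario: non-inferior={worse}, p={p_worse:.3f}")

# Invented numbers: the new processor performs about the same.
same, p_same = non_inferiority_test(500, 10_000, 505, 10_000, margin=0.005)
print(f"Flat scenario: non-inferior={same}, p={p_same:.3f}")
```

The margin is a business decision: it states how much of a drop in transactions the lower fees would be worth.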

This outcome wasn’t a conventional ‘win’ by any means. But it was of so much value! To me, it demonstrated that responsible experimentation, with a serious approach to statistics, always yields beneficial insights, regardless of whether the results are labeled as ‘winners’ or ‘losers.’

This experience was a turning point in my career as a CRO professional. It taught me the importance of strategic testing beyond just UI/UX elements to drive substantial business growth.

Maria Luiza de Lange – CRO Lead at Tele2

Small Changes for Great Results

There were a couple of small tests that were very successful and that changed my philosophy when it comes to experimentation.

One example was a test where we added a simple phrase “tap to zoom” under the images in the image gallery on the PDP for Electrolux.se.

We could see from the data that the more consumers interacted with images, the more they converted. And the share of visitors interacting with images was much lower on mobile devices than on desktops. By increasing the interaction with images on mobile devices through adding just one simple phrase, we were able to increase conversion.

Another example is the one we did with the mobile navigation on tele2.se. Again, we just added the words “menu” and “search” under the respective icons – and that significantly increased interaction with these elements.

Both of these prove that no matter how small the change, it can still have an impact. Kind of repeating Ron Kohavi’s mantra: “Test everything, even the bug fixes”.

I also noticed that tests like these tend to energize and fascinate the whole team. They are easy to understand and bring good results.

Gijs Wierda – CRO specialist

Do You Know Why Customers Want Your Product?

Early in my CRO career, I relied heavily on data insights and marketing tactics for experiments. The pivotal moment came when I shifted focus towards leveraging customer insights for experiment ideas. This approach significantly changed my professional journey and philosophy.

A defining example was with a client selling insulation material. They believed their customers were primarily motivated by savings on heating costs.

Contrary to this belief, customer surveys revealed that the real motivation behind buying insulation was to enhance home comfort, like achieving a warmer house and stable air quality.

By aligning our marketing message with these insights, we not only achieved fantastic results but also instilled a culture of valuing customer feedback within the client’s organization.

This experience cemented the importance of understanding customer motivations in my head. It became a cornerstone of my approach as a CRO professional.

Data shows the ‘where’ and ‘when.’ To uncover the ‘why,’ utilize user feedback, customer surveys, polls, ‘over-the-shoulder’ usability research, and focus groups. These methods are invaluable for gathering deep customer insights. They significantly enhance the quality of your experiments.

Simone Schiavo – Sr Customer Lifecycle Manager at Leadfeeder

Curiosity and The Small Things

If you ask Simone Schiavo what is at the core of what drives him, he’ll tell you it’s curiosity. In his nearly decade-long career, Simone has had his share of challenges and ‘Aha!’ moments. The one thing that never changed was his ability to apply his curiosity to solve problems and repeat successes.

In the early days of his career, Simone had to learn the hard way that not everything needs to be a random experiment. While working at an online newspaper company and running an A/B test on their pricing page, he noticed a fall in the checkout metric.

The issue? Everyone was running their own experiments in a silo. During his experiment, he forgot to exclude paid traffic. At the same time, the PPC team was running a different campaign that wasn’t bringing in a lot of qualified leads. Simone says he learned that we shouldn’t think of experimentation or conversion as a stand-alone function. Don’t create silos, but instead, think of experiments as a way to empower running processes.

He had his career-changing experiment more recently, while working in the eCommerce space. The company received a lot of traffic, so the team could test a lot and reach statistical significance quickly. What did they do?

Instead of doing one huge experiment, the team focused on executing many smaller changes like removing the home page slider and reducing core page load times. Quite a few of them worked! Simone became convinced that by rolling out smaller changes, you don’t have to focus on huge breakthrough wins to see big results. Instead, aim for smaller wins that compound into something bigger.

Yes, you need to understand the basics of data and code to be successful in this field, but staying curious and applying that curiosity to what your team is working on is essential.

John Ekman – Chief Conversionista

Don’t Brag, or at Least Don’t Brag Too Early

If CRO is about learning from your mistakes, I had plenty of “learning opportunities.”

The very first A/B test I ran on my own blog almost immediately showed a winner!

I jumped on the occasion, made a screenshot, bragged about it at a conference, telling everybody what a conversion genius I was. Only to find out a few days later that the test was insignificant, once the statistical dust had settled.

I learned to keep my mouth shut until the test had run for a minimum time. This is by now pretty standard advice, but I had to learn it the hard, humbling way.

There was another memorable “learning opportunity.” The very first A/B test we ran for a client had an incredible uplift of 1200%. And yes, this one was actually significant, so it was a huge win.

Only – It was not.

The test ran on a paywall pop-up/modal on a tech news website. The test goal was the number of trial subscriptions. And the trial subscriptions started from this point actually increased by 1200%.

But there was almost no traffic to this page to begin with. We increased the number of started subscriptions from something like 1 to something like 12. A huge “micro win,” but it had absolutely no impact whatsoever on the bottom line of the client.

From this experience, I learned that not only do you need to produce great-looking results, but you also need to be experimenting in very important places. So, before you start your testing, you need to figure out what those places are.

When I talked about this test years later with the client, she said – “I know that the test was a failure, but that didn’t really matter, because you opened our eyes to experimentation and changed the way that we work forever. That was the important outcome.”

So, wait for the test to run for its minimum time, test in important places, and work with people who are in it for the long run and accept short term failures as real learning opportunities.

Chris Marsh – CRO Specialist for eCommerce at Dash of CX

The Importance of Not Giving Up

I’ve failed in many ways over the years. The very first test I was tasked with launching needed to launch at a specific time, to coincide with a newsletter. Unfortunately, back in 2017, the tools were quite clunky, and I thought I’d launched it… but I hadn’t.

Thankfully, the client was understanding, and the agency trusted their gut to keep me. Since then, I’ve become very detail-focused, diligent, and have been called a “QA madman” on some occasions!

For one client, we learned how important prices and discounts were to their audience, so we tested various ways to showcase the discount on the product page. Multiple variations and iterations failed: showing a % discount, $ off, or both – all failed.

Then, we tried other solutions and a wildcard idea – displaying the message “Now on sale” instead of a discount figure. And that finally produced a winning solution.

This taught us the importance of iterating. And, when possible, it’s great to run an A/B/C/D test – you’ll try more ideas and interesting solutions and have more chances to win!

Laura Algo – Conversion Specialist at Dash of CX

Research Could Be Hard, But It’s The Right Way

Utilizing research for CRO can be really difficult. But it is the only right way. It can be overwhelming to get through the data. But as you go, many good test ideas start forming in your head. And these are real opportunities to improve the user experience.

It can also be hard to convince the clients to act based on research. It depends a lot on the company culture and stakeholders. Sometimes, clients need reminding and encouragement to take action on the research, rather than chase a shiny new idea. But user research-based tests have proven time and again to have a higher probability of winning. As CROs, we need to remind the clients about that and try to steer them towards running meaningful experiments.

Yes, sometimes, even when I find these nuggets in the research data, I still can’t get the stakeholders on board. When that happens, it feels like I’ve failed the users.

But most of the time, when the data is presented with quotes or recordings, with proof, then the stakeholders have no other choice than to listen!

I recently did a large research project for a client, and the insights fed directly into a landing page redesign. While the research was hard, it actually made the redesign easier! We had theories on what resonates with the website visitors and used their own phrases and language in the copy.

The test produced a great win for the client, and helped show the value of research-based CRO.

Johann Van Tonder – CEO at AWA Digital

Wow, That “Talking to Customers” Thing Actually Works!

“Fix them, sell them, or kill them.” – this was the brief from my boss at a global internet investment firm.

I’d been given a small portfolio of stagnant online businesses. It was the early days of eCommerce: we all copied each other, but nobody knew what they were doing.

I did what people in corporate normally do – throw money at agencies and consultants. Heaps of money. No results.

Almost out of desperation, we started talking to customers. We had no formal methodology, just got on the phone with them and found out about the problems they were facing that brought them to our products.

The insights were pretty unexpected, certainly not in line with ideas from our internal brainstorming sessions.

I remember the reluctance with which we started implementing some of the changes. I also remember the disbelief in the results – real money in the bank, not just conversion rates or some fuzzy metric.

A/B testing tools were a new invention back then. The main reason we started testing was to speed up the deployment cycle. In the process, I discovered that some of the “no brainers” didn’t actually make any difference.

It’s such a simple discovery, but it fundamentally changed the trajectory of my career and life. The power of understanding the world of your customer, coming up with theories about how to improve their experience, and validating that thinking – this approach became such a revelation! To this day, thousands of experiments later, I still have that feeling of magic: “Wow, this actually works!”

Michal Eisik – Founder & Copy Chief at MichalEisik.com

Be Ready to Be Criticized

CopyTribe — my copywriting certification program — came close to closing its doors before those doors even opened.

For the beta cohort 6 years ago, my co-founder and I rolled out a sales page with extremely vivid copy. The page painted a bleak picture of the financial reality of magazine & content mill writers who work for pennies (in contrast to copywriters, who typically earn more).

The pain points hit so hard that they offended people. Some readers felt we were twisting a knife into their reality. We got a number of angry emails.

It felt like a punch in the gut. Just when we’d found the courage to roll out something exciting and transformative, we were hit with scathing put-downs. We were demoralized and embarrassed. It seemed like a total failure.

But — and here’s the big but — we ultimately got 25 sign-ups for that first cohort. The program was a huge success, and CopyTribe has since trained and certified over 500 copywriters — many of whom are earning 400% (or even 1000%) more than they did at their previous jobs.

Today, my programs & products comprise 50% of my business. But on a deeper level, I learned a leadership truth: when you do anything impactful in this world, you’re going to be criticized.

Negative feedback is not an “if” — it’s a “when.” Embrace the criticism, pick out the pieces in your work that require change, and make those changes without letting them cripple you. Then, you have a chance of really changing something in the world.

Ruben de Boer – Owner at Conversion Ideas

Embracing the Scientific Approach to Everything

I ran a series of experiments for a furniture e-commerce shop in 2016 – and they changed my approach forever.

I had been in CRO for many years, but this was the first time I adopted a fully hypothesis-driven approach with many insights from scientific literature. I changed how I documented tests and insights to learn more about the user’s needs and motivations.

It led to a very successful CRO program, with many winners and key insights for the whole business. These insights were, for instance, also used in offline marketing.

Ever since, I have firmly believed in a hypothesis-driven approach to CRO (instead of random testing based on so-called best practices), both to truly learn from all experiments and to keep excellent documentation.

Also, scientific literature became one of my most essential sources for optimization research. I even created a free online course, ‘CRO Process and Insights in Airtable,’ so others can benefit as well.

Fortunately, soon after, Online Dialogue approached me to become a consultant on their team. As they had been working this way at a world-class level for many years, it was a no-brainer for me to accept their offer.

Furthermore, in my personal optimization (like health, fitness, and sleep), I am now more focused on books and teachers who take science into account to get better results for myself.

Ben Labay – CEO at Speero

It’s The Simple Things

For almost 3 years, I ran experiments for Native Deodorant, now a Procter & Gamble brand. I worked alongside Moiz Ali in testing before and through the acquisition by P&G.

There were two impactful tests there that really stood out for me:

Test #1 was on the Product Detail Page (PDP). It was the scent badges. They are still there today – check them out.

I was inspired by Bombas socks – they had color swatches on the page, and I thought: Why couldn’t we do this for scents?

So, we tested using the swatch approach for deodorant scents vs. a simpler setup, where the names of the scents were all within a dropdown.

The test itself wasn’t a big winner. But what it did was set the stage for tons of great tests afterwards. Highlighting, promotions, anchoring, subscription, and upsells were all tests that were derived from this scent-swatch-based layout.

Test #2 was even more career defining. We tried to build a user-behavior-triggered upsell and cross-sell mechanism (pop-up) after someone added an item to the cart on the PDP. This led to six-digit daily lifts in revenue – it was clear that the pop-up was making a difference.

This was such a pivotal test for me because it did two things:

- It taught me the power of motivation. Deodorant is a ‘convenience’ product: people buy it often, so they know what buying it feels like. Therefore, people don’t shop around, they just buy. Conversion rates were high, so focusing on AOV via those PDP-to-cart transitions was huge.

- It taught me about the intent + touchpoint logic – hitting the user with the right info at the right time. This simple test is what I refer back to and think about constantly even with the B2B personalization work that I do today. Visitors are always receptive if you speak to them in the right way at the right time. This experiment taught me to focus on simplification and clarification in all testing.

Anthony Mendes – Head of Strategy at Get Social Labs

A Win Is Building Paths Through Failed Experiments

Most failures with experiments turn into successes one way or another. We’re led to believe that successes often take shape as a growth hack or a home run experiment – but that’s not true.

CRO as a whole has taught me that to succeed is to build many small paths through failed experiments.

No one knows a thing – until it’s tested. And even those tests can vary dramatically depending on who runs them!

Example: there’s a recent experiment that I ran for a SaaS client.

We created a new version of a campaign where we changed the motivation for the user to take action.

At first, we thought it’d be a failure, as the number of daily leads we were getting was lower than in the control version.

But this month, we found out that the conversion rate of the variant’s leads into sales was six times higher. The reason behind this is that leads arrived at sales reps primed for a specific conversation to happen.
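To make that arithmetic concrete, here is a minimal Python sketch of judging a test on sales rather than on leads alone. All visitor, lead, and sale counts below are hypothetical (they are not the client's data); they are chosen only so that the variant gets fewer leads but converts them to sales at six times the control's rate. The `FunnelArm` class and its property names are illustrative, not part of any analytics tool.

```python
from dataclasses import dataclass


@dataclass
class FunnelArm:
    """One arm of an A/B test, tracked from visit through to closed sale."""
    name: str
    visitors: int
    leads: int
    sales: int

    @property
    def lead_rate(self) -> float:
        # Share of visitors who became leads (the "top of funnel" metric).
        return self.leads / self.visitors

    @property
    def lead_to_sale_rate(self) -> float:
        # Share of leads that the sales team closed.
        return self.sales / self.leads

    @property
    def sales_per_visitor(self) -> float:
        # End-to-end rate: what the business ultimately cares about.
        return self.sales / self.visitors


# Hypothetical numbers for illustration only.
control = FunnelArm("control", visitors=10_000, leads=500, sales=10)
variant = FunnelArm("variant", visitors=10_000, leads=300, sales=36)

for arm in (control, variant):
    print(
        f"{arm.name}: lead rate {arm.lead_rate:.1%}, "
        f"lead-to-sale {arm.lead_to_sale_rate:.1%}, "
        f"sales per visitor {arm.sales_per_visitor:.2%}"
    )
```

With these made-up inputs, the variant loses on lead rate but wins on sales per visitor, which is the pattern described above. In a real test, you would also check that the difference in sales is statistically reliable before declaring a winner.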

Andy Crestodina – Co-Founder and CMO at Orbit Media

Best Practices Are Good Hypotheses

This was a realization that changed my approach to digital. It came from a conversation I had with the one and only Brian Massey, and it helped put things in perspective.

The goal in digital isn’t to use best practices. Sometimes, they don’t work at all. Putting them in place is just the beginning. Checking to see the impact is the next step.

Too many marketers apply a best practice – and move on. The best marketers pull ideas from everywhere, including best practices and weird little schemes. Then, they prioritize based on likely impact and level of effort. Then, they act, measure, and iterate.

This realization has changed my career as a marketer and optimizer.

Best practices are not truths set in stone. They are good hypotheses to test.

Monique Poché – SaaS Conversion Copywriter

Change That Copy

I had a client reach out to me regarding a sales campaign that wasn’t working. It was a Facebook ad that took people to a landing page for a free quote on credit repair.

They had tried everything – from changing the Facebook audience to switching around the messaging. Nothing worked.

When they approached me, it didn’t seem like there was a whole lot more that I could do… until I looked at the copy. It was very impersonal and didn’t touch on their target audience’s pain points at all.

I reworked the messaging both on the ad and the landing page, even though my client didn’t think it would work given their recent failures.

By matching the messaging better between the ad and the landing page, and driving home the pain points without going overboard, my efforts were a success. The ad went from 30 impressions in two weeks to 131 in 6 hours. The landing page went from about a 5% conversion rate to 57.5%.

That’s how I learned that the copy and messaging can always be improved!

And it made me realize that it’s not a bad idea to suggest tweaking the copy regardless of where my clients are at in the testing/monitoring phase.

A fresh set of eyes can do wonders when it comes to optimizing conversions. Some people get so immersed in what they’ve always done, they might be missing a key element that makes all the difference.

This philosophy has definitely shaped my career as I help people optimize copy through audits and rewrites. It often enhances their results – and I’m always learning something new throughout the process.

Tyler Mabery – Director of CRO at LaserAway, Co-Founder at CampaignTrip

I come from a design background, and when I was first starting out in CRO, I was always extremely focused on design over everything else. This story is about how I unlearned that habit.

I had a client that provided a government-type service where you could order your birth certificate online.

Their landing page was extremely dated from a design perspective. I’m talking about those blue underlined hyperlinks, Windows 98 feel, etc. And they really wanted a complete redesign to modernize the look and feel.

Being new to CRO and super excited to flex some design skills, I jumped in and created a whole new landing page. No iterative testing – I went full speed ahead with a complete overhaul. The page offered the same process, but a revamped modern look.

We were all excited, went through the revision rounds, and launched the new design as an A/B test. You people who are into CRO already know what happened next.

All this effort – and the results tanked. The new page’s performance went down significantly.

This was heartbreaking. We spent so much time perfecting the page – all based on our gut feelings. All these “I think we should” and “I feel like”, you know.

It then clicked for me.

This was a government service, and government pages usually aren’t sexy. They are sterile and boring. I hypothesized that users were expecting things to look a certain way from the UI perspective when dealing with a government website.

We then made the page ugly and started iteratively testing things that mattered more to users when dealing with a service like this, such as trust badges and guarantees: things that would make users feel safer ordering such an important piece of paper.

As you might imagine, we were able to score a ton of wins.

This really changed my CRO journey. I stopped being so overly focused on design. A lot of wins come from sticking to the basics and not trying to be novel. Back up your claims, keep things simple, and reduce friction.

While this was super heartbreaking at the time (I hate to see a good design lose), I’m thankful I got to learn this very early in my career. I still love design, but it’s a small piece of the pie… not the whole thing.