Surveying website visitors has a lot in common with journalism. A well-known best practice says that every news article should answer five questions: who, what, where, when, and why. These are often referred to as the 5 Ws of Journalism.

When it comes to surveys and user feedback, the same Ws are just as important. For the data that you collect to be valuable and actionable, it matters:

1) who you ask;

2) what you ask;

3) where and when you ask.

So, let’s dive into the strategies that help you narrow down the list of respondents for maximum impact, ask the right questions, and determine the right time and place for feedback or survey prompts. Basically, we’ve prepared a 101 guide for creating customer feedback surveys that work.

Who: How to Segment Users for Better Targeting

While some companies that offer feedback survey tools promote the idea of asking everybody to provide feedback, our position is that segmenting the users and only asking some of them for feedback can significantly improve the quality of the responses that you get.

How do you choose whom to ask?

1. Understanding Your Audience – It’s Not Only Demographics:

Go beyond traditional segmentation like age and location. Consider psychographics (interests, values, lifestyle) and behavioral data (website interaction, purchase history). For instance, you can segment only returning users who visited a particular page on your website.

2. Getting Behavior Insights From Analytics Tools:

Use tools like Mouseflow to track user behavior on your site. Segment users based on their interaction patterns, like those who hover their cursor for some time over a button, but don’t click. Their feedback can differ significantly from what others say.

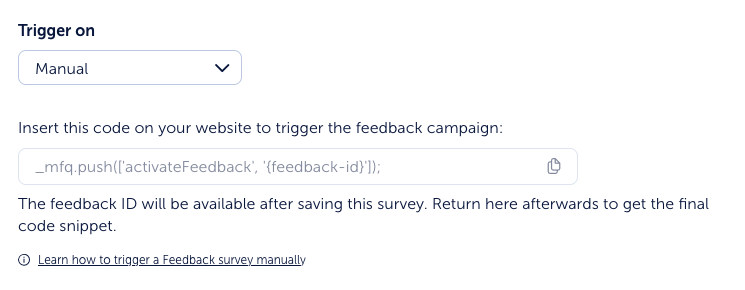

💡Pro Tip: with Mouseflow’s user feedback tool, you can manually trigger feedback surveys. Manually means that a survey is triggered once certain code is executed on a page. This gives you a lot of flexibility in triggering surveys based on user interactions with elements, be it sliders, carousels, forms, or something else – any custom event can trigger a survey.

Mouseflow settings for triggering surveys manually

3. Custom Segmentation for Specific Campaigns:

Another way to create segments is for specific marketing campaigns or product launches. For example, with Mouseflow you can segment users based on UTM parameters such as campaign, source, medium, etc.

This way you can, for example, request feedback only from visitors who come from retargeting campaigns. Their feedback could be more valuable than what you’d get from surveying all users.
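To make the idea concrete, here is a minimal sketch of how UTM-based targeting could work on your own side, outside of any particular tool. It reads the `utm_*` parameters from a landing-page URL using Python's standard library. The function names and the rule that retargeting campaigns contain the word "retargeting" in `utm_campaign` are assumptions for illustration, not Mouseflow's implementation.

```python
from urllib.parse import urlparse, parse_qs


def utm_params(url: str) -> dict[str, str]:
    """Return only the utm_* query parameters of a landing-page URL."""
    query = parse_qs(urlparse(url).query)
    # parse_qs returns a list of values per key; keep the first one.
    return {key: values[0] for key, values in query.items() if key.startswith("utm_")}


def is_retargeting_visitor(url: str) -> bool:
    """Decide whether a visitor belongs to the retargeting segment.

    Assumption: retargeting campaigns are tagged with the word
    "retargeting" somewhere in utm_campaign. Adapt to your own naming.
    """
    campaign = utm_params(url).get("utm_campaign", "")
    return "retargeting" in campaign.lower()
```

With a rule like this, a visitor landing on `?utm_source=google&utm_campaign=spring_retargeting` would be in the segment, while a visitor with no UTM parameters would not.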

4. Feedback Loop for Continuous Improvement:

You can use survey responses to refine your segmentation. For example, if feedback from a particular segment indicates a common issue, refine that segment to target them with more specific surveys or solutions.

Using these segmentation strategies, you can target your surveys more precisely, leading to higher quality feedback and more actionable insights.

When and Where: Choosing the Right Time and Place for Feedback Surveys

If a survey pop-up appears at the wrong time, users will simply close it. So, you need to find the sweet spot for timing your surveys to improve response rates and relevance.

1. Identify Key Interaction Points:

Start by mapping out your customer’s journey on your website. Identify critical interaction points – such as after a purchase, a service interaction, or a significant browsing session. That’s how you get the list of points in the user journey where you can potentially survey the users.

There are some interaction points in the user journey where you can’t survey the users, because you don’t control the platform. When they see your ad on Google, you can’t survey them. When they check reviews on G2, you can’t survey them either.

But sometimes they leave other types of feedback like reviews on the very same G2 or posts on social media that you can also use to get valuable insights. Make sure to collect and process those as well.

2. Post-Interaction Timing:

The best time to deploy surveys is shortly after key interactions. For instance, post-purchase surveys should ideally pop up immediately after the transaction is complete. For general browsing feedback, consider triggering a survey after a user has spent a significant amount of time on your site.

We show our own CSAT Survey pop-up only when users scroll the page almost to the very end

3. Avoid Disruption:

Ensure that your survey doesn’t interrupt critical tasks. For example, avoid presenting a survey in the middle of the checkout process or when a user is deeply engaged with content.
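The timing rules above can be written down as a simple decision function. The sketch below is only an illustration: the blocked paths, the 90% scroll threshold, and the two-minute time threshold are all assumed values that you would replace with ones that fit your own site.

```python
# Assumed values for illustration only; tune them to your own website.
BLOCKED_PATH_PREFIXES = ("/checkout", "/cart")  # critical tasks: never interrupt
MIN_SCROLL_FRACTION = 0.9    # "almost to the very end" of the page
MIN_SECONDS_ON_SITE = 120    # a "significant" browsing session


def should_show_survey(path: str, scroll_fraction: float,
                       seconds_on_site: float, just_purchased: bool = False) -> bool:
    """Return True if now looks like a reasonable moment for a survey prompt."""
    # Right after a completed purchase is a key interaction point.
    if just_purchased:
        return True
    # Don't disrupt users in the middle of a critical task.
    if path.startswith(BLOCKED_PATH_PREFIXES):
        return False
    # Otherwise wait until the user has scrolled far or stayed long enough.
    return scroll_fraction >= MIN_SCROLL_FRACTION or seconds_on_site >= MIN_SECONDS_ON_SITE
```

Keeping the rule in one place like this makes it easier to adjust later, when you review how the survey performs.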

4. Continuous Monitoring and Adjustment:

Regularly review the performance of your surveys. Be prepared to adjust your timing based on changes in user behavior, website updates, or new offerings.

Here are some of the metrics you should be looking at:

- Impressions. That’s the overall number of times your website visitors saw your feedback prompt. If it changes drastically, something is likely going wrong either with the website, or with the feedback tool you’re using.

- Response rate. It’s the number of responses divided by the number of impressions. According to Vivien Le Masson, Senior Director at Kantar, a marketing and analytics agency, a good response rate is considered to be between 5% and 30%. But it depends heavily on the industry you’re in and the type of surveys you’re using. If the response rate drops, it might mean that your survey started appearing at the wrong moment, or is targeted at the wrong audience.

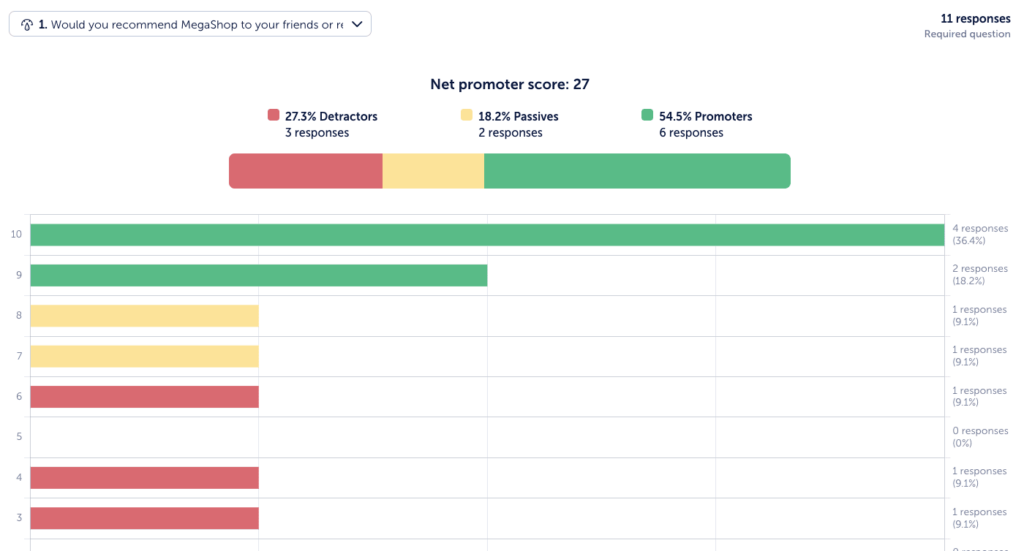

- NPS, aka Net Promoter Score. That’s a metric based on customer responses to the question of whether they would recommend a company, product, or service to somebody. If NPS is a part of your surveys, watch out for its fluctuations. NPS is closely connected with customer satisfaction and has a direct impact on revenue.

How NPS Survey results look in Mouseflow.
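If you export the raw numbers, two of the metrics above are easy to compute yourself. The sketch below assumes the standard NPS convention: answers on a 0 to 10 scale, where 9 and 10 count as promoters and 0 to 6 as detractors. The function names are our own, chosen for illustration.

```python
def response_rate(responses: int, impressions: int) -> float:
    """Number of responses divided by number of impressions (0.0 if no impressions)."""
    if impressions == 0:
        return 0.0
    return responses / impressions


def net_promoter_score(scores: list[int]) -> float:
    """NPS = percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one score to compute NPS")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)
```

For example, 45 responses out of 900 impressions is a 5% response rate, and the ten scores `[10, 9, 9, 8, 7, 6, 3, 10, 5, 9]` contain five promoters and three detractors, which gives an NPS of 20.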

What: Crafting Questions to Get Actionable Insights

Now that we have the when, where, and who figured out, there’s one more aspect remaining that may actually be the most important one: What exactly should you ask to get quality feedback? If you use leading questions or if they are just poorly formulated, the whole feedback collection affair could become pointless. How do you make sure that it doesn’t?

1. Define the Objective:

Clearly identify what you want to learn from each question. This could be about user preferences, opinions, experiences, or behaviors – whatever it is, there should be a clear objective, and just one per question.

2. Don’t Overuse Open-Ended Questions:

Open-ended questions can provide deep insights and be a real treasure trove of information. But they are also taxing for respondents – with too many of them, the response rate of your survey could become very low. So, balance open-ended questions with closed-ended ones to make it easier for the users to respond.

3. Avoid Leading Questions:

Craft your questions to be neutral to avoid influencing responses. Find and eradicate suggestive language in your questions that might lead respondents to a particular answer. Otherwise, you’ll only hear what you wanted to hear, and the data will be useless.

4. Be Specific and Clear:

Ensure each question is straightforward and unambiguous. Avoid using jargon or complex terminology that might confuse respondents.

💡Get inspiration from our bank of 30 best feedback questions.

5. Consider the Order of Questions:

The sequence in which you ask the questions can influence responses. Start with broader questions and gradually move to more specific ones.

6. Pilot Test Your Survey:

Before full deployment, you may consider testing your survey on a small, representative sample of your audience to ensure that they understand the questions as intended and give you the type of insights you seek.

7. Analyze for Cognitive Patterns:

When reviewing responses, look for patterns in how respondents interpret and answer questions. This can provide additional insights into cognitive processes and their perspectives.

Knowing how to design questions the right way helps you come up with ones that not only get responses, but also give you deeper insight into how your customers think, enhancing the quality of the feedback that you receive.

However, there’s one more thing…

Bonus Track – How to Improve Surveys with Iteration

Just like with pretty much everything else, when designing surveys, you get the best results when you apply an iterative approach. You can test different versions of questions, layouts, and timings to see which yields the most honest and helpful feedback.

Here’s the framework that Jacinda Mariah Camboia, customer success manager at Mouseflow, recommends to customers.

How to Do Iterative Survey Design:

- Start with designing a survey however you want and running it for a certain period of time. What you need at this stage is to collect some responses and track the key metrics for the survey. Set a realistic goal for the number of responses – just 5 could already give you something to work with.

- Analyze the metrics and the replies. Understand if your survey got enough impressions, if the response rate was OK, and if the replies give you the information that you wanted to get. Also, consider the sentiment of the responses. That should give you an idea about what you want to tweak in the survey to improve it.

- Choose one aspect that you’d like to test, and create a variation of the survey that is different from the original in just this aspect. It could be a question wording, layout, timing – whatever. But never test two things at the same time, otherwise you won’t get a clear picture of what change influenced the results.

- Run the variation of the survey that you’ve created for the same period of time.

- Analyze the results: Compare response rates and quality of feedback between the two versions.

- At that point, you can draw conclusions: Determine which version performed better based on your specific goals (e.g., higher completion rate, clearer feedback). Be mindful of timing though: if one of the variations ran during the holiday period, the metrics could be different just because of how people’s priorities change at this time. If you think that timing might have influenced the results, it makes sense to run the variation of the survey again.

- Pick the version that performed better – and repeat the process, choosing a different tweak to test.

- Don’t forget to document and share your findings. Keep a record of your tests and outcomes to inform future survey designs and share insights with your team.

This iterative process allows for continuous improvement in survey design, ensuring you collect the most accurate and valuable feedback from your audience.
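The comparison step of this framework can be sketched in a few lines. This is a simplified illustration, not a statistical test: it only compares response rates, and the dictionary layout, the function names, and the minimum of 5 responses per version are assumptions. Quality and sentiment of the replies still need a human read.

```python
from typing import Optional


def rate(result: dict) -> float:
    """Response rate of one survey version (0.0 if it had no impressions)."""
    if result["impressions"] == 0:
        return 0.0
    return result["responses"] / result["impressions"]


def pick_better_variant(original: dict, variation: dict,
                        min_responses: int = 5) -> Optional[str]:
    """Return the name of the version with the higher response rate.

    Returns None when either version has too few responses to compare.
    On a tie, the original is kept.
    """
    if min(original["responses"], variation["responses"]) < min_responses:
        return None
    # max() returns the first item on ties, so the original wins a draw.
    return max((original, variation), key=rate)["name"]
```

For instance, if the original got 60 responses from 1,000 impressions and the variation got 85 from 1,000 over an equally long period, the function returns the variation's name. The winner then becomes the baseline for the next tweak.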

Conclusion

Having a clear understanding of whom to ask, what to ask, and when and where to ask it allows you to craft better surveys that can help you delve deeper into customers’ mindsets.

Learning the importance of user feedback is the first step to catering better to your customers. Understanding the importance of asking the right questions, at the right time, and to the right people brings the insights that you get to a whole other level.

Finally, iterating can help you polish your surveys, achieving better response rates and more relevant feedback. That’ll give you a strong foundation to improve customer relationships and offer them what they really want.