“Data-driven” was the main business buzzword not so long ago, but it’s being gradually replaced by a new one – “customer-centric.” Buzzwords don’t appear out of nowhere – for organizations, being data-driven and customer-centric is a huge competitive advantage, if not a recipe for success.

For instance, according to Gartner, 80% of organizations expect to compete mainly based on customer experience (CX). In turn, as per PwC, 73% of customers list CX as an important factor when buying from a company.

An organization becomes customer-centric by collecting customer feedback and making sure to act on it. Feedback is what drives meaningful changes in product, marketing, and pretty much every other department.

In this guide, we discuss everything related to customer feedback:

- What it is

- Why it is important

- The different types of user feedback

- User feedback metrics

- How to collect user feedback

- How to analyze user feedback

- What questions to ask

- And more.

What is User Feedback

User feedback is qualitative and quantitative data from customers regarding what they like or dislike, think, feel, or want to change in a product or service.

Basically, it’s a collection of opinions from customers or prospective customers. They can share these opinions when explicitly asked (direct feedback) or on their own initiative, without being asked (indirect feedback).

There’s also inferred feedback, which doesn’t involve users explicitly saying anything. Instead, it’s derived from user behavior patterns.

Why Feedback is Important

The “but why should I care?” question hardly applies to user feedback. Almost everybody understands that it’s important for any organization that wants to thrive.

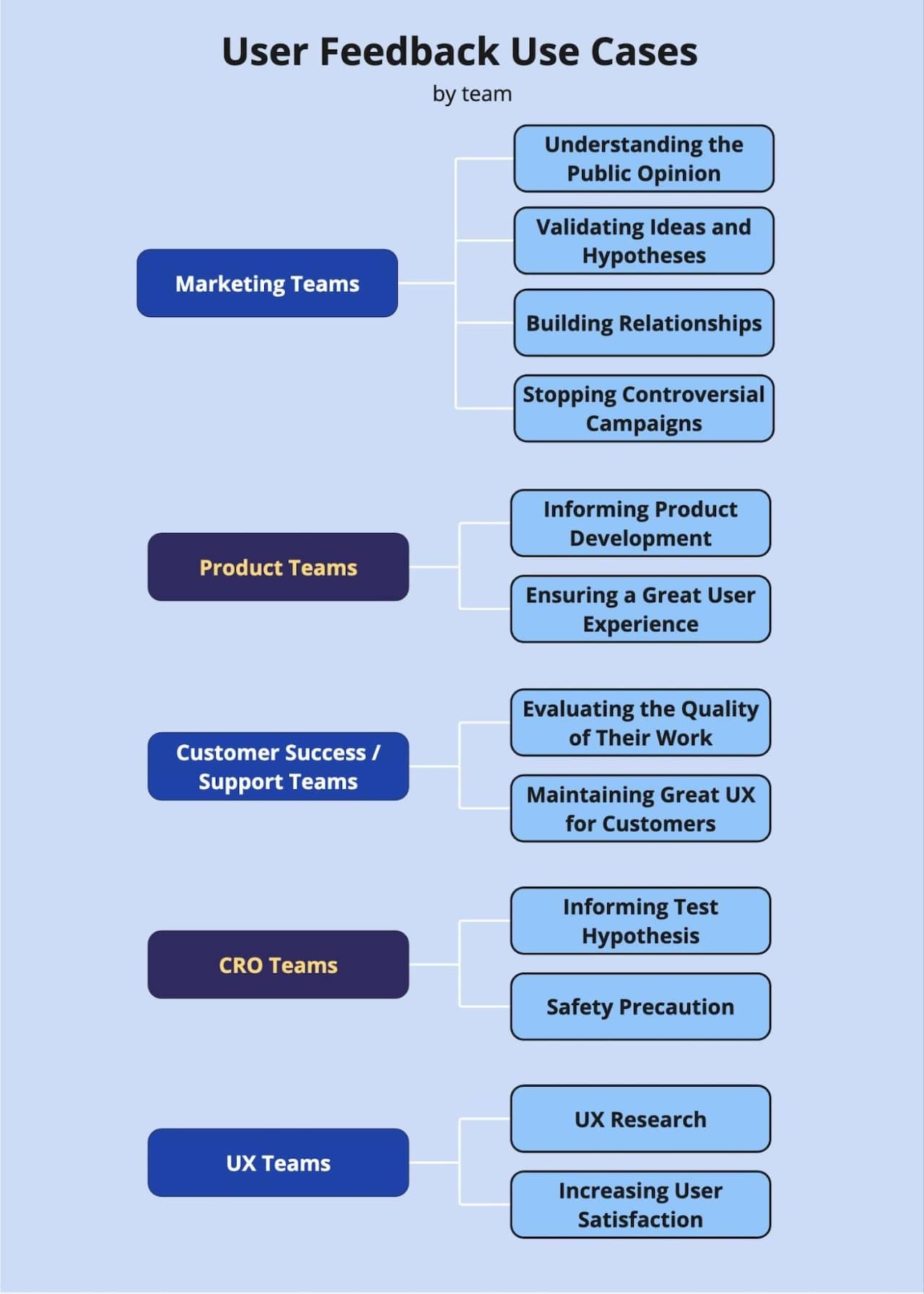

There are, however, plenty of different applications for user feedback, and they vary from team to team within the organization.

For Marketing Teams

Understanding the Public Opinion

Among all of the organization’s departments, marketing is likely to care the most about the company’s image and how the target audience perceives its products. Collecting feedback – in its many forms, from customer reviews to support tickets – is the most direct way to get answers.

Validating Ideas and Hypotheses

Marketing is always experimenting with new campaign ideas and channels. Collecting feedback is a way to validate if an idea is going to work well for the target audience.

Building Relationships

B2B marketing is becoming more and more about building personal relationships with clients. For a relationship not to be one-sided, marketing can collect feedback from the audience they are engaging with.

Stopping Controversial Campaigns

Sometimes a campaign goes wrong, accidentally conveying the wrong message and sparking unwanted debate. Monitoring social media for people’s opinions on the campaign helps marketers catch such situations early and stop the campaign before it does more damage.

For Product Teams

Informing Product Development

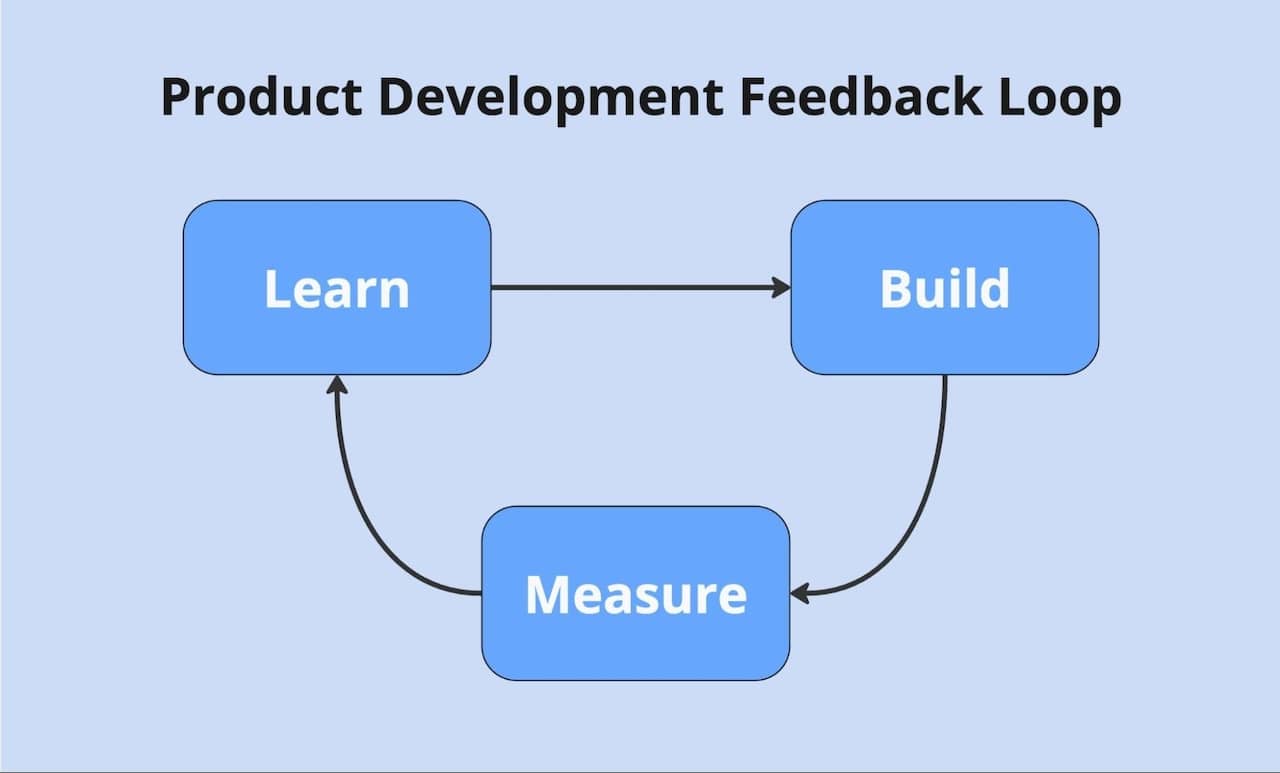

User feedback is the product team’s holy grail – it allows them to establish build-measure-learn feedback loops and develop the product in an iterative manner. Each iteration triggers a new user feedback collection process that, in turn, creates a basis for further feature development.

This way, they can stick to lean development methodologies and create a product that users actually want and would pay for.

Ensuring a Great User Experience

Feedback is not only about feature requests but also about making sure the product is easy to use and does its job without causing frustration.

Collecting feedback such as the customer satisfaction score (we’ll get to what exactly that is a little later) gives the company an idea of how good the customer experience is.

For Customer Success/Support Teams

Evaluating the Quality of Their Work

The main function of customer success and support is making sure the customers are happy with the product. And the easiest way to know if they are happy is by asking them. That’s why every time you talk to a customer support representative, you’re asked to evaluate their work afterward.

Maintaining Great UX for Customers

Customer Success and Support are often the most customer-facing departments in the organization, so they are the primary source of actionable insights about what the customers want.

By collecting and processing customer feedback, customer success helps the product department steer the development in the right direction.

For CRO Teams

Validating Hypotheses with Data

Conversion Rate Optimization (CRO) teams rely heavily on data to form hypotheses about how to improve conversion rates. Various kinds of user feedback are among the data sources they usually use. Feedback from users can highlight pain points in the customer journey that might not be immediately obvious through quantitative data alone.

Relying on feedback, CRO teams can identify these pain points and run experiments to address them, increasing conversion rates.

Preventing Negative Impacts

User feedback also serves as a safety net and quality assurance (QA) tool for CRO teams. Running feedback surveys during an A/B test provides the users with a channel to communicate with the team if their experience changes for the worse.

A significant amount of negative feedback during an experiment can be reason enough to stop it. And if the volume isn’t critical, CRO experts still take this feedback into account when planning further experiments.

💡Pro tip: Mouseflow allows you to watch session replays for the users that interacted with the feedback widget. This way, if somebody reports a problem using the feedback widget, you can get a recording that provides a lot of details about the problem. Development teams use the combination of session replay + feedback when deploying significant changes to websites.

For UX Teams

Conducting UX Research

UX professionals rely on user testing – which is also a form of feedback – to understand what the users think. By asking targeted questions about specific interactions, they can gather detailed insights about what the users want and need.

This feedback forms the foundation for UX research and helps in designing more intuitive interfaces.

Improving User Satisfaction

If you look at a UX team’s OKR list, you will often find customer satisfaction there. By regularly collecting and analyzing feedback, UX professionals can identify areas where the user experience falls short and make the necessary adjustments.

This iterative process keeps the product user-friendly and aligned with user needs, which naturally increases customer satisfaction.

💡Idea: Use friction score as a trigger for a survey. If a user experiences a lot of friction events, ask them whether everything is working fine. It likely isn’t, and this way you’ll get a bug report. This also helps prevent user churn, as not every user proactively reaches out to customer service when they run into problems.

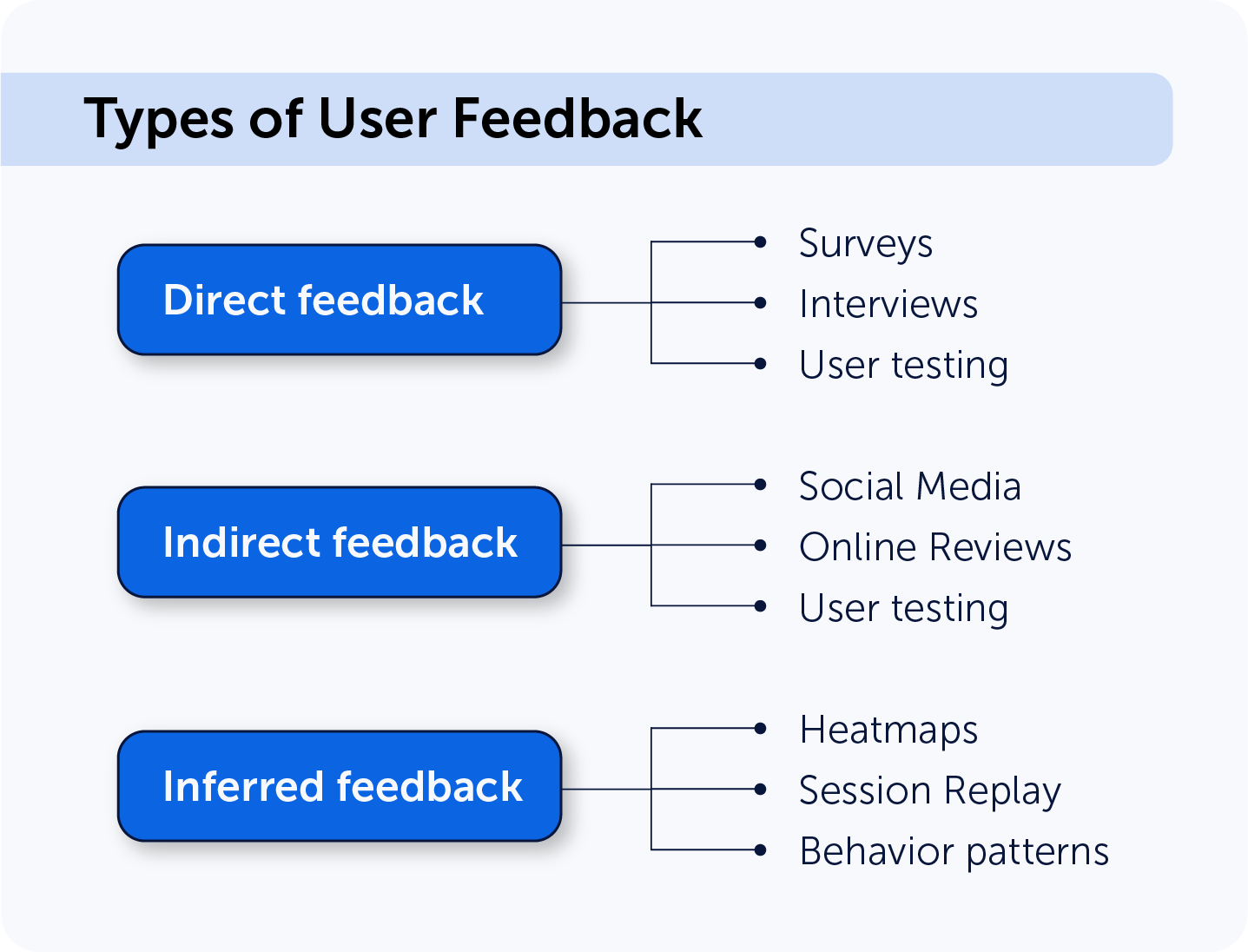

The Different Types of Feedback

A lot of things fall under the umbrella of user feedback, and some of them are not very intuitive. So, it’s good to understand the main types of user feedback:

Direct feedback

That’s the feedback the users give you because you explicitly ask for it. Here are some examples of direct feedback:

- surveys,

- scores (like NPS or CSAT),

- customer interviews.

This type is also known as active or proactive feedback.

Indirect feedback

That’s the feedback users give you even though you didn’t explicitly ask for it. Examples of indirect feedback include:

- reviews and ratings,

- posts on social media,

- customer support interactions.

This type is also known as passive feedback.

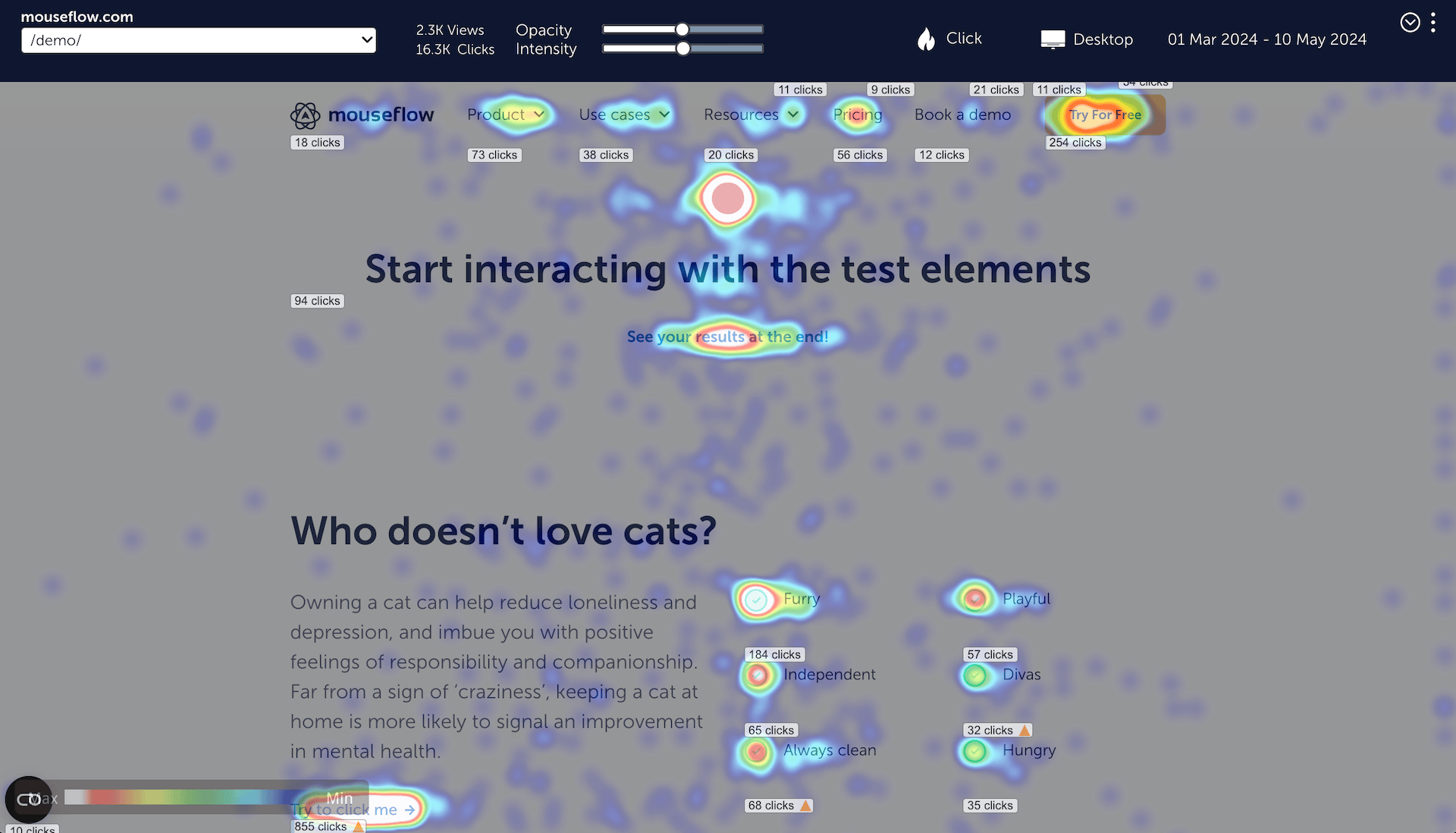

Inferred feedback

This type is derived from customer experience data rather than from anything users tell you. You can get this type of feedback from:

- heatmaps,

- session replays,

- usage patterns.

It’s just as important as the other types of feedback because users often don’t say how they feel or what they want – sometimes because they don’t want to, sometimes because they actually don’t know.

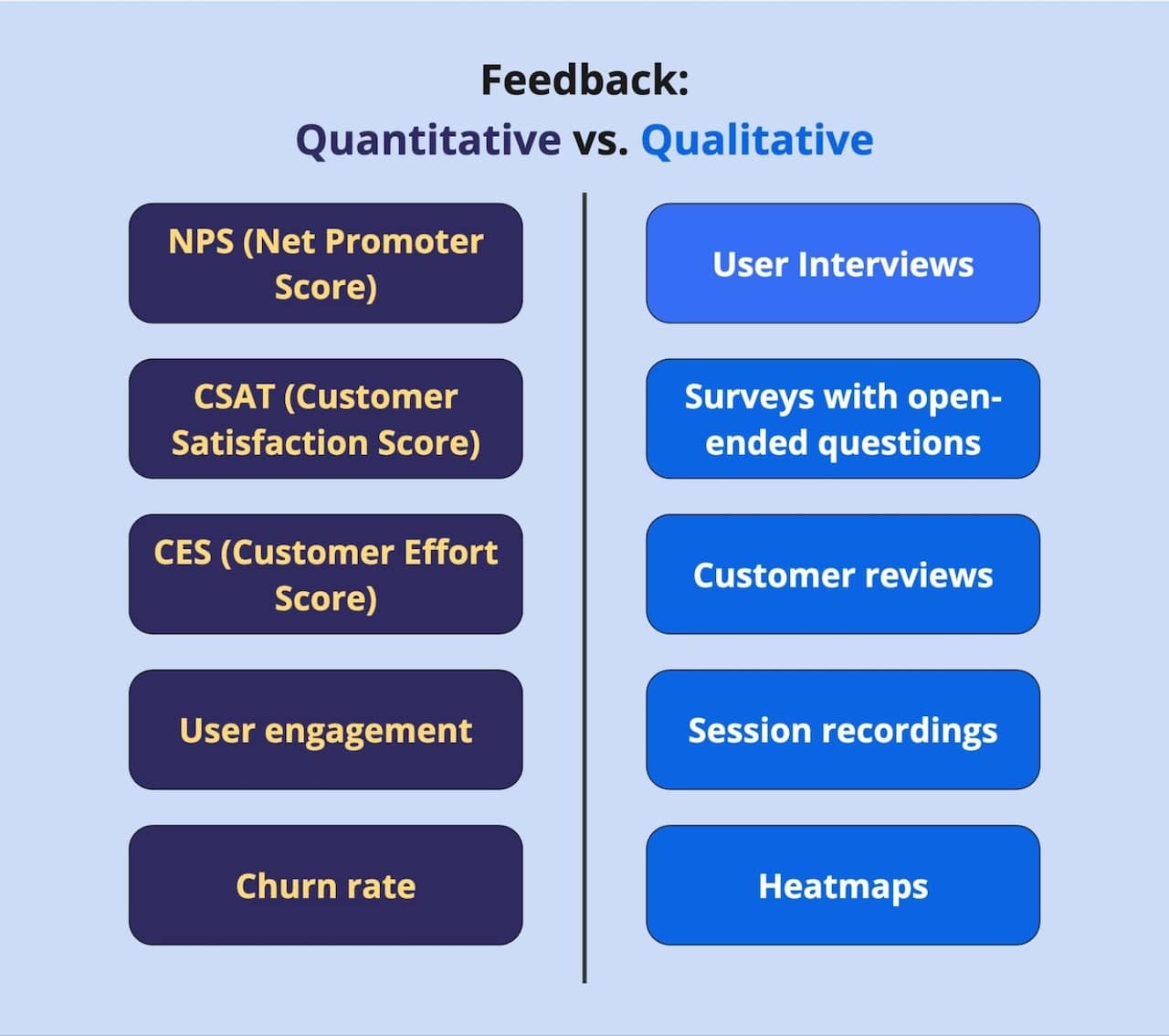

Quantitative vs Qualitative Feedback

There’s another classification that’s also good to keep in mind:

Quantitative feedback is the type of feedback that you can measure and count. All sorts of scores and ratings are quantitative.

Qualitative feedback is what you can’t measure. User interviews are purely qualitative. And reviews, for example, have both the quantitative part (the score that the users give) and the qualitative part – what the users had to say.

Both are important, but in different ways – quantitative feedback is a good measure of progress that you’re making, and qualitative feedback is something that you can use to learn more about what customers actually want or need.

Qualitative feedback tends to be much deeper than quantitative, but is harder to analyze.

📖Read a more detailed post about types of user feedback.

User Feedback Metrics

If you’re going to collect feedback and use it to improve customer experience, it’s likely you’ll want to measure the results of your work somehow.

There are plenty of user feedback and customer satisfaction metrics that you can use for that. Let’s quickly go through them.

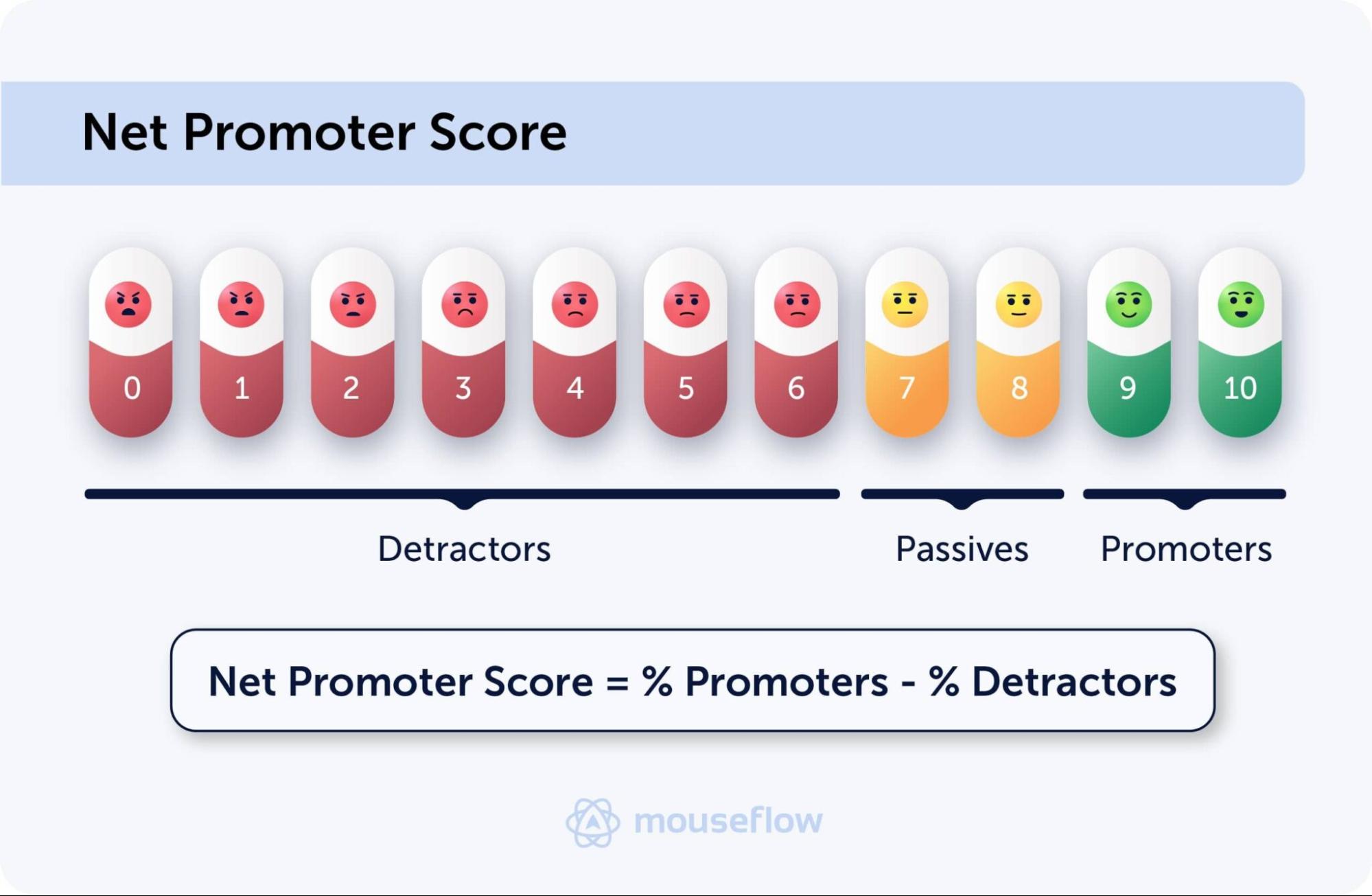

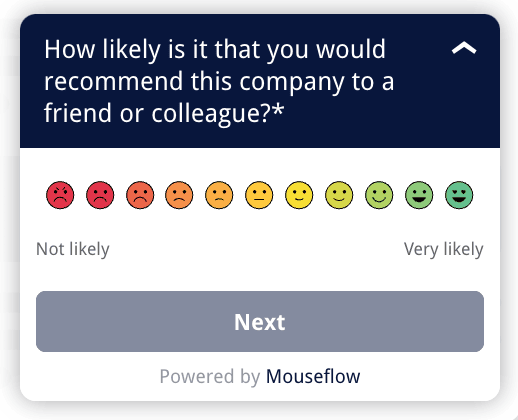

Net Promoter Score (NPS)

NPS was created to measure customer loyalty.

It’s based on answers to one question: “How likely are you to recommend a product/service to your friends or colleagues?” Users who answer 9 or 10 on a scale from 0 to 10 are called promoters, those who answer 7 or 8 are called passives, and those who answer 0–6 are called detractors.

NPS equals the percentage of promoters minus the percentage of detractors.
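To make the arithmetic concrete, here is a minimal Python sketch. It assumes you already have the raw answers as integers on the standard 0 to 10 scale. Passives (7 or 8) count toward the total number of answers but are neither promoters nor detractors, so the result always falls between -100 and 100.

```python
def net_promoter_score(answers: list[int]) -> float:
    """Compute NPS from answers on a 0-10 scale; the result ranges from -100 to 100."""
    if not answers:
        raise ValueError("at least one answer is required")
    if any(not 0 <= a <= 10 for a in answers):
        raise ValueError("answers must be between 0 and 10")
    promoters = sum(1 for a in answers if a >= 9)   # answered 9 or 10
    detractors = sum(1 for a in answers if a <= 6)  # answered 0 to 6
    # Passives (7 or 8) only affect the denominator.
    return 100 * (promoters - detractors) / len(answers)


# 5 promoters, 3 passives, 2 detractors -> 50% - 20% = 30
sample = [10, 9, 9, 10, 9, 8, 7, 7, 5, 3]
print(net_promoter_score(sample))  # 30.0
```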

Businesses often use NPS as one of their key performance indicators (KPIs) of how well they are working with the voice of the customer (VoC). NPS also indicates whether word-of-mouth is likely to work as an acquisition channel, since promoters do tend to recommend the product or service to other people.

Since it’s a very widely used metric, Mouseflow implemented a special type of survey to collect NPS and automatically calculate it.

💡Idea: Fire NPS surveys on order completion pages – the user managed to get through the whole process, but they may have encountered some friction or just have ideas on how to improve it.

📖Read more about NPS and its use cases

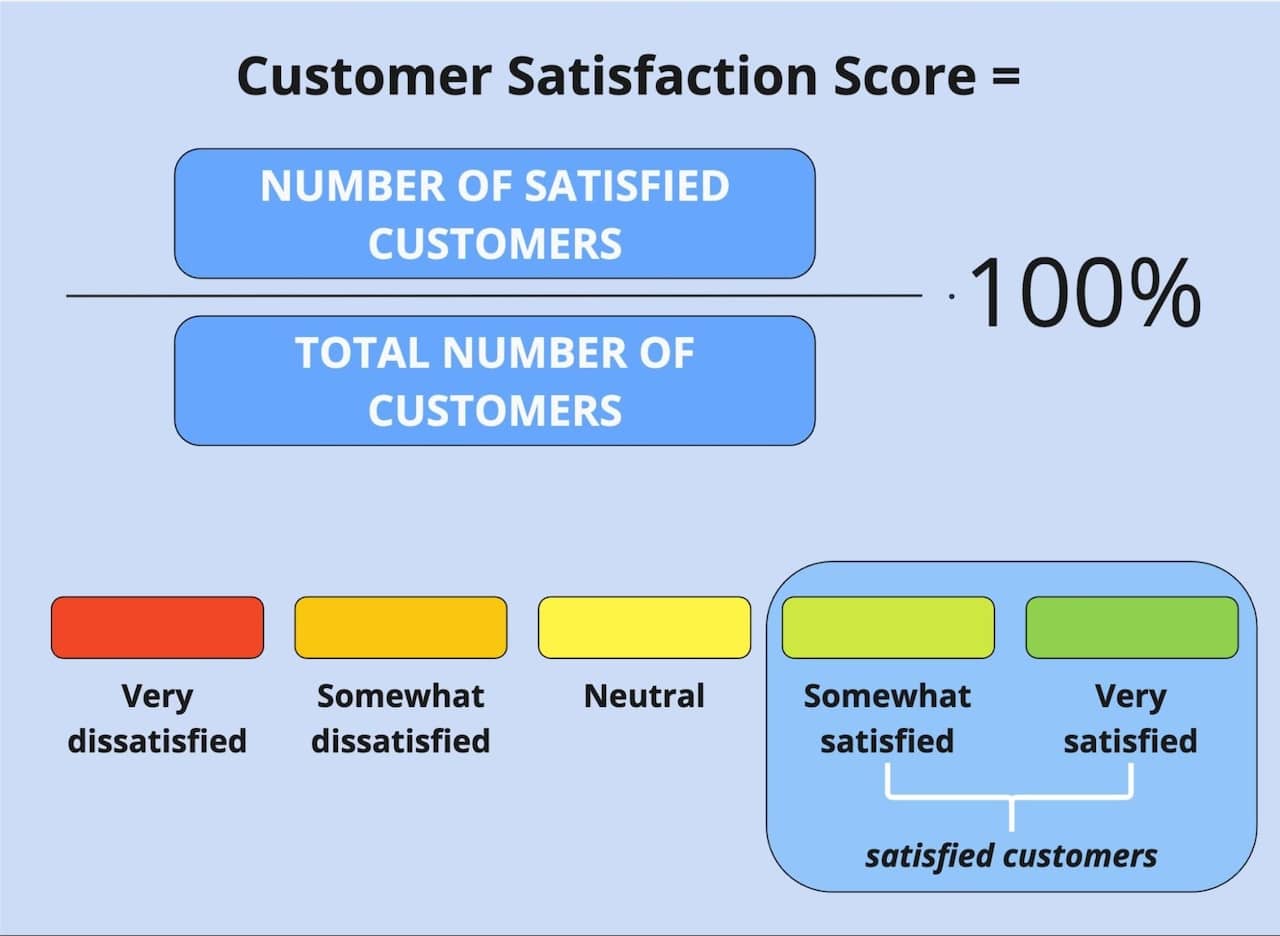

Customer Satisfaction Score (CSAT)

As its name suggests, CSAT was created to measure customer satisfaction.

It’s even simpler than NPS: users rate their experience on a scale from 1 to 5, where 5 stands for “very satisfied” and 1 for “very dissatisfied.” To calculate CSAT, take the number of answers with 4 or 5, divide it by the total number of answers, and multiply by 100%.
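As a minimal sketch, assuming the answers are available as integers on a 1 to 5 scale, the CSAT calculation looks like this in Python:

```python
def csat(answers: list[int]) -> float:
    """Percentage of answers that are 4 or 5 on a 1-5 satisfaction scale."""
    if not answers:
        raise ValueError("at least one answer is required")
    if any(not 1 <= a <= 5 for a in answers):
        raise ValueError("answers must be between 1 and 5")
    satisfied = sum(1 for a in answers if a >= 4)  # "satisfied" or "very satisfied"
    return 100 * satisfied / len(answers)


print(csat([5, 4, 5, 3, 2, 5, 4, 4]))  # 75.0 (6 of 8 answers are 4 or 5)
```

Note that neutral answers (3) pull the score down just like negative ones, because only 4s and 5s count as satisfied.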

CSAT is widely used to understand how satisfied users are either with the product, or with services such as support.

For instance, we use CSAT to evaluate how our support team is doing. This spring, Mouseflow support got a CSAT score of 98% from our users, which we consider a great result.

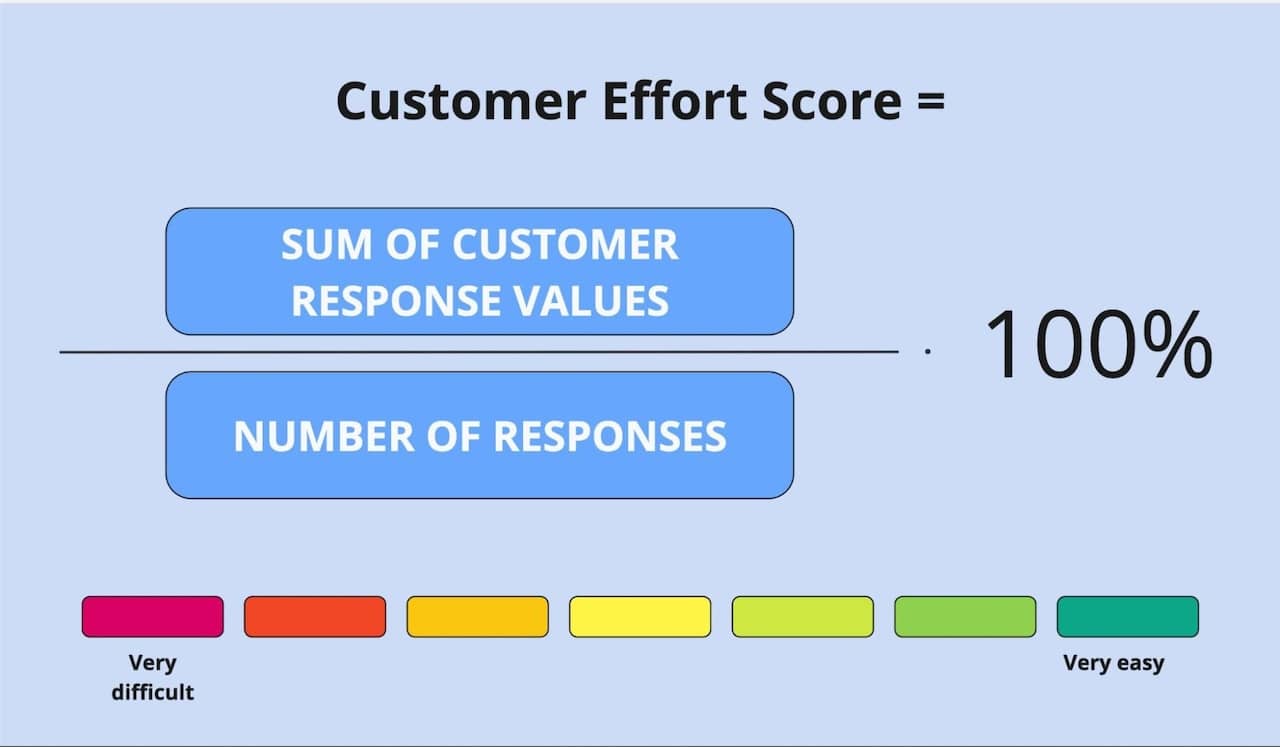

Customer Effort Score (CES)

CES was developed to measure the difficulty of a task.

Upon completing a certain task, the users get to answer how easy it was to complete it on a scale from 1 to 5, where 1 stands for “very difficult” and 5 for “very easy.” Sometimes the scale is between 1 and 7.

CES is calculated as the sum of all answers divided by the number of answers, i.e., the average answer. The higher the score, the easier it was for users to complete the task. A low score points to user experience problems and signals that something is counterintuitive.
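Here is a minimal Python sketch of the average-based calculation. The `scale_max` parameter is an illustrative addition that covers both the 1 to 5 and the 1 to 7 variants; keep the scale alongside the score, since a CES of 4.0 means something different on each.

```python
def customer_effort_score(answers: list[int], scale_max: int = 5) -> float:
    """Mean of ease ratings on a 1..scale_max scale; higher means easier."""
    if not answers:
        raise ValueError("at least one answer is required")
    if any(not 1 <= a <= scale_max for a in answers):
        raise ValueError(f"answers must be between 1 and {scale_max}")
    return sum(answers) / len(answers)


print(customer_effort_score([5, 4, 4, 2, 5]))            # 4.0 on a 1-5 scale
print(customer_effort_score([6, 7, 5, 3], scale_max=7))  # 5.25 on a 1-7 scale
```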

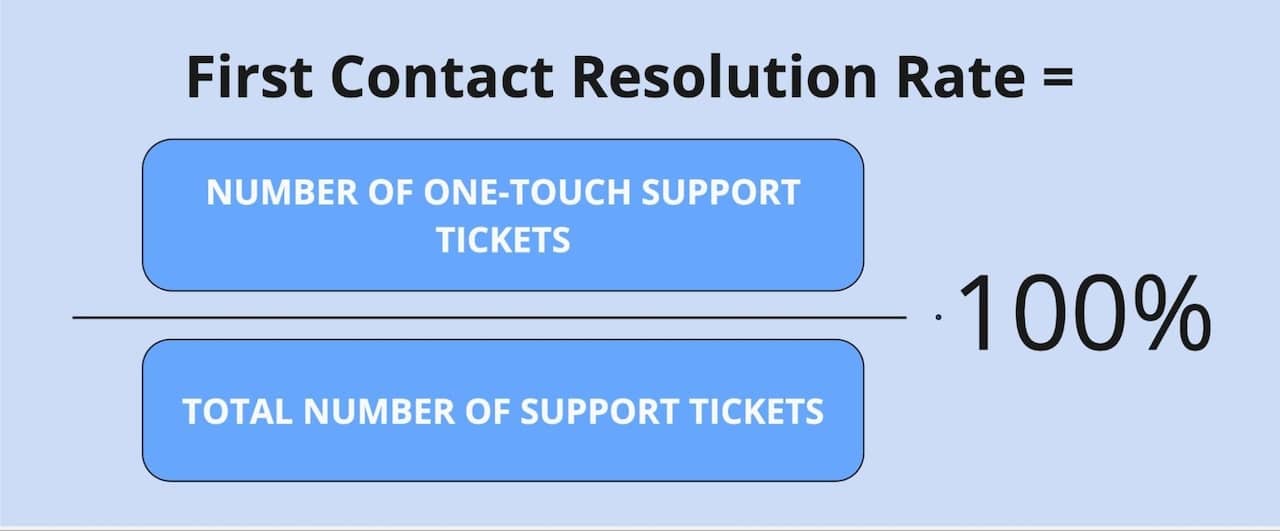

First Contact Resolution (FCR)

FCR is there to measure the quality of support.

When a customer reaches out to customer support, it usually makes them happy if just one answer from the support representative is enough to solve the problem. Helpdesk people call such cases one-touch tickets.

And that’s how FCR is calculated: the number of one-touch support tickets divided by the total number of tickets and multiplied by 100%.
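The following sketch assumes a hypothetical `Ticket` record that stores how many replies the support team sent before the ticket was resolved, and that only resolved tickets are passed in. Real helpdesk exports will use their own field names.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    ticket_id: str
    agent_replies: int  # hypothetical field: replies sent before the ticket was resolved


def first_contact_resolution(tickets: list[Ticket]) -> float:
    """Percentage of resolved tickets that needed exactly one reply (one-touch tickets)."""
    if not tickets:
        raise ValueError("at least one ticket is required")
    one_touch = sum(1 for t in tickets if t.agent_replies == 1)
    return 100 * one_touch / len(tickets)


tickets = [Ticket("T-1", 1), Ticket("T-2", 3), Ticket("T-3", 1), Ticket("T-4", 2)]
print(first_contact_resolution(tickets))  # 50.0
```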

A high FCR is a great thing for two reasons: happy customers (obviously) and efficient support that as a result has time for other activities such as writing documentation.

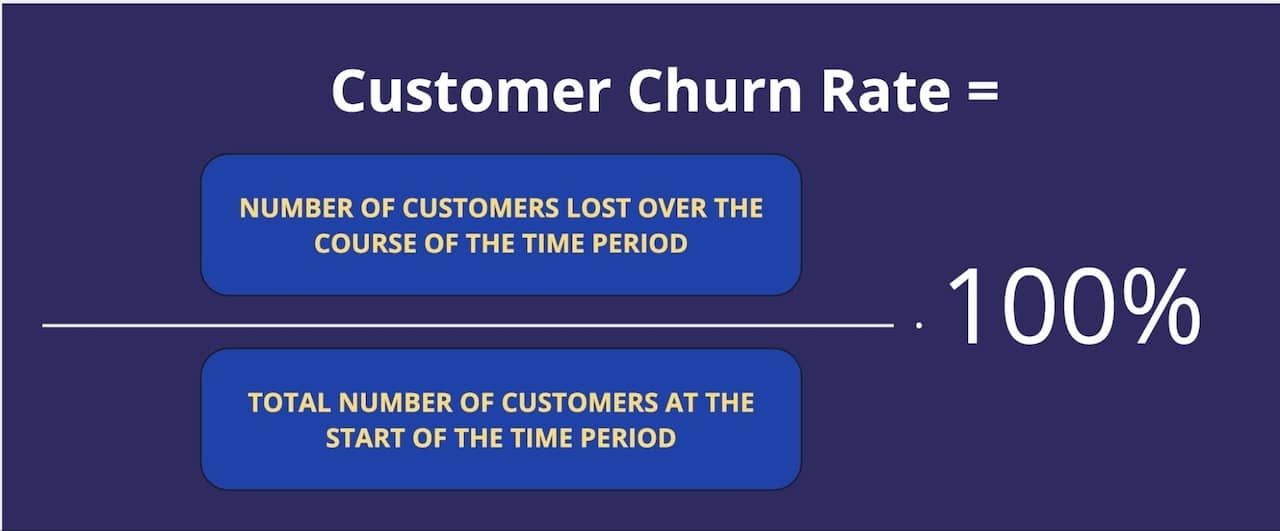

Churn Rate

Churn reflects the rate at which a business loses customers.

It’s calculated as the number of customers lost during a certain period of time divided by the total number of customers at the start of that period and multiplied by 100%.

It’s not based on direct feedback, but it’s an important customer experience metric no subscription-based business should overlook.

A company is growing only if its growth rate (number of new customers divided by the total number of customers at the beginning of the period and multiplied by 100%) is higher than the churn rate.
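Both rates use the customer count at the start of the period as the denominator, so they can be compared directly. A minimal Python sketch with illustrative numbers for one period:

```python
def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Customers lost during the period as a percentage of customers at its start."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return 100 * customers_lost / customers_at_start


def growth_rate(customers_at_start: int, new_customers: int) -> float:
    """New customers during the period as a percentage of customers at its start."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return 100 * new_customers / customers_at_start


# Illustrative numbers for one quarter
start, lost, new = 400, 20, 30
churn = churn_rate(start, lost)   # 5.0
growth = growth_rate(start, new)  # 7.5
print(f"churn {churn}%, growth {growth}%, growing: {growth > churn}")
# churn 5.0%, growth 7.5%, growing: True
```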

Human resources departments also often apply churn rate to employees. It helps them understand whether the company is really as great a place to work as it (like all companies) claims to be. High employee turnover is a signal that internal policies or compensation need re-evaluation.

📖Read more about churn and how better CX helps prevent it

Instead of churn rate, eCommerce businesses, which often don’t rely on repeat purchases, tend to calculate the cart abandonment rate – the percentage of shopping carts that end up abandoned.
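A minimal sketch of the cart abandonment rate, assuming each completed order corresponds to exactly one cart created in the same period:

```python
def cart_abandonment_rate(carts_created: int, orders_completed: int) -> float:
    """Percentage of shopping carts that never turned into a completed order."""
    if carts_created <= 0:
        raise ValueError("carts_created must be positive")
    if not 0 <= orders_completed <= carts_created:
        raise ValueError("orders_completed must be between 0 and carts_created")
    abandoned = carts_created - orders_completed
    return 100 * abandoned / carts_created


print(cart_abandonment_rate(1200, 360))  # 70.0
```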

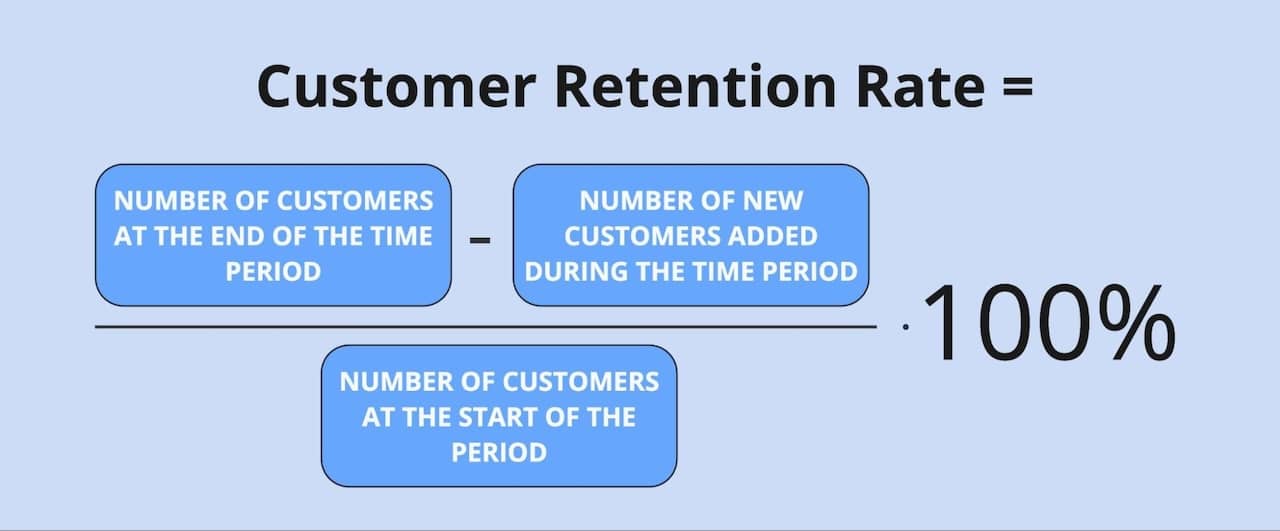

Retention Rate

Retention rate measures stickiness.

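It’s the percentage of customers who continue to use a product or service over a period of time. You can think of it as the flip side of churn rate: for the same period and the same starting group of customers, retention rate and churn rate add up to 100%.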
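One common way to compute retention is (E - N) / S × 100%, where S is the number of customers at the start of the period, E at the end, and N the number of new customers acquired during the period. Subtracting N keeps newly acquired customers from inflating the figure. A minimal Python sketch:

```python
def retention_rate(customers_at_start: int, customers_at_end: int, new_customers: int) -> float:
    """Share of the customers you had at the start who are still customers at the end."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    retained = customers_at_end - new_customers  # exclude customers acquired during the period
    if not 0 <= retained <= customers_at_start:
        raise ValueError("inconsistent customer counts")
    return 100 * retained / customers_at_start


# 400 customers at the start, 20 left, 30 joined -> 410 at the end
print(retention_rate(400, 410, 30))  # 95.0, i.e. 100% minus a 5% churn rate (20 / 400)
```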

The higher the retention rate, the longer customers stay with the company on average, and the more revenue they generate for it.

Which brings us to our next metric.

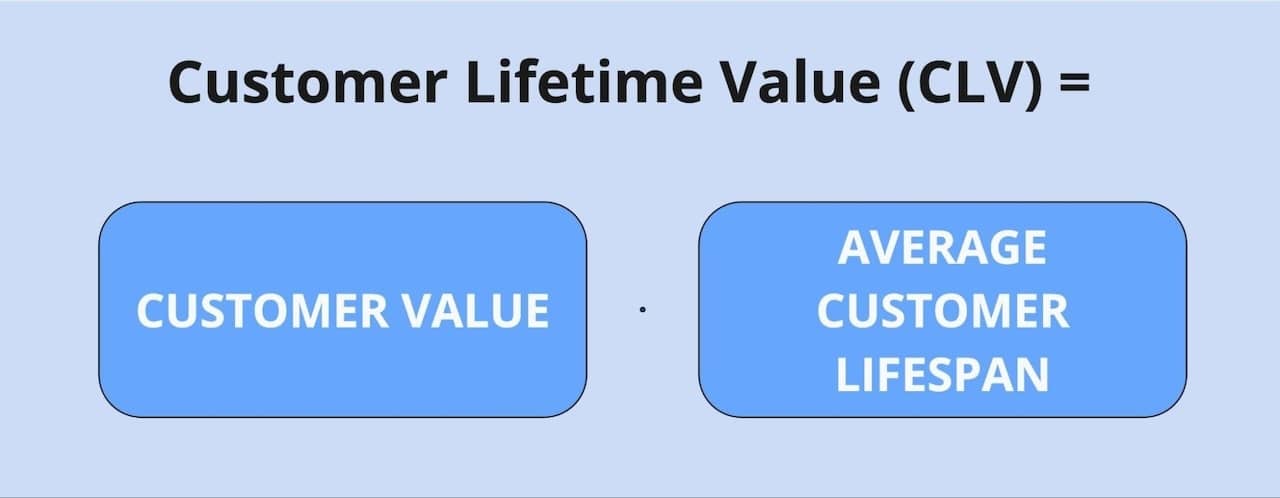

Customer Lifetime Value (CLV or CLTV)

CLV is a measure of the worth of a customer to the business.

It’s calculated as the revenue that came from a customer during the entire period when they’ve been doing business with the company. Again, it’s not a direct feedback metric, but it’s very important for every business.

Usually, businesses consider average CLV – either for all customers or for certain cohorts (like customers on a certain plan). The main reason to calculate it is to predict revenue.
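As an illustration, here is a minimal sketch that computes historical average CLV per cohort (revenue already earned, not a forecast) from a hypothetical list of payment records. Turning this into a revenue prediction would need further assumptions, such as expected retention.

```python
from collections import defaultdict

# Hypothetical payment records: (customer_id, cohort, amount)
payments = [
    ("c1", "basic", 100.0),
    ("c1", "basic", 100.0),
    ("c2", "basic", 50.0),
    ("c3", "pro", 300.0),
    ("c3", "pro", 300.0),
    ("c4", "pro", 600.0),
]


def average_clv_by_cohort(records: list[tuple[str, str, float]]) -> dict[str, float]:
    """Average historical revenue per customer, grouped by cohort."""
    revenue_per_customer = defaultdict(float)
    cohort_of = {}
    for customer_id, cohort, amount in records:
        revenue_per_customer[customer_id] += amount
        cohort_of[customer_id] = cohort  # simplification: the last cohort seen wins
    totals = defaultdict(float)
    counts = defaultdict(int)
    for customer_id, revenue in revenue_per_customer.items():
        cohort = cohort_of[customer_id]
        totals[cohort] += revenue
        counts[cohort] += 1
    return {cohort: totals[cohort] / counts[cohort] for cohort in totals}


print(average_clv_by_cohort(payments))  # {'basic': 125.0, 'pro': 600.0}
```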

Why is CLV among the feedback metrics? Well, because it certainly has a lot to do with customer experience – the better the CX, the longer the customer will stay, the higher the CLV. It’s the indirect feedback your customers are giving to you.

Low CLV is likely related to high churn or customers sticking with cheaper options – and these are both problems that require a solution if the business wants to stay afloat.

Customer Reviews and Ratings

Customer ratings measure customer satisfaction.

Basically, they are very similar to CSAT. The main difference is that ratings are usually published as an average on a scale from 1 to 5 (such as 4.7 stars) rather than as a percentage of satisfied answers.

The higher the rating, the more customers are satisfied with the product or service.
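Because the same 1 to 5 answers can be summarized either as an average rating or as a CSAT-style percentage, it helps to see the two side by side. A minimal sketch with made-up review scores:

```python
from statistics import mean

# Made-up star ratings from a review site, on a 1-5 scale
reviews = [5, 5, 4, 3, 5, 1, 4, 5]

average_rating = mean(reviews)  # the figure review sites usually display
csat_pct = 100 * sum(1 for r in reviews if r >= 4) / len(reviews)  # share of 4s and 5s

print(f"average rating: {average_rating:.1f} / 5")  # average rating: 4.0 / 5
print(f"CSAT: {csat_pct:.0f}%")                     # CSAT: 75%
```

The average is sensitive to how low the unhappy scores go (the single 1 drags it down), while the percentage only cares whether each answer crossed the "satisfied" line.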

For instance, Mouseflow has a rating of 4.7 on Capterra and 4.6 on G2 – most of our customers are happy with our product and support.

According to Gartner, 41% of potential customers in the B2B sector evaluate reviews of current customers. So, having good reviews is super important. Also, numerical ratings often come with words about what the user liked or disliked about the product or service. And these words are pure gold when it comes to further development, as fixing problems can positively influence retention, CLV, and many other metrics.

How to Collect User Feedback: Platforms and Tools

We partly covered this in the section on types of user feedback, but let’s go through the tools you can use for each type.

Collecting Direct Feedback

For collecting direct feedback, you can use a user feedback tool on your website or inside your customer experience platform.

Many behavior analytics platforms have a user feedback tool as a part of the toolset that they offer. Mouseflow has one, and it allows you to ask open-ended or closed questions and collect scores such as NPS or CSAT. It also allows you to trigger surveys on different events so that you can ask the user something at the right moment.

Mouseflow NPS survey example

Customer experience platforms like Intercom also have a built-in feature to collect user feedback, and it’s quite useful if you’re looking, for example, to evaluate the performance of your customer support.

When it comes to customer interviews, you can simply invite your customers to them through the same customer experience platform that you’re using. Alternatively, specialized platforms like Userlytics help with user testing, or conduct interviews with people matching your ideal customer profile (ICP) and summarize the findings for you.

Examples of Tools to Collect Direct Feedback

- Mouseflow

- Userpilot

- Survicate

Collecting Indirect Feedback

The best places to collect indirect feedback are social media platforms and review sites.

Users often go to social media to complain or share their experiences. That means you can look for posts or comments that mention your brand name and process them to get indirect feedback at scale.

There are social listening tools like Mention or Brandwatch that automate this process for you, looking across channels for mentions and also evaluating the sentiment – whether the feedback is positive or negative.

💡Pro tip: Set up social listening to collect feedback not only about your brand, but also about competitors.

💡Pro tip #2: Make sure this feedback gets across to the teams that can take action based on it such as the product team.

Review sites such as Capterra or G2 for B2B and Yelp or even Google Maps for B2C are another place where users go to share their opinions about products or services.

💡Pro tip: You can analyze the sentiment of these reviews at scale using AI. Here’s how to do sentiment analysis with ChatGPT.

Examples of Tools to Collect Indirect Feedback

- Mention

- Brandwatch

- Sprout Social

Collecting Inferred Feedback

Since inferred feedback comes in the form of session replays, heatmaps, and behavior patterns, you need a behavior analytics platform to collect all of that.

Most behavior analytics solutions offer session replays and heatmaps, and many supplement them with a feedback survey tool for collecting direct feedback, covering most of your feedback-related needs.

Mouseflow click heatmap example

📖Check out our list of the best session replay and heatmap tools to find the one that is right for you.

Quantitative analytics tools like Google Analytics 4 (GA4) can give you some behavior patterns for your website like bounce rate and average engagement time.

Some behavior analytics platforms can give you more than that. For instance, Mouseflow provides average scroll depth and friction score for each page on your website. These patterns can help you understand whether users like your content or have a subpar experience on some of the pages.

Examples of Tools to Collect Inferred Feedback

- Mouseflow

- Smartlook

- CrazyEgg

Question Formats for Feedback Surveys

What could you ask users, and what could the questions look like?

Contemporary feedback tools are flexible and let you create and process different types of questions:

- yes/no questions (Example: “Did you find what you were looking for? Yes / no”)

- picklists – picking an item from a list (Example: “What’s your company size? 1-50 / 51-200 / 201-1000 / over 1000 employees.”)

- open-ended questions (Example: “How can we improve our service?”)

- multiselect questions (Example: “What are your areas of interest? Please select one or more.”)

- rating scale (Example: “On a scale of 0 to 10, how likely are you to recommend our business to a friend or colleague?”)
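To show how these formats differ in the answers they accept, here is a minimal sketch of a hypothetical question model in Python. The `Kind` and `Question` names are illustrative and do not reflect any particular tool’s schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    YES_NO = "yes/no"
    PICKLIST = "picklist"
    OPEN_ENDED = "open-ended"
    MULTISELECT = "multiselect"
    RATING = "rating scale"


@dataclass
class Question:
    text: str
    kind: Kind
    options: list[str] = field(default_factory=list)  # used by picklist and multiselect
    scale: tuple[int, int] = (0, 10)                   # used by rating scale questions

    def accepts(self, answer: object) -> bool:
        """Return True if the answer has the right shape for this question type."""
        if self.kind is Kind.YES_NO:
            return answer in ("yes", "no")
        if self.kind is Kind.PICKLIST:
            return answer in self.options
        if self.kind is Kind.OPEN_ENDED:
            return isinstance(answer, str) and answer.strip() != ""
        if self.kind is Kind.MULTISELECT:
            return (
                isinstance(answer, (list, set))
                and len(answer) > 0
                and all(choice in self.options for choice in answer)
            )
        if self.kind is Kind.RATING:
            low, high = self.scale
            # bool is a subclass of int, so rule it out explicitly
            return isinstance(answer, int) and not isinstance(answer, bool) and low <= answer <= high
        return False


company_size = Question(
    "What's your company size?",
    Kind.PICKLIST,
    options=["1-50", "51-200", "201-1000", "over 1000"],
)
recommend = Question(
    "On a scale of 0 to 10, how likely are you to recommend our business to a friend or colleague?",
    Kind.RATING,
)
print(company_size.accepts("51-200"))  # True
print(recommend.accepts(11))           # False
```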

📖We have prepared a bank of 30 feedback survey questions that you can use. Check it out!

In that post, we also cover when it’s appropriate to ask each question and the exact triggers you could use when setting up the surveys.

Feedback Collection Tips and Best Practices

Now that we’ve discussed what to collect and the means of collecting it, it’s time to talk about how to do it. There’s no one-size-fits-all approach, but there are some best practices that are good to keep in mind when creating your customer feedback strategy.

Use Targeting and Segmentation

Direct feedback is most valuable when it comes from the right group of users at the right moment.

For instance, if you run an eCommerce store, you can ask the website visitors if they’ve found what they were looking for, but it only makes sense to ask those who didn’t make a purchase. So, for this feedback survey, it makes sense to segment those who haven’t made a purchase and fire the survey at them when they’re about to exit.

Or, if you want to know if your content is helpful, only ask those who have actually engaged with it – scrolled to a certain percentage of the page or spent some time on it.

You can use even more precise targeting like addressing only users who made a purchase of $100 or more, only returning customers, and so on. Targeting feedback surveys allows you to make your offering more personalized and improve areas where some users may not be having the best experience.
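The targeting rules above can be expressed as simple predicates over a visit. This is a minimal sketch, assuming a hypothetical `Visit` record; the thresholds (50% scroll depth, 30 seconds, $100) are illustrative, and real feedback tools configure such rules through their own settings rather than code like this.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    made_purchase: bool
    exit_intent: bool        # e.g. the cursor heads for the browser's close button
    scroll_depth_pct: float  # how far down the page the visitor scrolled
    seconds_on_page: float
    order_value: float = 0.0


def ask_if_found_what_they_needed(v: Visit) -> bool:
    # Only non-buyers who are about to leave
    return not v.made_purchase and v.exit_intent


def ask_if_content_helpful(v: Visit, min_scroll: float = 50.0, min_seconds: float = 30.0) -> bool:
    # Only visitors who actually engaged with the content
    return v.scroll_depth_pct >= min_scroll or v.seconds_on_page >= min_seconds


def ask_high_value_buyer(v: Visit, min_order: float = 100.0) -> bool:
    # Only customers whose purchase reached the threshold
    return v.made_purchase and v.order_value >= min_order


browser = Visit(made_purchase=False, exit_intent=True, scroll_depth_pct=20, seconds_on_page=12)
reader = Visit(made_purchase=False, exit_intent=False, scroll_depth_pct=75, seconds_on_page=95)
buyer = Visit(made_purchase=True, exit_intent=False, scroll_depth_pct=40, seconds_on_page=60, order_value=140.0)

print(ask_if_found_what_they_needed(browser), ask_if_content_helpful(browser))  # True False
print(ask_if_found_what_they_needed(reader), ask_if_content_helpful(reader))    # False True
print(ask_high_value_buyer(buyer))                                              # True
```

Keeping each rule as a separate predicate makes it easy to combine them, for example asking only engaged non-buyers.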

Use Multiple Sources and Types of Feedback

Feedback is evidence that can support a hypothesis. The more evidence there is, the better. And if different pieces of evidence point to the same issue, even better: the issue is then more likely to be real. Some evidence may also hint at the fixes needed to deal with the issue.

So, when collecting feedback, don’t settle on one source – use multiple sources, ideally from different types of feedback.

And to up your game one more level, don’t settle on just feedback when collecting evidence for a hypothesis. Quantitative analytics, revenue data, error logs – there’s so much other data that you can use to support your ideas.

💡Pro tip: watch session recordings of the users that interacted with your feedback widget – that’ll provide additional context to their answers.

Establish Feedback Loops

Continuous improvement driven by customer feedback relies on a feedback loop. You collect feedback, act on it, collect more feedback to learn what impact your changes had, act again based on the new data, and so on.

The important thing here is to make sure that the feedback loop is actually a loop – that you’re not just collecting feedback, but acting on it. And also that you’re not only acting, but also collecting feedback about your actions’ impact.

Having feedback loops allows you to iterate based on data you get from users, and it’s the healthiest way to build something that’s actually valuable.

“A core component of Lean Startup methodology is the build-measure-learn feedback loop.”

Get All Your Feedback in One Place

If you’re collecting different kinds of feedback, you’re likely using different platforms for that. A common side effect is that the collected data stays in its respective platforms, so you can’t get a holistic overview of it or compare findings from different sources.

But there’s much more value in getting it all into one place – a database or a dashboard – so that you can spot trends and correlations in the different types of data.

👉Example:

Say your users are complaining directly about problems with the checkout. Your session replays show that click errors appear when website visitors try to use a certain payment method. And your form analytics data shows a pattern: people often skip the “agree to terms and conditions” checkbox.

Drawing a correlation between the three gives you an aha moment: “They don’t see the checkbox, so they don’t accept the terms and conditions, and the form doesn’t submit and throws a click error. That’s why they are unhappy!”

Without getting this data together, it may be hard to understand what’s going on, but once you do it, the picture may quickly become much clearer.
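The checkout example can be mimicked with a toy script. This minimal sketch assumes each tool’s export has already been reduced to hypothetical `(page, detail)` pairs, merges them by page, and flags pages where at least two independent sources point to a problem.

```python
from collections import Counter, defaultdict

# Hypothetical exports from three separate tools, reduced to (page, detail) pairs
survey_complaints = [
    ("/checkout", "can't finish my order"),
    ("/checkout", "payment keeps failing"),
    ("/blog", "too many pop-ups"),
]
replay_click_errors = [("/checkout", "click error on 'Pay now'")]
form_skipped_fields = [("/checkout", "terms and conditions checkbox left unchecked")]


def signals_by_page(**sources: list[tuple[str, str]]) -> dict[str, Counter]:
    """Merge records from several sources into page -> {source name: count}."""
    merged = defaultdict(Counter)
    for source_name, records in sources.items():
        for page, _detail in records:
            merged[page][source_name] += 1
    return merged


def corroborated_pages(merged: dict[str, Counter], min_sources: int = 2) -> list[str]:
    """Pages where at least `min_sources` different sources point to a problem."""
    return sorted(page for page, counts in merged.items() if len(counts) >= min_sources)


merged = signals_by_page(
    surveys=survey_complaints,
    replays=replay_click_errors,
    forms=form_skipped_fields,
)
print(corroborated_pages(merged))  # ['/checkout']
print(dict(merged["/checkout"]))   # {'surveys': 2, 'replays': 1, 'forms': 1}
```

Counting distinct sources rather than raw complaints is a deliberate choice here: three different tools agreeing on one page is stronger evidence than many complaints from a single channel.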

Ask Unbiased Questions

When collecting direct feedback, it’s very easy to accidentally add a suggestion to the question, which would impact the results that you get.

The question “Was your website experience pleasant?” suggests that the experience is supposed to be pleasant by default, and users may unconsciously lean toward saying yes. If you ask “How would you rate your website experience?” instead, they may give a lower mark because the question no longer nudges them.

For the very same reason, it’s best to avoid stuffing two questions into one. The question “Are you satisfied with our website experience and products?” is likely to get biased answers because the likelihood of users being satisfied with at least something is higher than not being satisfied with anything.

Don’t Overwhelm the Users with Feedback Collection

Once a practitioner experiences the power of user feedback, they may become so excited that they try to collect as much feedback as possible, creating long surveys, placing feedback widgets on every page, and bombarding the users with questions over email.

But too many attempts to solicit feedback can result in user frustration – they’ll start abandoning the surveys, ignoring the widgets, unsubscribing from emails, and in the worst case, switching to a competitor.

Another problem with too much feedback is the difficulty of processing it. Too much data can be very discouraging, and too much feedback results in diminishing returns.

So, only solicit feedback when you are sure there’s value in it, and stick to shorter surveys instead of longer ones to improve their completion rate.

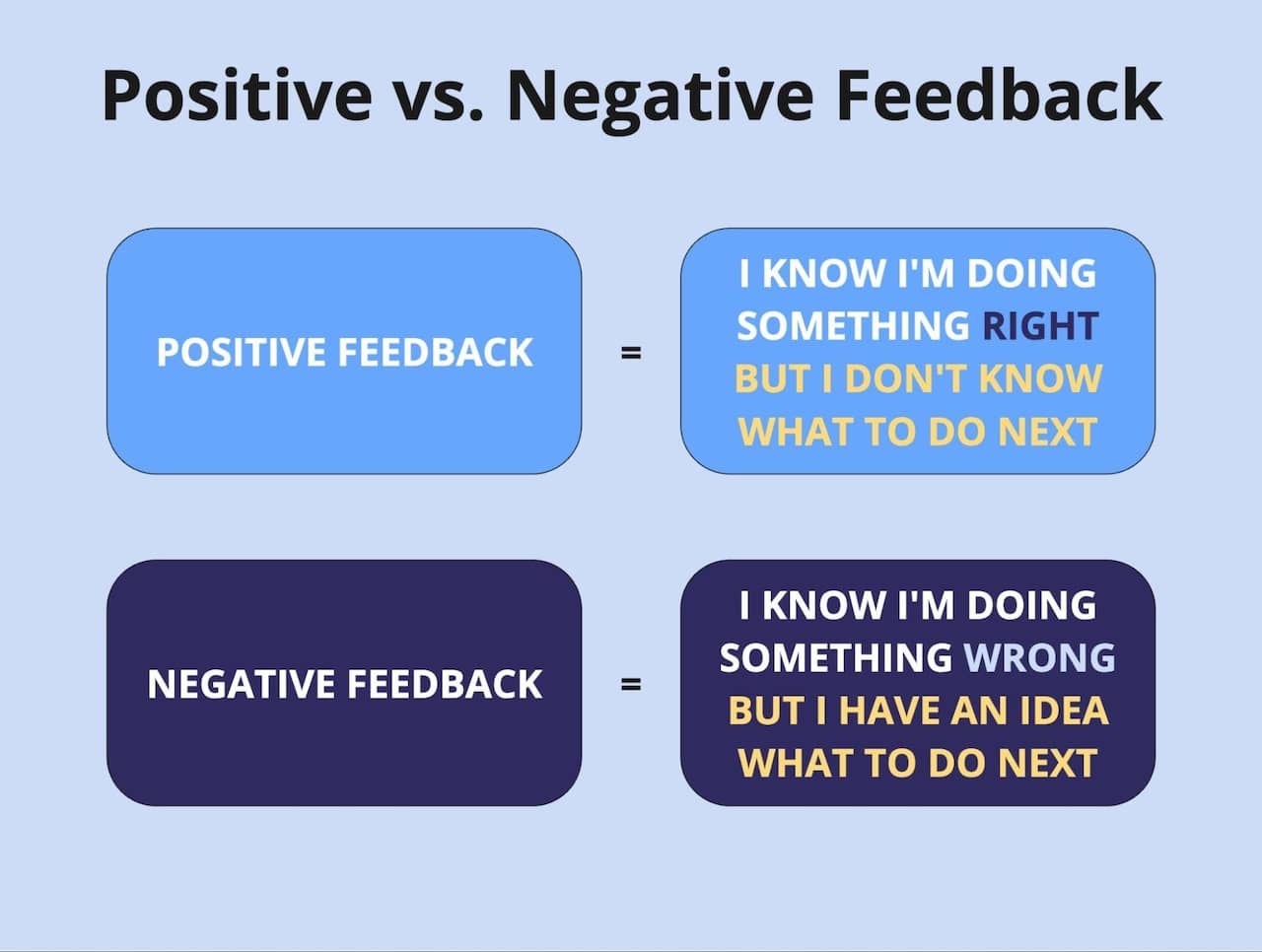

Don’t Be Discouraged By Negative Feedback

Sometimes getting negative feedback feels like a cold shower, and not in a good sense. But in fact, negative feedback is more actionable than positive.

When you get positive feedback, the good news is that the users liked what you did. But what do you do next?

On the other hand, when you get negative feedback, it means the changes you made didn’t resonate, but the feedback likely also contains some information about why, and maybe even about how to get it right.

So, more often than not, getting negative feedback is more useful than basking in the rays of users’ admiration.

Use Automation and AI to Analyze Feedback

After you start collecting user feedback across several channels, it may seem overwhelming – analyzing it and distilling actionable insights takes so much time!

You can save this time by using AI to analyze the feedback for you. Of course, it will miss some things. Yet, in our experience, it performs well on several feedback-related tasks:

- gauging the sentiment,

- giving estimated CSAT scores to text reviews,

- creating summaries.

📖Read this post to learn more about when to collect feedback and whom to target.

Conclusion and Further Learning

It’s hard to overestimate the power of user feedback. The Voice of the Customer is the force that drives business decisions in every company that wants to prosper.

Collecting and analyzing feedback allows you to deliver what your customers actually want and successfully navigate change management.

We have some more content that we recommend taking a look at if you want to continue exploring the topic of feedback:

- Check out this on-demand webinar with Mouseflow’s Head of Growth Eddie Casado, where he talks about the different business use cases for Voice of the Customer.

- Watch a video that explains how to create a feedback survey with Mouseflow.

- Read how collecting user feedback helped the global apparel brand Cotopaxi build a conversion rate optimization roadmap.

- Feedback is an important data source for CRO hypotheses. Read more about how to create a hypothesis for a test based on data.