What if it were possible to squeeze more revenue out of the traffic you’re bringing to your website? It likely is. This process is referred to as conversion rate optimization (CRO), but in fact, CRO is much more than just that.

Improving the user experience here and simplifying the user journey there can increase the percentage of visitors who get to the next step and eventually become customers. The more convenient, trustworthy, and straightforward your offering seems to be, the more visitors are likely to convert.

But you can also apply CRO to the product, improving customer retention rates. Or to email communications, improving the open rate. Experts say that CRO is so powerful that it can change organizational cultures or help establish the scientific method in business.

In this guide, we’ll be taking an in-depth look at conversion rate optimization – what it is, why you should care, how you do it, and every other CRO-related thing.

Here’s what you’ll learn:

- What is conversion rate optimization (CRO)

- What CRO isn’t

- Why you (and everybody else) should care about CRO

- How to get buy-in for a CRO program from stakeholders

- The entire CRO process

- What CRO tools you will need

- CRO case studies: how other companies do it

Let’s start with a formal, traditional definition.

What is Conversion Rate Optimization?

Conversion rate optimization (often called CRO for short) is a process of modifying the elements on a number of website pages with the goal of increasing conversion rate, effectively helping website visitors achieve their goals.

Well, that’s the classical definition of CRO – one you are likely to hear from a digital marketing manager or an ecommerce manager that has CRO as one item in their long list of duties.

The actual CRO consultants prefer a somewhat different approach to CRO, which doesn’t focus that much on conversions per se. They usually use the term “Experimentation” for it, and it’s more science than anything else. If we stick to their approach, the definition would look a lot different.

What is Experimentation?

Experimentation (sometimes still referred to as CRO by some) is the process of testing data-driven hypotheses by running experiments.

These experiments (or tests) involve changing some elements of a product with the goal of learning as much as possible about its audience, their desires and behavior patterns, and optimizing the product for them.

One of the usual outcomes of this process is increasing conversion rates.

With this definition, CRO is very different from just squeezing out another 0.5% sales from the website.

- Experimentation’s one and only pillar is the scientific method: trying to prove or disprove a hypothesis by running an experiment, rather than relying on opinions.

- Experimentation is more of a mindset or a culture, rather than just a discipline.

- Experimentation spans beyond the website – one can apply it to pretty much anything. Shiva Manjunath, Senior Web Product Manager, CRO at Motive, went as far as to optimize his breakfast this way.

- CRO’s main goal is not improving conversions, but learning something new and valuable. Improving conversions is a byproduct of this process.

What Conversion Rate Optimization Isn’t

If we keep sticking to the professional approach, then conversion rate optimization sheds a lot of things that some consider CRO-related.

Changing some elements on a website without running a test isn’t really conversion rate optimization. Or rather, that’s one of the most typical CRO mistakes.

Running tests without well-researched hypotheses backed by data also isn’t conversion rate optimization. CROs call it spaghetti testing.

Looking only at the experiment’s results without trying to understand why they look like this isn’t really CRO either, because you’re not learning anything this way.

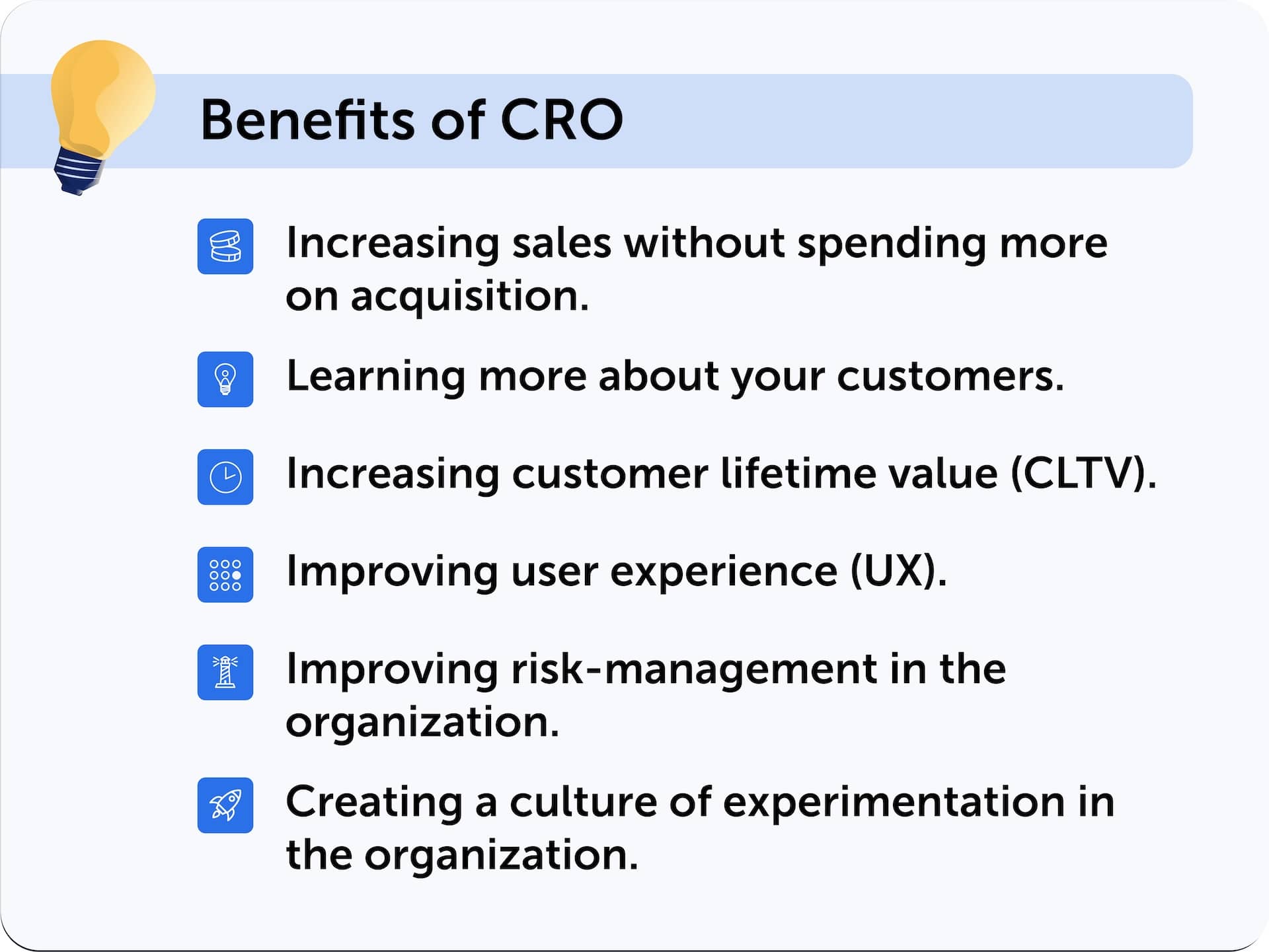

Why Should You Care? (The Benefits of Conversion Rate Optimization)

1. Increasing Sales Without Spending More on Acquisition

In marketing and ecommerce, saying “We want to increase sales” often means “We plan on paying more for ads.” But CRO improves conversions, i.e. what happens after the acquisition stage. With it, you can get more out of the same amount of traffic.

2. Learning More About Your Customers

The more experiments you run, the more you understand what matters to your customers, what they like, what they dislike, and how they think. This allows you to create products and services that work better for them. That helps in getting a better product market fit.

3. Increasing Customer Lifetime Value (CLTV)

You can apply CRO not only to acquisition, but to other stages of the customer journey as well – testing and optimizing the products that you offer to customers and the ways you communicate with them. Because you keep learning about your customers, you can make significant improvements. And the better the product suits them and the more value they can get out of it, the longer they’ll keep using it.

4. Improving User Experience

A lot of tests are aimed at removing obstacles from the users’ journey. That basically means improving their user experience (UX), or product experience (PX) if we’re talking about a product.

5. Improving Risk-Management in the Organization

Instead of always relying on leaps of faith, you can test if something works or not. If it has a positive effect, it stays; if the effect is negative, you just stick with the previous version. That way, you significantly reduce the risks that the organization is taking.

6. Creating a Culture of Experimentation in the Organization

As we said, CRO is not only about conversions. It’s about developing the culture of testing every change – in marketing, in product, in logistics – and choosing the variation that proves to be more effective. Usually, a CRO program hatches somewhere in marketing or in product, but the wider it spreads across the organization, the better.

Eric Ries’s famous “The Lean Startup” is all about adopting the experimentation mindset.

Getting Buy-in for a CRO Program

If you weren’t already convinced that CRO is great and that you need to implement it in your organization, hopefully you are now. But there’s a problem – now you need to convince the stakeholders in the organization.

Usually, the best way to do so is by showing them a return on investment (ROI).

Let’s take a look at a simple example and do some basic math.

Conversion Rate Optimization ROI Example

Say, you pay $10,000 for traffic acquisition each month, bringing 20,000 sessions to your website. It results in 400 sales, say, at $100 each, generating you $40,000 revenue, which becomes $30,000 after you deduct the traffic acquisition costs.

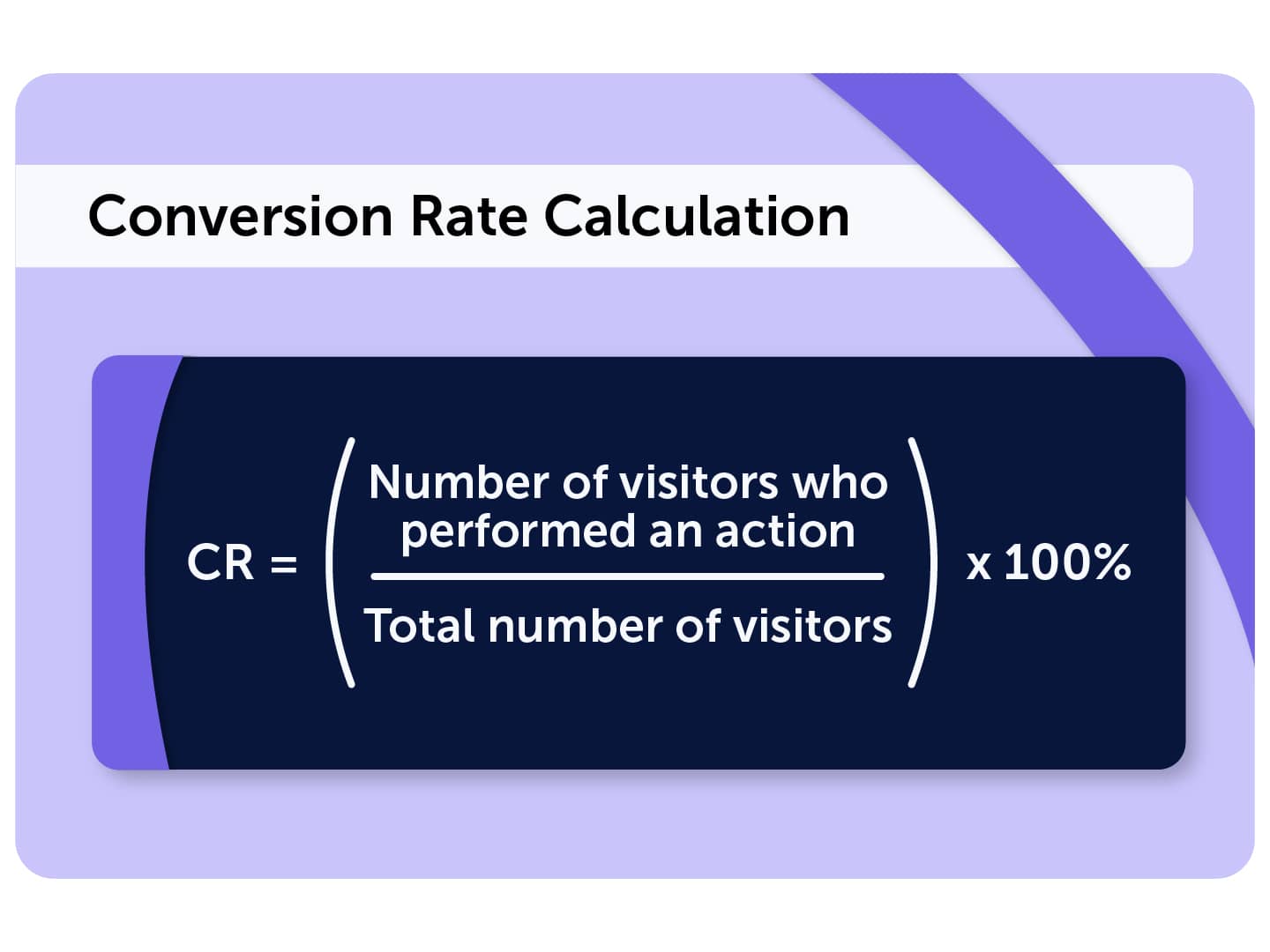

In this imaginary setup, your conversion rate (number of visitors who performed an action divided by the total number of visitors and multiplied by 100%) is 2%.

Now, let’s imagine you analyzed your website, found out some places where users struggled, ran a few experiments, and as a result, managed to improve the conversion rate by 10%, to 2.2%. And you didn’t spend a single additional penny on traffic acquisition.

That means that with the same $10,000 and 20,000 sessions, you’re now getting 440 sales at the very same $100, which now makes you $44,000. And it leaves you with $34,000 after deducting traffic acquisition costs.

As a result, these few experiments helped you earn about 13% more after acquisition costs ($34,000 instead of $30,000), even though the conversion rate itself grew by only 10%. And you didn’t have to spend more! Communicating something like this to stakeholders can help you secure their support for your CRO efforts.
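If you want to rerun this math with your own numbers, a few lines of Python are enough. This is only a sketch of the example above: the function name `monthly_result` is ours, and it assumes a fixed order value and a fixed monthly acquisition cost.

```python
def monthly_result(sessions, conversion_rate, order_value, acquisition_cost):
    """Return (sales, revenue, revenue after acquisition costs) for one month."""
    sales = round(sessions * conversion_rate)
    revenue = sales * order_value
    return sales, revenue, revenue - acquisition_cost


# Figures from the example: 20,000 sessions, $100 per sale, $10,000 spent on traffic.
before = monthly_result(20_000, 0.020, 100, 10_000)
after = monthly_result(20_000, 0.022, 100, 10_000)

# Relative growth of what is left after paying for traffic.
uplift = (after[2] - before[2]) / before[2]

print("before:", before)
print("after: ", after)
print(f"uplift after acquisition costs: {uplift:.1%}")
```

Because the acquisition cost stays the same, a 10% lift in conversion rate turns into a bigger relative lift in what you keep.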

By the way, 10% conversion rate improvement is quite achievable. For example, this is what a global ecommerce brand RAINS achieved, running experiments and optimizing their website.

CRO Process and Best Practices

Conversion Rate Optimization Framework

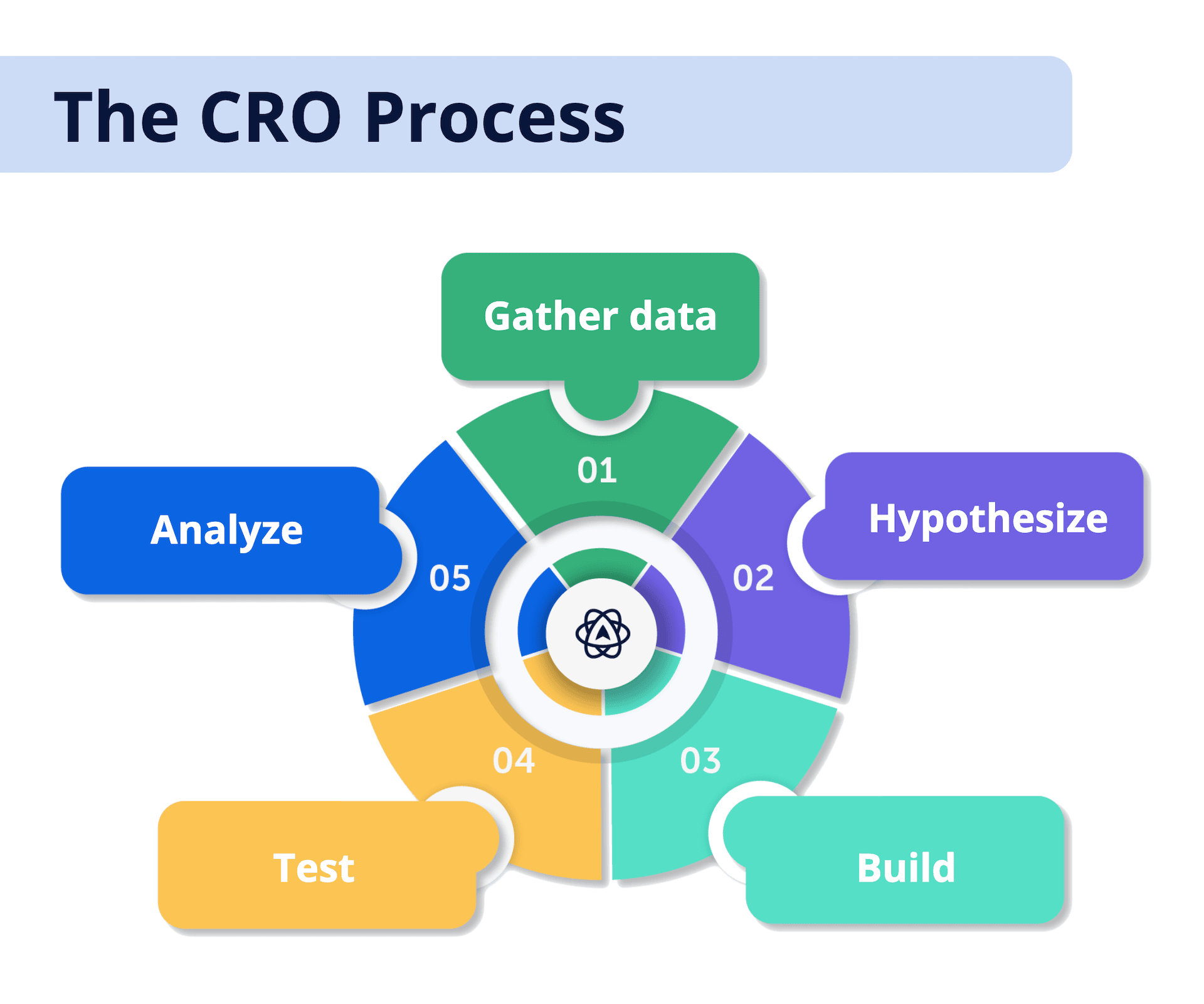

CRO is a very scientific discipline, so it’s no surprise that it relies heavily on the scientific method.

What do scientists do? They analyze a lot of data, talk with other scientists, and based on this data, they come up with an idea. Then, they design experiments that will prove or disprove that idea and run them.

Finally, they analyze the results, and as they do, they try to understand why the experiment was a success or a failure, and if changing something can change the outcome as well.

They talk to other scientists some more, and come up with a new idea based on data and analysis of the experiment and input from colleagues. They again test and analyze. And so on, and so forth. This is how science has been going for ages.

The product experimentation framework, which is likely familiar to most people who have ever worked in startups, is the same.

Similarly, CRO professionals analyze website data from different sources and come up with a hypothesis. They then try to prove or disprove this hypothesis by running a test (usually an A/B test, but there are other variations of CRO tests, which we’ll get to in time). Then they analyze the results and come up with a new hypothesis.

So, be it science, product development, or optimizing conversions on a website, the framework is the same:

Gather data -> Hypothesize and Document -> Test -> Analyze -> Repeat

1. Gathering Data

There’s a huge problem in CRO, and it’s about what people test. The usual source of a hypothesis is thin air. Or, perhaps, someone’s ideas about best practices that they heard from another person.

There are actually two problems with testing such hypotheses:

- The chance of the test being conclusive is low. It’s hard to reach statistical significance when testing random things.

- You don’t really learn about your customers. When you test random things, you only learn if the random thing worked or not. Usually, such tests don’t give you an idea about why it happened.

To have a higher probability of success and to provide you with valuable learnings, experiments need to be informed by data. Which means you need to have this data and analyze it first.

Data sources for CRO

The most typical data sources for CRO are:

- Quantitative analytics (like Google Analytics 4 or Adobe Analytics for websites, Gainsight PX for product analytics, and so on). In the end, you’ll need to quantify the improvement that your test achieved, so you’ll need some quantitative tool.

- Behavior analytics (session replay and heatmap tools like Mouseflow for websites, or Posthog for products)

- User feedback (that could be Mouseflow again – it can handle different types of surveys)

- User testing and user interviews (think usertesting.com or Userlytics)

2. Hypothesis and Documentation

Making sure that you are collecting data and have something to analyze is a prerequisite for developing hypotheses. Now, you can jump right to it. Of course, we have a blog post for you, where we go really deep into how to generate a hypothesis and how to document it.

Read more about CRO hypotheses and how to use data to generate them.

Here, let’s focus on some additional aspects.

Prioritization

Most likely, the data you’ve gathered and the initial analysis will be enough to generate a lot of hypotheses that you could potentially test. To actually start somewhere, it’s good to prioritize them.

There are a lot of prioritization frameworks that different CRO experts use. Here, we’ll briefly explain 3 of them:

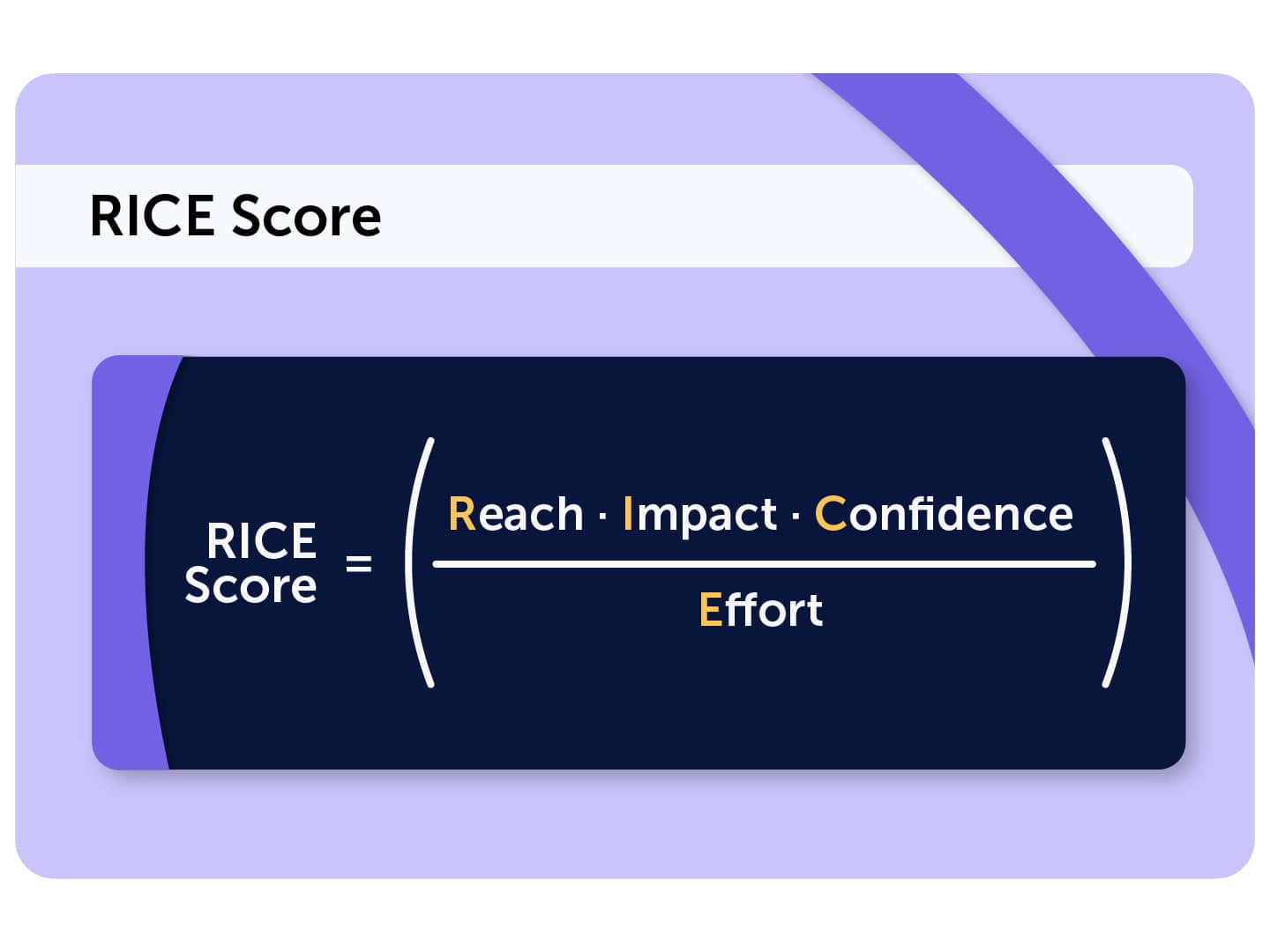

1) RICE Scoring

RICE stands for Reach, Impact, Confidence, and Effort. You estimate each of these 4 factors on a scale from 1 to 10, and then calculate the RICE score as Reach * Impact * Confidence / Effort. The higher the score, the higher the priority.

RICE scoring is great for projects with a lot of quantifiable data, and its main benefit is that as a result you get a clear list of prioritized experiments. But it’s very subjective because estimations of parameters often don’t have data behind them.
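Here is a minimal sketch of RICE scoring as described above, with every factor rated from 1 to 10. The hypotheses and their ratings are made up for illustration, and the `Hypothesis` class is our own naming, not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    reach: int       # each factor is rated on a 1-10 scale
    impact: int
    confidence: int
    effort: int

    def rice(self) -> float:
        # RICE score = Reach * Impact * Confidence / Effort
        return self.reach * self.impact * self.confidence / self.effort


def prioritize(hypotheses):
    """Sort hypotheses from highest to lowest RICE score."""
    return sorted(hypotheses, key=lambda h: h.rice(), reverse=True)


# Hypothetical backlog, for illustration only.
backlog = [
    Hypothesis("Shorter checkout form", reach=8, impact=6, confidence=7, effort=3),
    Hypothesis("New pricing page layout", reach=5, impact=9, confidence=5, effort=8),
    Hypothesis("Reviews on product pages", reach=9, impact=4, confidence=8, effort=2),
]

for h in prioritize(backlog):
    print(f"{h.rice():7.1f}  {h.name}")
```

Since Effort is the divisor, a cheap experiment with moderate impact can outrank an expensive one with high impact.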

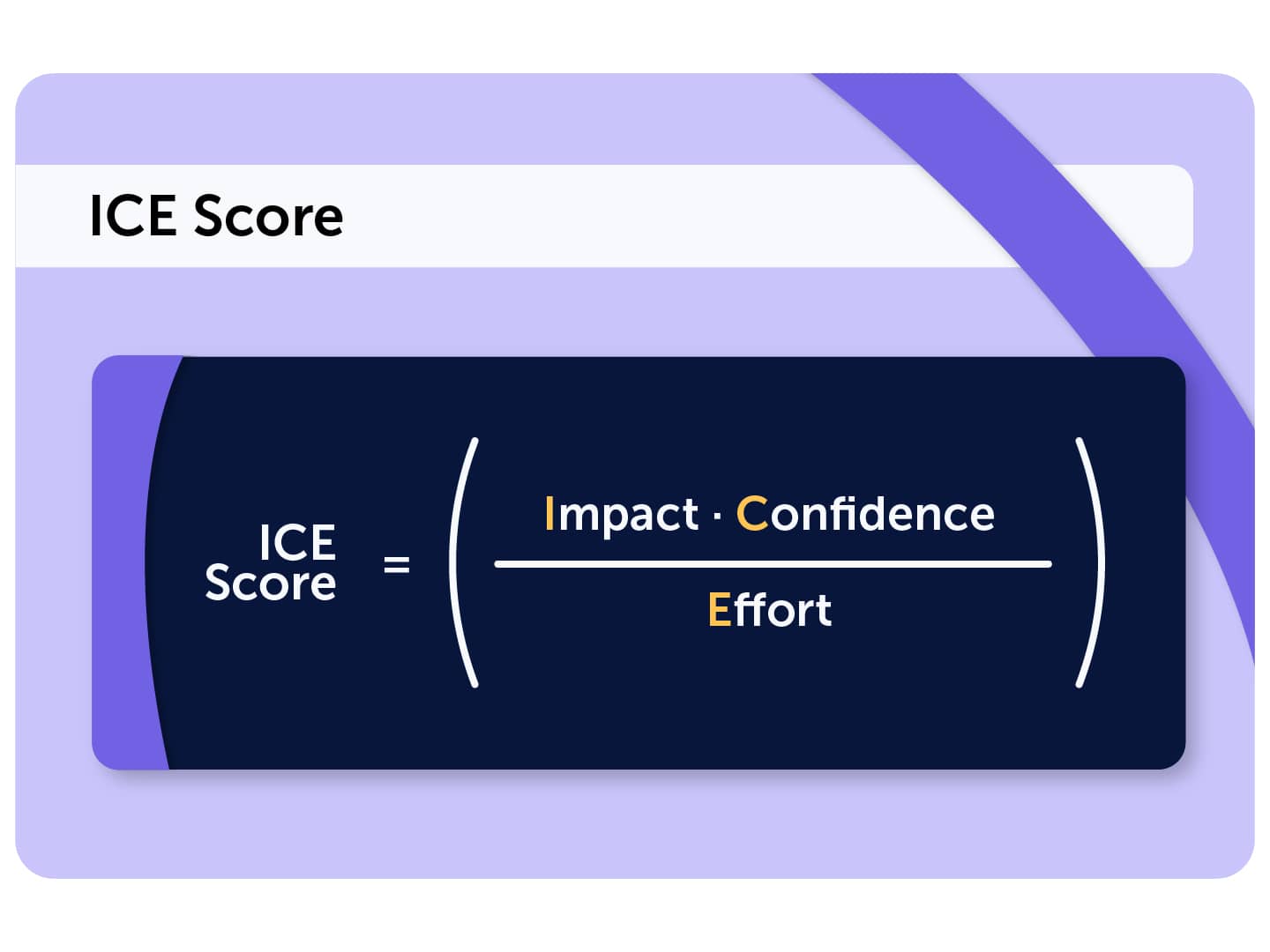

There’s a simplified version of RICE Score that doesn’t consider reach. It’s called – don’t be surprised – ICE score. Because of its simplicity, we used this one in our eCommerce Optimization Checklist, which, by the way, could be a list of worthy hypotheses for ecommerce businesses.

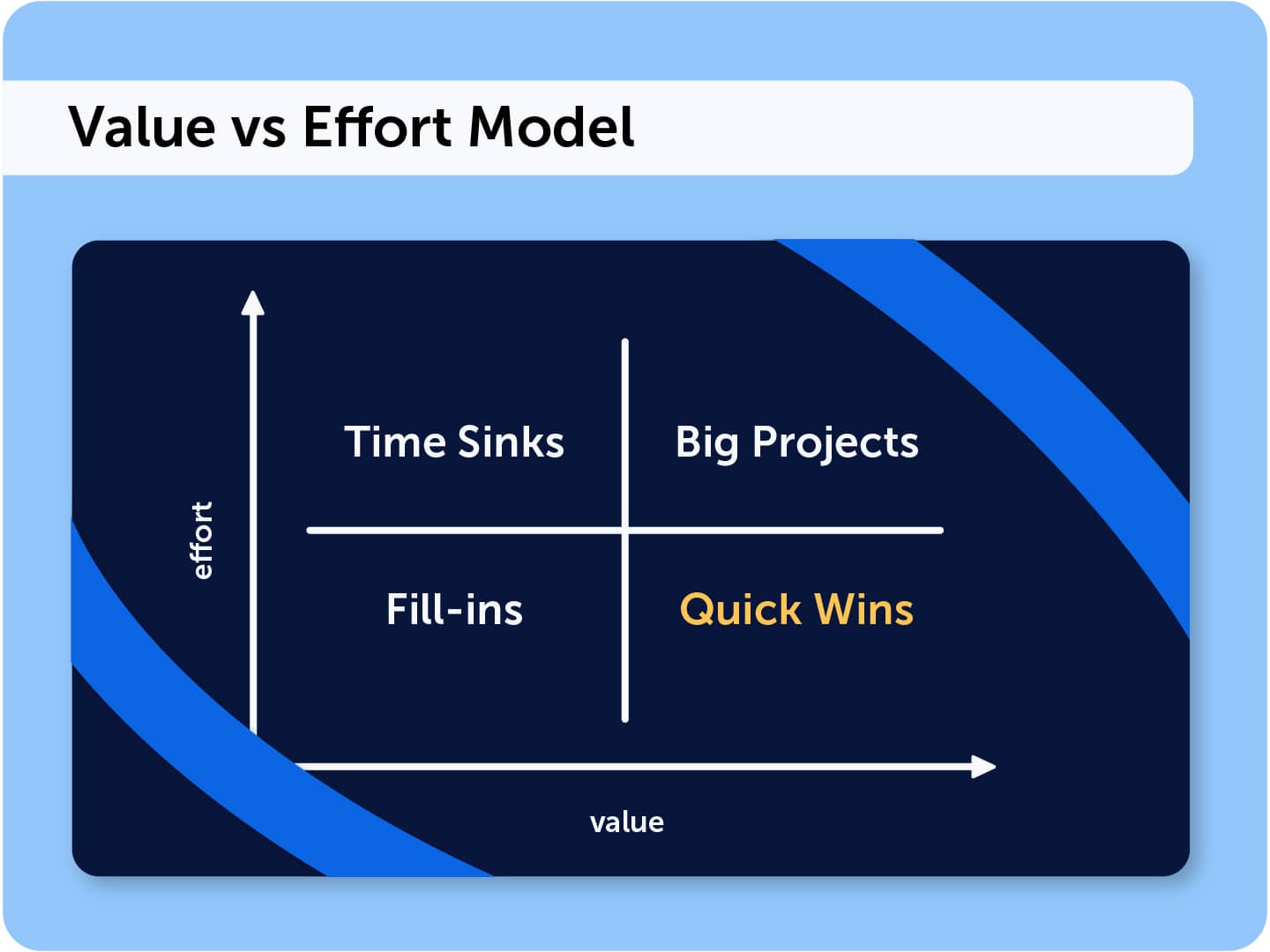

2) Value vs Effort Model

In the Value vs Effort model, you evaluate hypotheses based on the value they provide versus the effort required to implement them. Then, you plot them on a chart with Value and Effort axes and focus on the quadrant with high value and low effort.

This framework is simple and helps simplify decision making by getting rid of hypotheses with low value or those that require too much effort. There’s a downside to it too: when chasing low-effort high-value experiments, it’s easy to overlook ones with high-effort and very high value that could potentially lead to a breakthrough.
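The quadrant logic can be sketched in a few lines. We assume here that both value and effort are rated from 1 to 10 and split at the midpoint; the scale, the midpoint, and the quadrant labels are our own illustrative choices, not part of the model itself.

```python
def quadrant(value: int, effort: int, midpoint: float = 5.5) -> str:
    """Place a hypothesis into one of four Value vs Effort quadrants."""
    high_value = value > midpoint
    high_effort = effort > midpoint
    if high_value and not high_effort:
        return "test first"        # high value, low effort
    if high_value and high_effort:
        return "plan carefully"    # potential breakthroughs live here
    if not high_value and not high_effort:
        return "nice to have"      # low value, low effort
    return "skip"                  # low value, high effort


# Hypothetical ratings, for illustration only.
ideas = {
    "Fix unclickable product image": (8, 2),
    "Rebuild checkout flow": (10, 9),
    "Change footer link color": (2, 1),
    "Custom 3D product viewer": (3, 9),
}

for name, (value, effort) in ideas.items():
    print(f"{quadrant(value, effort):15s} {name}")
```

Keeping a separate label for high-value, high-effort ideas is one way to avoid the blind spot mentioned above: they don’t get tested first, but they don’t get thrown away either.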

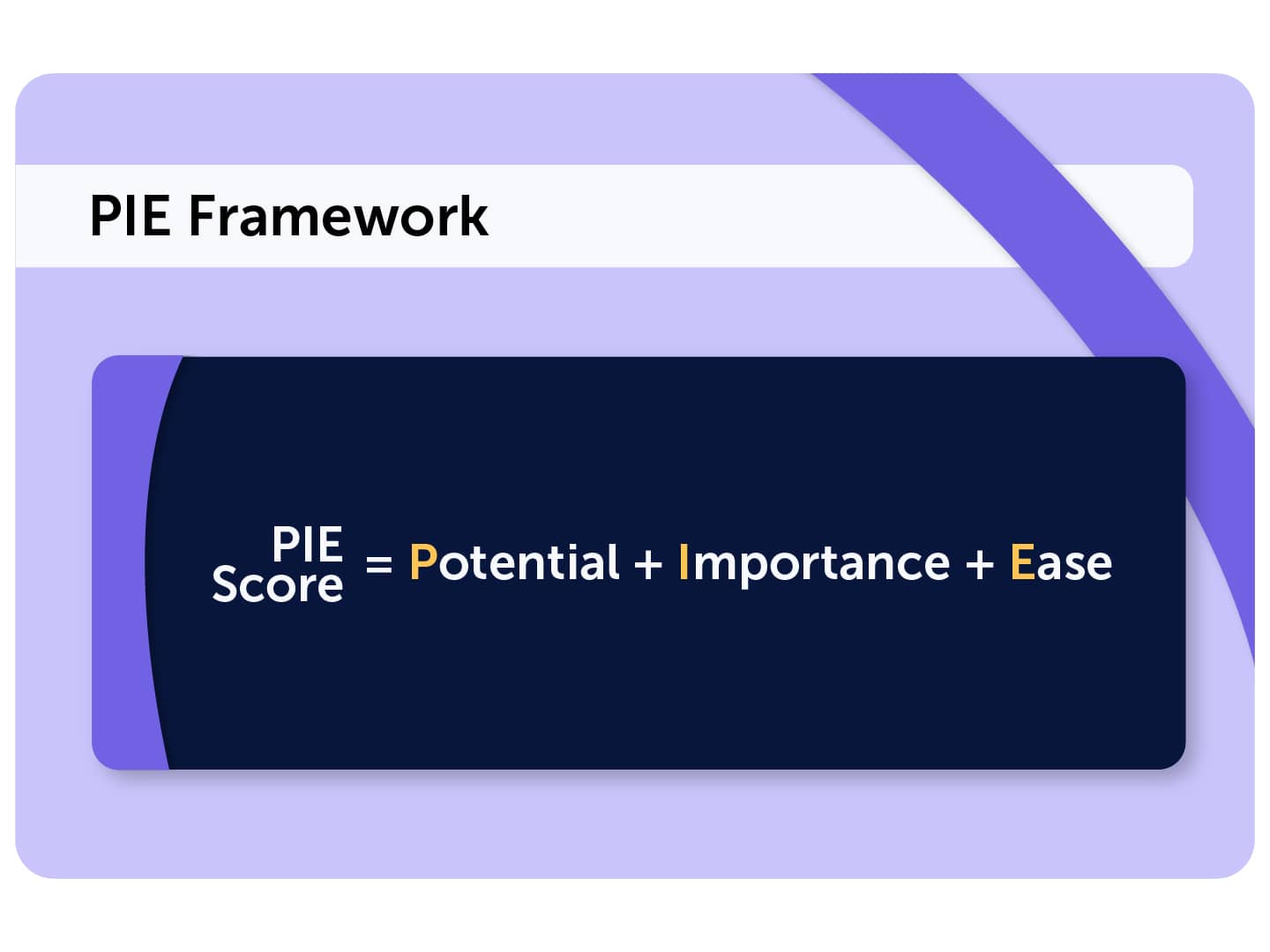

3) P.I.E. Framework

P.I.E. stands for Potential, Importance, and Ease. You rate each hypothesis on these three dimensions to determine its priority.

The P.I.E. framework is relatively straightforward and easy to implement. But, just like RICE, it’s prone to subjectivity, potentially leading to inconsistent scoring.

Choosing the framework is a very subjective thing – the only thing we can recommend is starting with simpler ones and seeing if they work for you.

Planning and Documentation

Before jumping straight to testing, it’s important to document everything so that it’s easier to evaluate the experiment afterwards.

Define the scope of the experiment, stakeholders, participants, conversion metrics that you want to improve, and other metrics that you should keep an eye on.

By the way, revenue is not a good main metric for experiments, even if it may be the end goal. The main metric should be about adding value to the customer – it could be sign-ups, successful cart checkouts, etc. Revenue doesn’t add value to customers.

One thing that you need to mention in the documentation is the type of experiment that you want to run. Let’s quickly go through the most popular types.

Types of CRO experiments

While in most cases by “testing” CRO specialists mean “A/B testing,” there are in fact more variations of tests that you can run. Here are the most common ones:

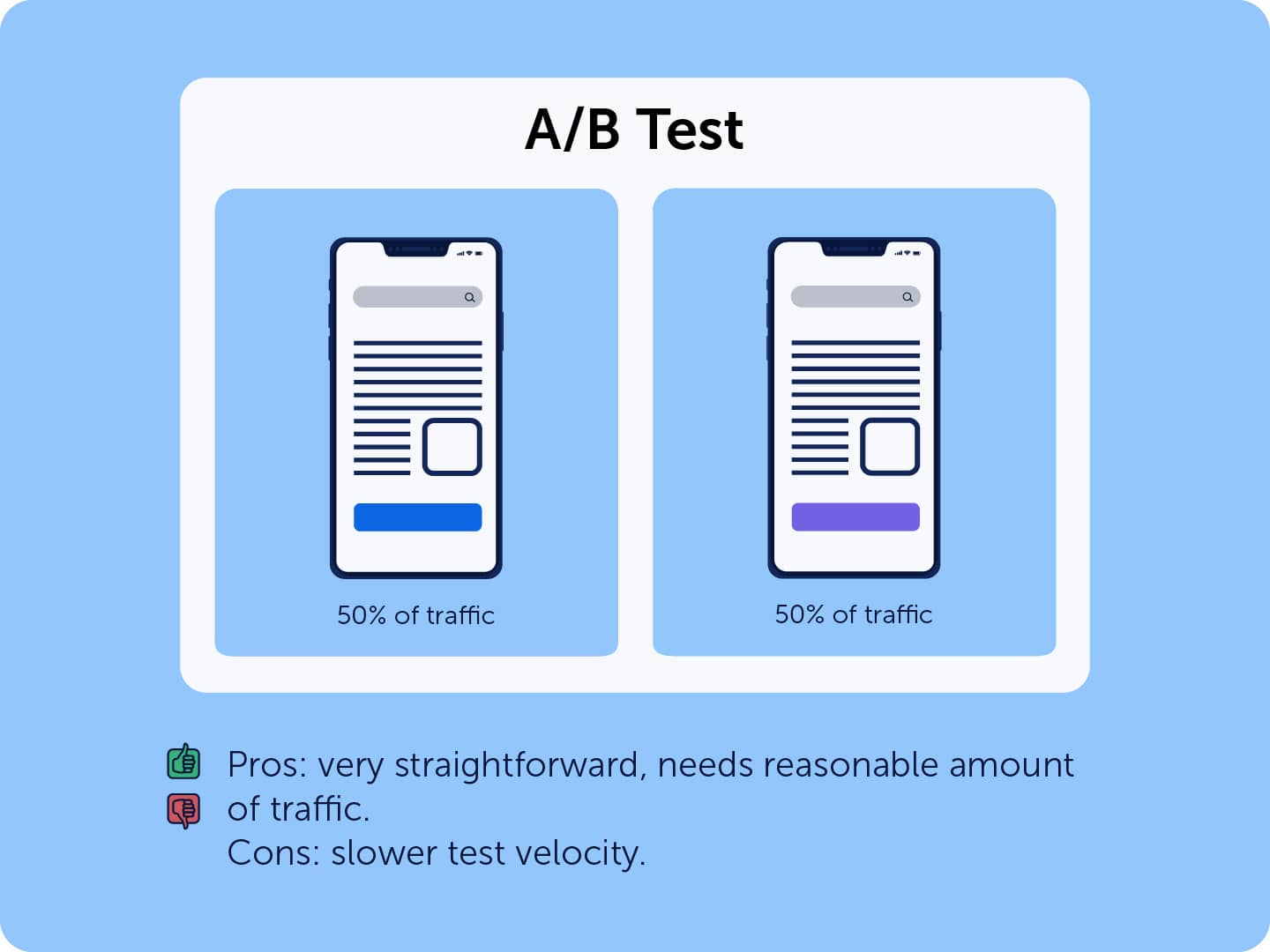

- A/B testing: The most frequently used method, where you compare two versions of a page (A and B) to determine which performs better.

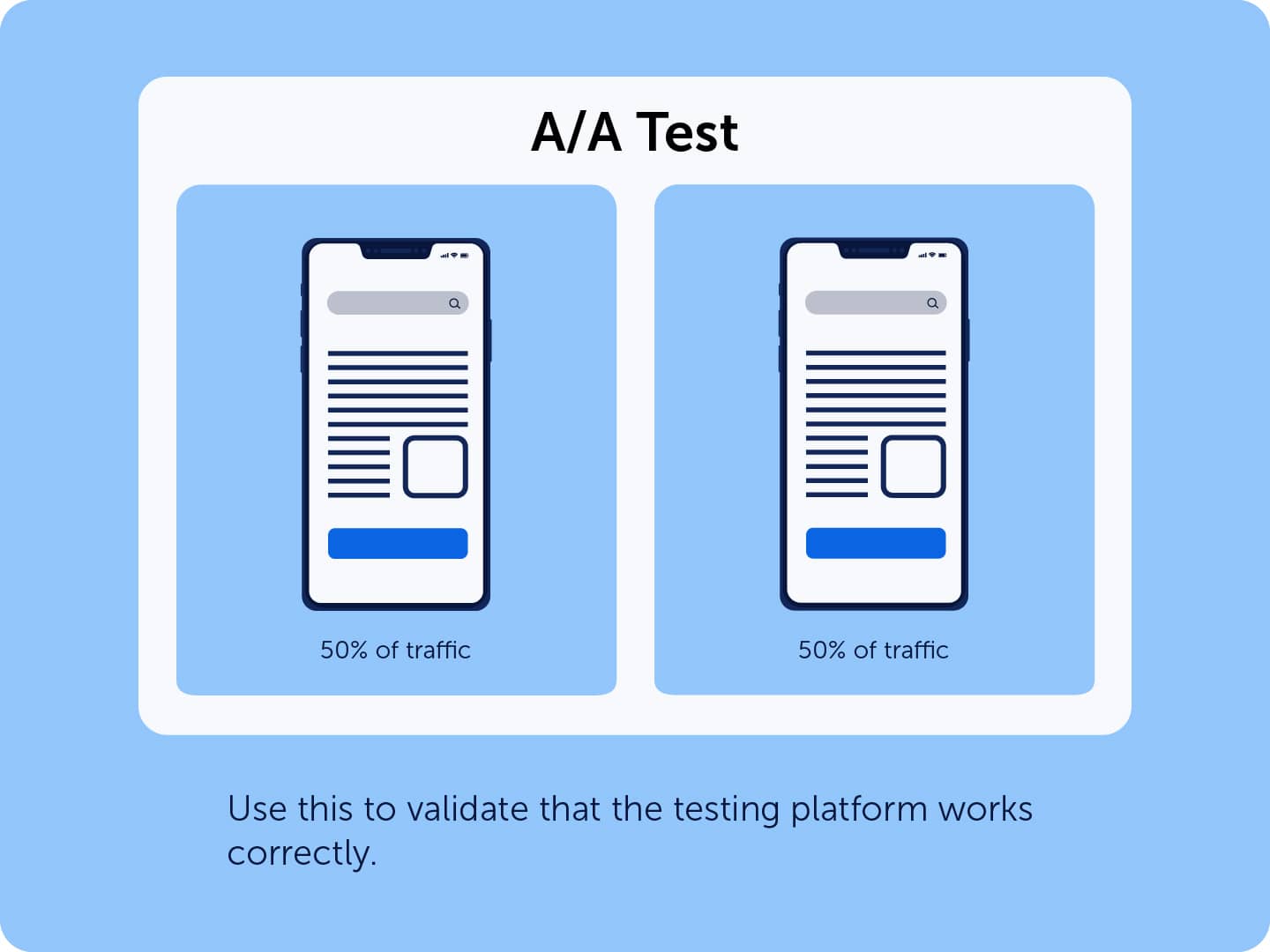

- A/A testing: This tests the same page against itself to verify that the testing tools and configurations are set up correctly. Run an A/A test before your first real experiment to make sure that your real tests will provide reliable results.

- Split URL testing: This type is very similar to A/B testing, but each variation has its own URL, while in A/B testing visitors get to the same URL, but the page appears differently for them. Choose split testing over A/B testing when making significant changes that may require separate hosting environments.

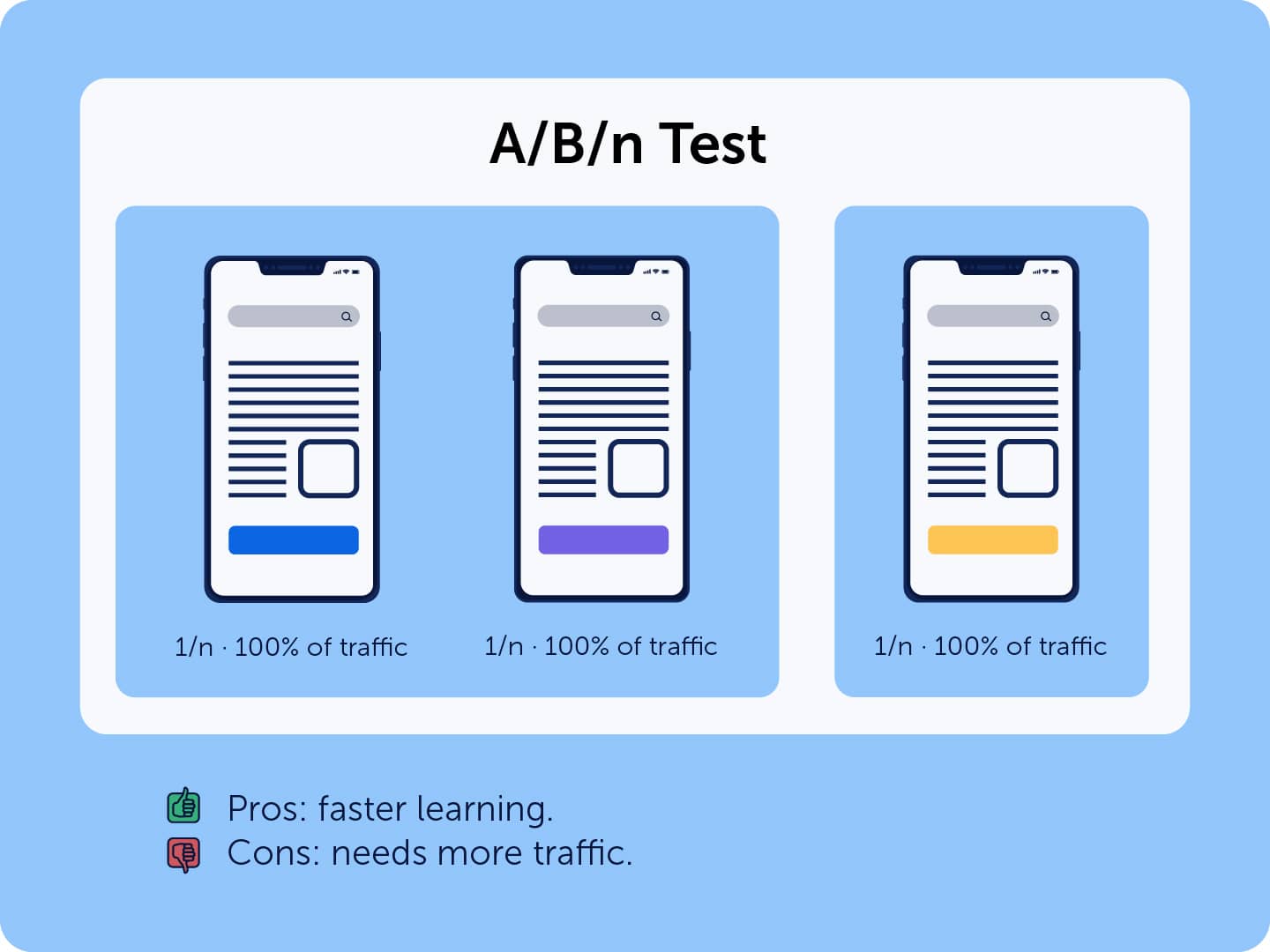

- A/B/n testing: An extension of A/B testing, this method allows testing multiple variations (e.g., A/B/C or A/B/C/D) against the control. This test type can be golden when testing different messaging, for example. Pros: you get to choose the best variation out of several, and the conversion increase can be higher than in an A/B test. Cons: for the test to be conclusive, it requires much more traffic. And it can be harder to set up.

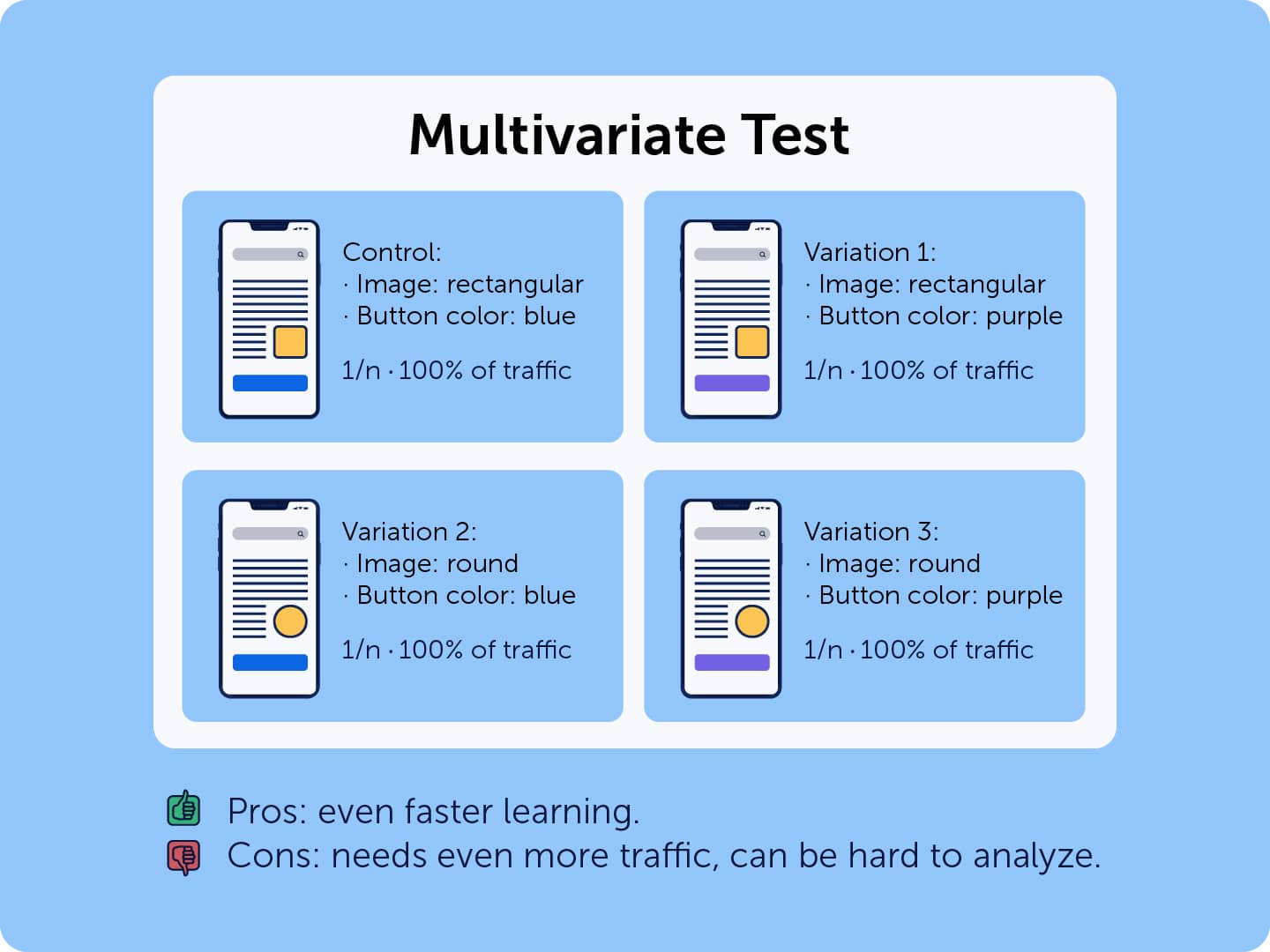

- Multivariate testing (MVT): In MVT, you test multiple variables – change a few things at once to see which combination performs best. For example, changing two elements with two versions each creates four combinations: AX, AY, BX, BY. Changing three elements the same way creates eight. This method is valuable for gaining deep insights but requires very high traffic volumes to achieve statistical significance.
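To see how quickly multivariate tests grow, you can enumerate the combinations yourself. This is a small sketch using Python’s standard `itertools.product`; the element names and versions are placeholders.

```python
from itertools import product


def mvt_combinations(elements):
    """elements maps an element name to the list of versions being tested."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


# Two elements with two versions each.
two_by_two = mvt_combinations({"headline": ["A", "B"], "cta": ["X", "Y"]})

# Three elements with two versions each.
three_by_two = mvt_combinations(
    {"headline": ["A", "B"], "cta": ["X", "Y"], "image": ["P", "Q"]}
)

# Two elements with three versions each.
two_by_three = mvt_combinations(
    {"headline": ["A", "B", "C"], "cta": ["X", "Y", "Z"]}
)

print(len(two_by_two), len(three_by_two), len(two_by_three))
```

The number of combinations is the product of the number of versions per element, and each combination needs enough visitors of its own. That is why MVT is so traffic-hungry.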

After you’ve decided on the type of the test and documented everything, it’s time to build the variation and run the experiment.

3. Testing (Usually A/B testing, But Not Necessarily)

We have a blog post dedicated to CRO tests and doing them the right way, which covers this stage in depth. Here, we’ll quickly go through some important things to do at the testing stage, aside from pressing the “start the test” button.

- Run an A/A test to make sure your setup is working properly.

- As the experiment is running, watch how your users interact with your website both in variations and in the control version. That helps ensure that everything’s working as expected. Watch replays of visitor sessions coming from different device types and browsers to know the experience is equally pleasant everywhere.

- Don’t stop the experiment prematurely if you (or your boss) think it’s not working out (unless something is really broken, of course).

- Set up conversion funnels in your behavior analytics tool for control and variations to get a quick overview of conversion rates at a glance.
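If your tools only give you raw counts per funnel step, the comparison between control and variation is easy to compute by hand. The sketch below uses invented step names and visitor counts; `funnel_rates` is our own helper, not a feature of any particular analytics tool.

```python
def funnel_rates(step_counts):
    """step_counts is an ordered list of (step name, visitors who reached it).

    Returns the step-to-step conversion rates and the overall conversion rate.
    """
    rates = []
    for (prev_name, prev_n), (name, n) in zip(step_counts, step_counts[1:]):
        rates.append((f"{prev_name} -> {name}", n / prev_n if prev_n else 0.0))
    first, last = step_counts[0][1], step_counts[-1][1]
    overall = last / first if first else 0.0
    return rates, overall


# Hypothetical counts, for illustration only.
control = [("product", 10_000), ("cart", 1_500), ("checkout", 600), ("purchase", 200)]
variation = [("product", 10_000), ("cart", 1_550), ("checkout", 640), ("purchase", 220)]

for label, funnel in (("control", control), ("variation", variation)):
    rates, overall = funnel_rates(funnel)
    print(label, f"overall: {overall:.2%}")
    for step, rate in rates:
        print(f"  {step}: {rate:.1%}")
```

Looking at each step separately shows where in the journey the variation actually makes a difference, not just whether the final number moved.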

What You Should Test: A/B Test Examples

As we’ve stated above multiple times, the best ideas to test always come from data. But if you don’t know where to start, so-called CRO best practices actually make for decent hypotheses. So, you can try experimenting with:

- Using social proof and trust signals. And also changing their placement, how they look, and where users face them in the user journey. Social proof has a tremendous influence on trust, and as a result, on conversions. What type of social proof resonates best with your customers? That’s a good test!

- Tweaking forms – for lead generation or for checkout. Gather evidence about how website visitors use your forms (you can use a form analysis tool for that), and this evidence can give you an idea if you need to shorten the form, or, on the contrary, lengthen it (yes, that also can improve conversions in some cases).

- Adding scarcity to increase urgency. “Only 2 units left in stock,” or “146 rooms booked in this city in the last 24 hours! Only 32% of hotel rooms are still available!”, or something like that. You can try it, but again, treat this as a hypothesis that has a chance to fail. For example, Jonny Longden, Group Digital Director at Boohoo, described how urgency can decrease conversion rates if your audience doesn’t like to rush.

- Optimizing CTA placement and design. To enhance the effectiveness of your CTAs, you can experiment with the visual hierarchy – using contrasting colors and prominent sizes, strategically placing them where users naturally pause. CTA copy can also be a game-changer. And if you needed a reason to run A/B/n tests, that is the perfect use case for them.

Read this post to get more CRO test examples for ecommerce.

4. Analysis

After the experiment concludes, the first thing to do is to see if the results are trustworthy. And that means checking whether they are statistically significant.

How Important is Statistical Significance in CRO

Statistical significance in A/B testing tells us how likely it is that the differences in performance between two versions are not due to random chance.

It’s a measure that helps ensure the results are reliable and can be used to make informed decisions. Typically, a test result is considered statistically significant (aka conclusive) if the probability of the result occurring by chance is less than 5% (p-value < 0.05).

Reaching that threshold requires a lot of traffic or long test durations, so some CRO experts suggest lowering the required confidence level from 95% to 90%, which leaves a 10% chance that the observed difference is just random noise.
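To get a feel for how much traffic a test needs, you can estimate the sample size before you start. The sketch below uses the standard normal-approximation formula for comparing two conversion rates. The 80% statistical power is our assumption (a common default that this guide doesn’t prescribe), and the function name is ours.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_lift, confidence=0.95, power=0.80):
    """Approximate visitors needed per variant to detect a relative lift.

    Two-sided test on two proportions, normal approximation.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Detecting a 10% relative lift on a 2% baseline conversion rate.
n_95 = sample_size_per_variant(0.02, 0.10, confidence=0.95)
n_90 = sample_size_per_variant(0.02, 0.10, confidence=0.90)
print(n_95, n_90)
```

With a low baseline conversion rate and a modest lift, the required sample runs into tens of thousands of visitors per variant, and relaxing the confidence level to 90% reduces it noticeably.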

Pretty much every A/B testing tool calculates statistical significance for you, but if yours doesn’t, you can use a statistical significance calculator.
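Under the hood, such calculators typically run something like a two-proportion z-test. Here’s a minimal sketch of one using only Python’s standard library. Real testing tools may use different statistics (Bayesian or sequential methods, for example), so treat this as an illustration rather than a replacement for your tool.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical test: 2.0% vs 2.2% conversion with 20,000 sessions per variant.
small = two_proportion_p_value(400, 20_000, 440, 20_000)

# Same conversion rates with ten times more traffic.
large = two_proportion_p_value(4_000, 200_000, 4_400, 200_000)

print(f"small sample p-value: {small:.3f}, significant: {small < 0.05}")
print(f"large sample p-value: {large:.6f}, significant: {large < 0.05}")
```

The same 10% relative lift is inconclusive on the smaller sample and clearly significant on the larger one, which is why low-traffic sites need longer test durations.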

But achieving statistical significance isn’t a must to implement the variation. In some cases, the tests would be inconclusive, but looking at session replays and seeing how your users’ behavior has changed could be enough to decide if you should go with the changes or drop them.

CRO Tools

We discussed that CRO can be a very broad thing. That’s why doing it properly requires a broad set of tools. But in many cases, one platform offers many tools at once, so you don’t need to actually buy a dozen different products.

We won’t bore you by going through, yet again, why Mouseflow is your best tool for supplying hypothesis data and experiment result analysis (even though we still believe so). Instead, in this section we’ll focus on what kinds of conversion rate optimization tools you may need and what properties they should have.

Quantitative Analytics

This is your main source of numbers. It comes in handy when starting to develop hypotheses, and also for tracking experiment results.

What properties to look for:

- Data sampling. Many analytics tools (Google Analytics 4 included) sample the data, and as a result, the picture that you get can be slightly wrong or incomplete. Not using sampling is always better.

- Conversion goals. Being able to set up different events as goals can help a lot at the analysis stage. The flexibility in configuring conversion metrics is important for CRO.

Analytics tool examples: Google Analytics 4, Adobe Analytics, Mixpanel.

Read more about the impact of data sampling on the data quality

Session Replay and Heatmaps

This is your main source of user behavior data for hypotheses. Watching session replays helps to come up with a hypothesis, see if your test is going as planned, and analyze the results of the test. It’s hard to imagine doing CRO properly without relying on a session recording tool and heatmapping.

What properties to look for:

- Quality of the replay. Being able to see a high-res picture with all the user events is a lot different from watching a low-res video where you can’t inspect elements and don’t see what exactly caused user friction.

- Types of heatmaps the tool offers. Most tools offer click heatmaps and scroll heatmaps. However, geo heatmaps and attention heatmaps (aka eye-tracking) can also be useful tools for CRO, and few tools offer both.

- Data sampling. When it comes to heatmaps, data sampling hits even harder than in the case with quantitative analytics. If you want precise heatmaps, choose a tool that doesn’t sample.

Session replay & heatmap tool examples: Mouseflow, Hotjar, Microsoft Clarity.

Compare the best session replay and heatmap tools

User Feedback

This is not an absolutely necessary CRO tool, but it helps to get a different source of data to support your hypotheses.

What properties to look for:

- Ability to trigger surveys on different event types. Usually, you want to ask users something in particular situations – when they’ve just pressed a button, when they have been inactive for some time, or something like that. It’s better if the user feedback tool offers flexibility here.

- Automated survey analysis. Analyzing feedback can be pretty time consuming. So, it’s good if the tool offers, for example, to automatically calculate the NPS score for you. Or to analyze the sentiment of the responses.
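If your tool doesn’t calculate NPS for you, it’s simple to do from raw survey answers. The sketch below follows the standard NPS definition: on a 0 to 10 scale, scores of 9 and 10 are promoters, scores of 0 to 6 are detractors, and NPS is the percentage of promoters minus the percentage of detractors. The sample responses are made up.

```python
def nps(scores):
    """Net Promoter Score from a list of 0-10 survey answers."""
    if not scores:
        raise ValueError("at least one response is required")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical survey responses, for illustration only.
responses = [10, 9, 8, 7, 6, 3, 10, 9, 5, 10]
print(nps(responses))
```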

If you choose Mouseflow as your heatmaps and session replay platform, you get a user feedback tool at no additional cost, so why not make use of it?

User feedback tool examples: Mouseflow, Hubspot, SurveyMonkey.

Form Analytics

Another auxiliary CRO tool, one that helps only with optimizing forms. But it really simplifies the process.

What properties to look for:

- Privacy. Forms are where a lot of personal information ends up. You need to avoid capturing it to stay compliant with privacy laws, so make sure that your form analytics allows you to exclude fields with passwords, emails, addresses, and phone numbers.

- Form compatibility. There are so many types of forms provided by so many different platforms, that it’s hard for form analytics tools to ensure compatibility with all of them. Just make sure yours is compatible with all types of forms that you use or plan on using.

Again, with Mouseflow you get a form analysis tool at no additional cost.

Form analytics tool examples: Mouseflow, Hubspot, Zuko.io.

A/B Testing Tools

A staple for proper experimentation – if you want to do proper CRO, using an A/B testing tool is a must. Google sunset the free and beloved Google Optimize a while ago, but there are a bunch of other tools that offer even more functionality, albeit at a higher cost. Most of them are great, but there are a few things to pay attention to when choosing one.

What properties to look for:

- Integrations with the rest of your CRO tech stack. Getting to see data for a specific experiment or a specific variation is invaluable, and for that, you need to integrate your A/B testing tool with your analytics tools.

- Effect on load time. Every tool that integrates with your website has a toll on how fast it loads, and A/B testing tools tend to be particularly nasty in this regard. So, try choosing a tool that doesn’t slow your website down too much.

A/B testing tool examples: AB Tasty, Intellimize, Optimizely.

Other Tools

There will also be other tools that you would use to run your experiments. It could be landing page builders to tweak landing pages, email automation platforms to experiment with nurturing flows, etc. There are too many of them to cover in this guide, but we have a separate post dedicated to the best of the best.

Read more about the best CRO tools for different purposes.

CRO Case Studies

We’ve talked enough about theory. Now let’s take a look at some real-life examples of how brands optimize their websites for conversions and adopt experimentation mindsets.

How Rains Increased Conversions by 10%

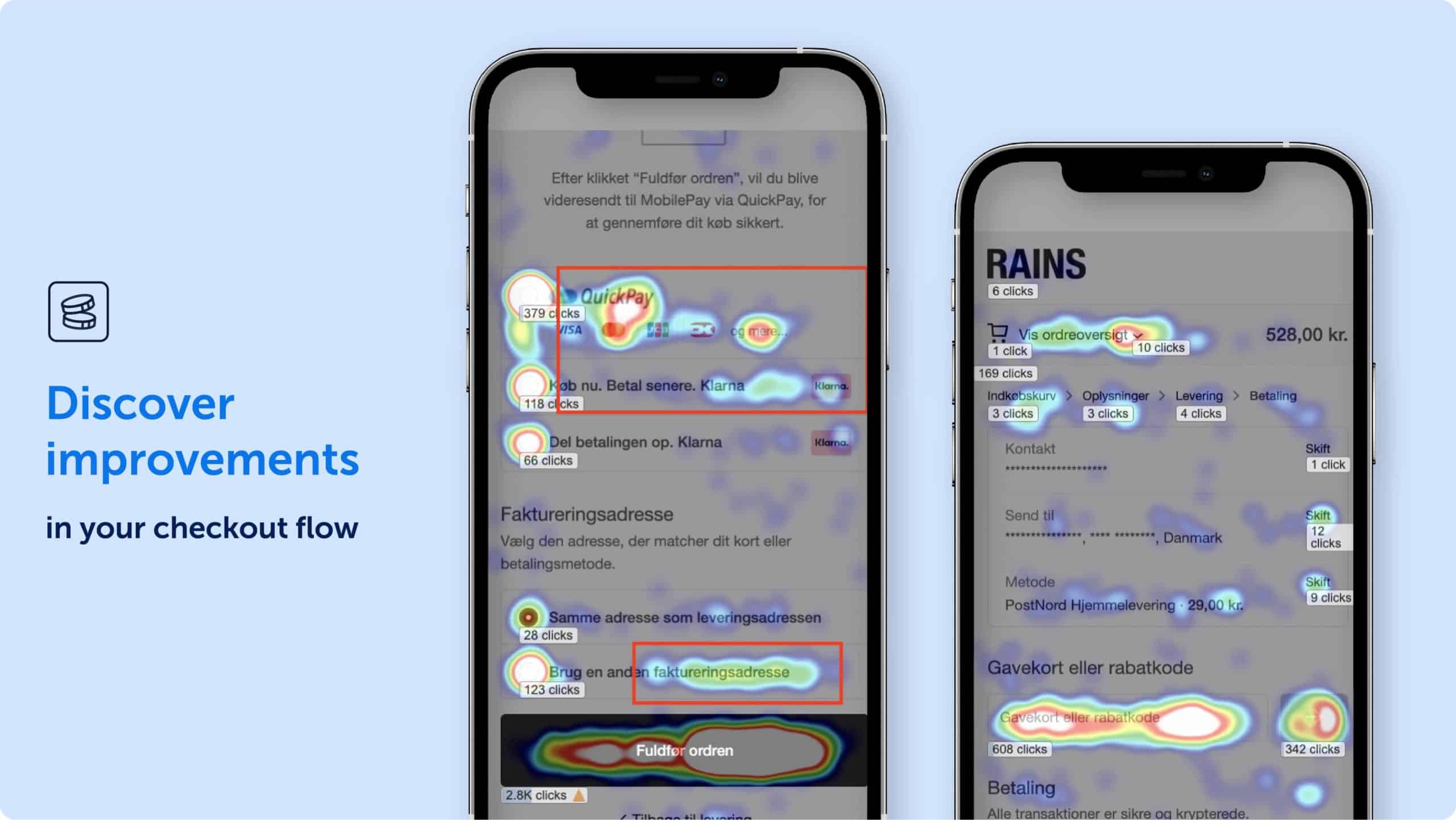

Danish fashion brand Rains improved their website conversion rates by 9.8% for the cart and 10.8% for the checkout flow.

They used Mouseflow to record over 500,000 sessions and then analyzed them to identify pages with high friction and errors.

They turned insights from session recordings and heatmaps into hypotheses for A/B testing with Google Optimize (may it rest in peace, we miss it too). Their tests focused on usability improvements like making unclickable elements clickable and refining the checkout process.

They implemented the changes when the probability of the test being conclusive was over 90%. We already mentioned the results – a ~10% CR increase.

RAINS relied on heatmaps to find friction in their checkout flow

How Derek Rose Got 37% More Conversions Out of Their Site

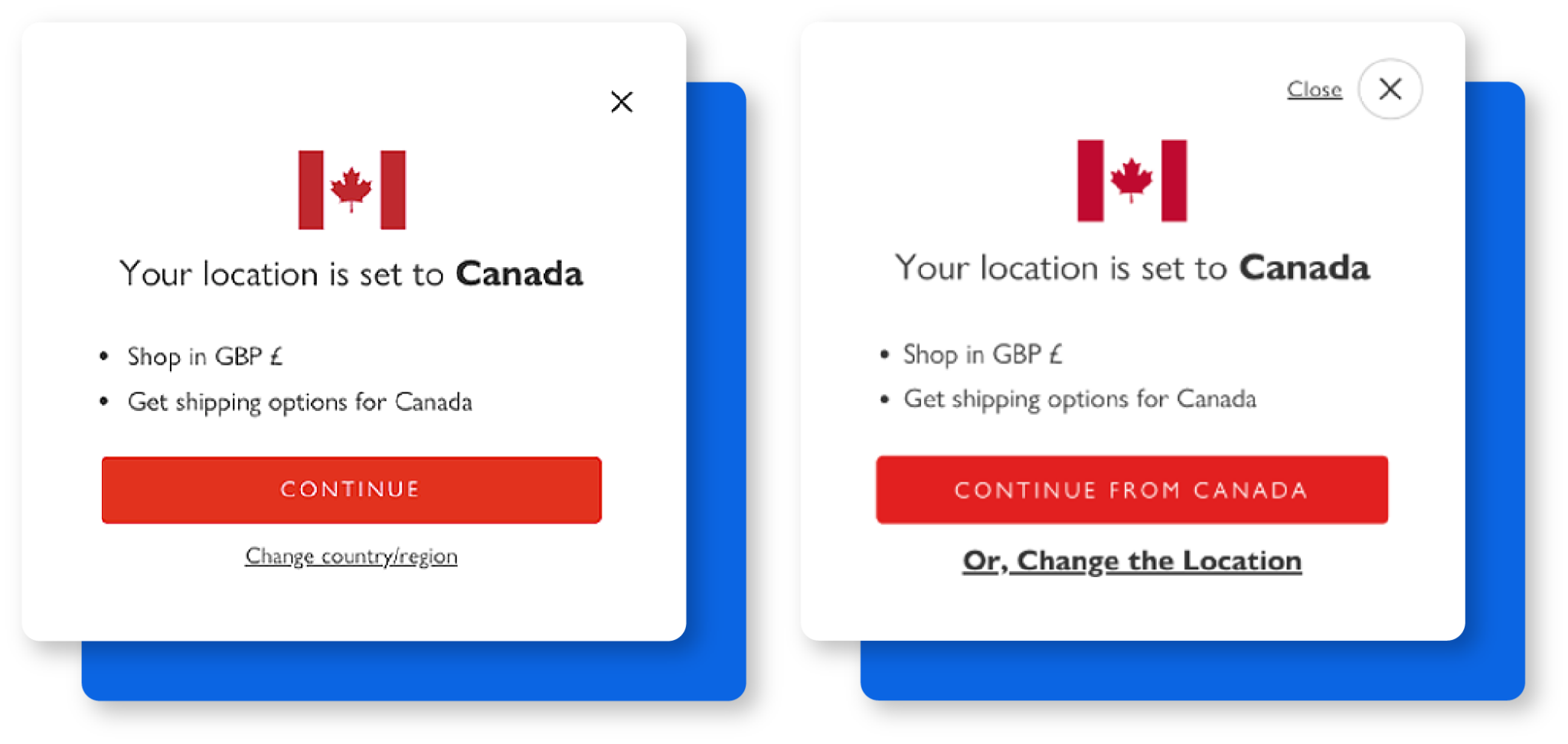

Luxury nightwear brand Derek Rose improved their conversion rate by focusing on user experience adjustments.

They relied on session replay and auxiliary data that Mouseflow offered to look for user friction such as page reloads and click rage. That allowed them to discover issues with popup design and navigation.

They proceeded to redesign the popup, optimize the mobile navigation menu, and fix a few more UI elements here and there. Combined, these UX enhancements led to a 37% increase in conversion rate.

Derek Rose’s analysis of user behavior led to changing how the location pop-up worked

How Ecooking Optimized Their Mobile Website and Got 10% More Conversions

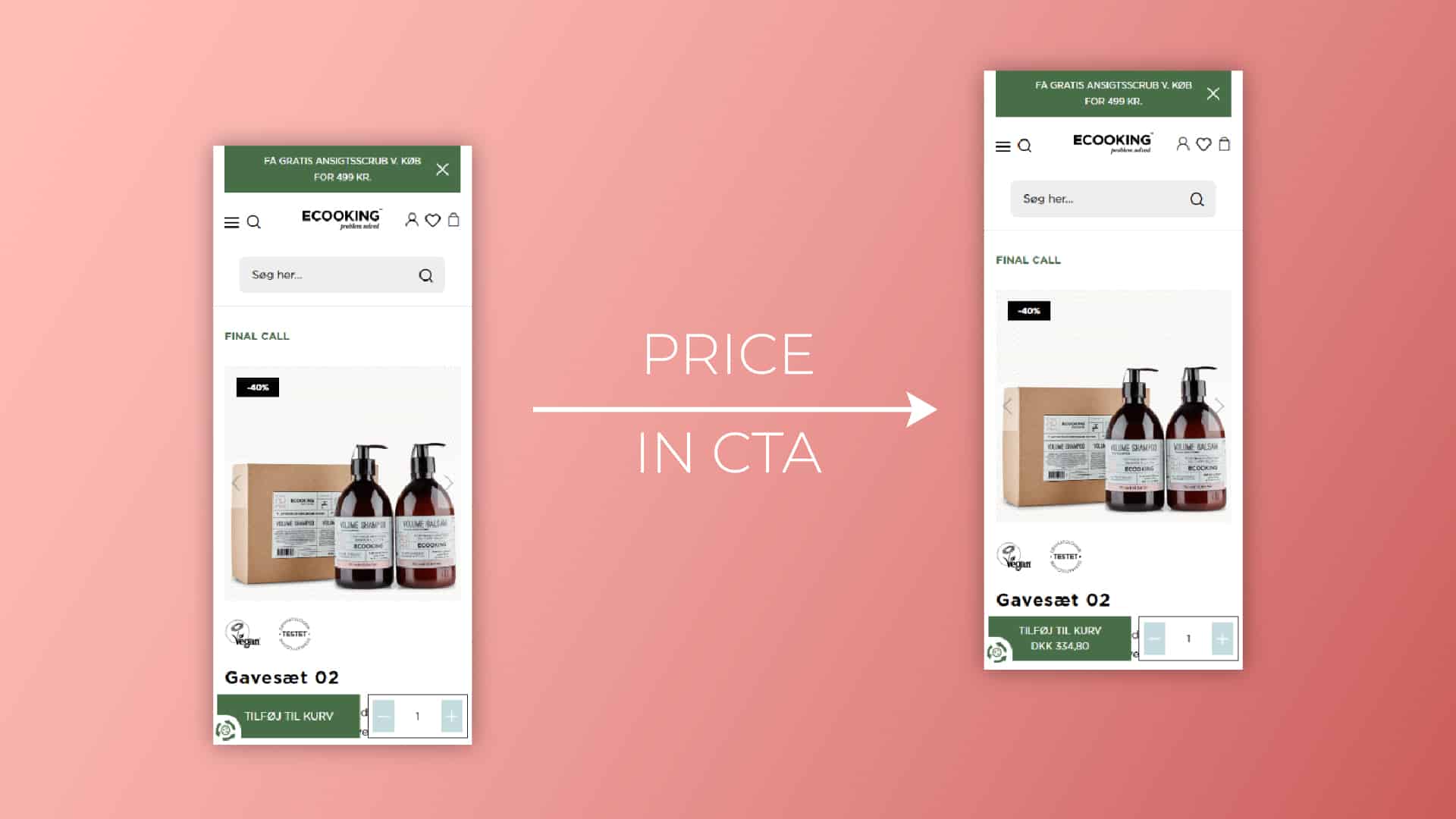

Global skincare and cosmetics brand Ecooking approached optimizing conversions the classical way.

They recorded more than 450,000 sessions with Mouseflow, and used that data to identify high-friction pages and user errors. Then, they ran A/B tests to validate improvements.

Notable changes that proved to have a positive impact included making unclickable elements interactive and improving product description pages (PDPs) by highlighting the key selling points.

The result of one not very extensive optimization sprint was a 10% conversion uplift.

Among other things, Ecooking tested changing CTA copy

Check out other CRO case studies.

Conclusion

Literally every website can benefit from conversion rate optimization.

At the same time, CRO isn’t just about increasing sales by making small tweaks to your website. It’s about adopting a culture of experimentation and understanding your customers. The main value of each test is not in the conversion increase it delivers, but in what the team learns from it.

Focusing on learning, rather than just the immediate outcomes, can transform the whole organization in a very positive way, including, of course, an increase in conversions.